What to do when data is not normally distributed in statistics

The assumption of normality is a key requirement for many parametric statistical tests, such as t-tests and ANOVA. If you're unsure whether your data meet this assumption, it can raise valid concerns about the reliability of your results. In this post, we'll explore why normality matters, how to identify when your data deviate from it, and what steps you can take in response—or whether any action is needed at all. Whether you're an experienced data analyst or just starting out, understanding how to work with non-normal data is crucial for drawing sound conclusions. Let’s dive into some practical strategies and best practices to tackle this common issue.

Understanding the importance of normality in statistical tests

The normality assumption plays a critical role in controlling the likelihood of errors in statistical testing. Specifically, statistical tests are designed to limit the risk of a Type I error, that is, concluding that there is an effect when, in fact, there is none. To manage this risk, we calculate the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true; this is known as the p-value. We then compare the p-value to a predefined threshold, called alpha (commonly set at 0.05), to decide whether to reject the null hypothesis. This framework relies on several assumptions, most importantly that the data (or, more precisely, the sampling distribution of the estimate the test statistic is built on, such as the sample mean) follow a normal distribution. When this assumption is violated, especially in smaller samples, the test statistic may not behave as expected, leading to inaccurate p-values and a true Type I error rate that differs from the nominal alpha, often exceeding it.

In addition, the normality assumption matters for controlling Type II errors, that is, failing to detect a real effect. Power analysis, which is used to determine the sample size required to detect an effect with a given probability, is typically based on the assumption of normality. If this assumption does not hold, the resulting sample size calculations may be misleading, reducing the likelihood of detecting true effects.

In short, the normality assumption is fundamental to controlling both Type I and Type II errors, making it essential for the accuracy and validity of statistical conclusions.
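
To make the Type I error point concrete, here is a minimal simulation sketch in Python, assuming NumPy and SciPy are available. The helper name `type_one_error_rate`, the sample size of 10, and the lognormal distribution used as the non-normal case are illustrative choices, not part of any specific analysis. In both scenarios the null hypothesis is true, so every rejection is a false positive.

```python
import numpy as np
from scipy import stats


def type_one_error_rate(sampler, true_mean, n, n_sims=5000, alpha=0.05, seed=0):
    """Share of one-sample t-tests that reject a null hypothesis that is true."""
    rng = np.random.default_rng(seed)
    samples = sampler(rng, (n_sims, n))  # one simulated experiment per row
    p_values = stats.ttest_1samp(samples, popmean=true_mean, axis=1).pvalue
    return float(np.mean(p_values < alpha))


# Normal data: the rejection rate should sit close to alpha.
normal_rate = type_one_error_rate(
    lambda rng, size: rng.normal(1.0, 1.0, size), true_mean=1.0, n=10
)

# Strongly skewed data (lognormal with mu=0, sigma=1); its true mean is exp(0.5).
skewed_rate = type_one_error_rate(
    lambda rng, size: rng.lognormal(0.0, 1.0, size), true_mean=np.exp(0.5), n=10
)

print(f"false positive rate, normal data: {normal_rate:.3f}")
print(f"false positive rate, skewed data: {skewed_rate:.3f}")
```

With settings like these, the rate for normal data should land near 0.05, while the skewed data will usually reject noticeably more often. Increasing `n` is a quick way to see how the gap changes with sample size.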

Recognizing and diagnosing non-normal data

To determine whether your data deviate from normality, the first step is to look directly at the data. Begin with visualizations like histograms or density plots to get a general sense of the distribution’s shape. Q-Q (quantile-quantile) plots are especially helpful: they compare the quantiles of your data to those of a normal distribution, and deviations from the diagonal line suggest non-normality. Alongside visual inspection, formal statistical tests such as the Shapiro-Wilk or Kolmogorov-Smirnov test can be used. These tests provide p-values that indicate whether the data significantly deviate from a normal distribution. Keep in mind that they have little power in small samples and will flag trivial deviations in very large ones, which is why using both visual and statistical methods gives you a more complete and reliable assessment.
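
The checks described above can be sketched in a few lines of Python with NumPy and SciPy. The function name `normality_report` and the two simulated samples are assumptions made for illustration; swap in your own data.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
normal_sample = rng.normal(loc=50, scale=5, size=200)
skewed_sample = rng.exponential(scale=5, size=200)


def normality_report(x):
    """Collect a few normality diagnostics for a 1-D sample."""
    x = np.asarray(x, dtype=float)
    shapiro_p = stats.shapiro(x).pvalue
    # K-S against a standard normal after standardizing. Estimating the mean
    # and standard deviation from the same data makes this p-value too large
    # (conservative), so treat it as a rough guide only.
    z = (x - x.mean()) / x.std(ddof=1)
    ks_p = stats.kstest(z, "norm").pvalue
    # probplot returns the Q-Q points plus a least-squares fit; r close to 1
    # means the points hug the straight line.
    _, (_, _, qq_r) = stats.probplot(x, dist="norm")
    return {
        "shapiro_p": float(shapiro_p),
        "ks_p": float(ks_p),
        "qq_r": float(qq_r),
        "skewness": float(stats.skew(x)),
    }


normal_report = normality_report(normal_sample)
skewed_report = normality_report(skewed_sample)
print("normal sample:", normal_report)
print("skewed sample:", skewed_report)
```

To draw the Q-Q plot itself, `stats.probplot(x, dist="norm", plot=plt)` with `matplotlib.pyplot` imported as `plt` is one option.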

When you notice that your data stray from normality, it's worth digging into the reasons. The distribution might be nearly normal, but small deviations can arise from skewness, outliers, or mixtures of multiple subpopulations. In other cases, the data may follow a fundamentally different distribution, such as exponential or uniform. Measurement issues can also play a role—using imprecise instruments, discrete scales, or ordinal variables can compress or distort the data, making it harder to meet the normality assumption.

And if your data aren’t normally distributed? Don’t worry. There are many statistical techniques and adjustments available to handle non-normal data effectively. The key is to recognize the issue and choose methods that match your data’s characteristics.

Identifying causes of non-normal distribution in data

When your data don't follow a normal distribution, it's useful to dig into why. Extreme values and outliers are a common cause: they can skew the data and lead to misleading conclusions. These outliers might stem from measurement errors, data entry mistakes, or genuine anomalies that are part of the data's natural spread.

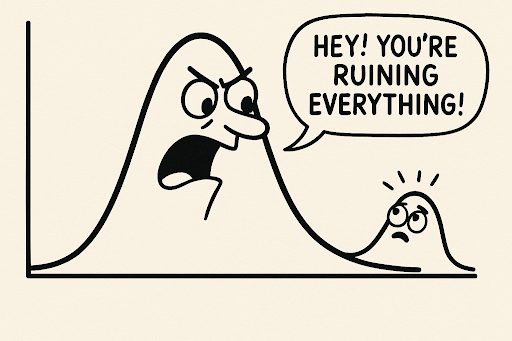

Another culprit behind non-normality is the mixing of multiple overlapping processes. Combining data from different sources or processes can result in bimodal or otherwise irregular distributions. Imagine analyzing customer behavior from two distinct segments: the combined data might look nothing like the neat bell curve we're used to.

Then there are natural limits and data boundaries. Data collected near a natural limit, like zero or a max value, can introduce skewness. This is especially common with physical measurements or scales that have a set range. Recognizing these boundaries helps us understand the twists in our data's distribution.

Spotting these causes is the first step in tackling non-normal data. Once we know the root of the non-normality, we can make smarter choices about how to handle it.
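
Two of these causes are easy to reproduce with simulated data. The sketch below is illustrative only: the segment means, the `wait_times` variable, and the sample sizes are made-up assumptions. It mixes two normally distributed segments into one bimodal sample and generates a variable bounded at zero.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(7)

# Two hypothetical customer segments, each normally distributed on its own.
segment_a = rng.normal(loc=20, scale=5, size=150)
segment_b = rng.normal(loc=60, scale=5, size=150)
combined = np.concatenate([segment_a, segment_b])

# A hypothetical measurement with a natural lower limit at zero.
wait_times = rng.exponential(scale=2.0, size=300)

# Strongly negative excess kurtosis is a typical signature of a balanced
# two-peaked mixture; large positive skewness is typical near a lower bound.
mixture_kurtosis = float(stats.kurtosis(combined))
mixture_shapiro_p = float(stats.shapiro(combined).pvalue)
bounded_skewness = float(stats.skew(wait_times))

print(f"mixture excess kurtosis: {mixture_kurtosis:.2f}")
print(f"mixture Shapiro-Wilk p:  {mixture_shapiro_p:.2g}")
print(f"bounded data skewness:   {bounded_skewness:.2f}")
```

If a mixture is the cause, analyzing each segment separately is often more informative than transforming the pooled data.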

Strategies for handling non-normal data

So, you're dealing with non-normal data—what now? Fortunately, you have several strategies at your disposal. Two common approaches are applying data transformations or using nonparametric methods. Each has its strengths and trade-offs, and the best choice depends on your data and your analytical goals. In fact, sometimes the best course of action is to do nothing at all.

One option is to use nonparametric methods, which don’t rely on the assumption of normality. Tests like the Mann-Whitney U test or the Kruskal-Wallis test are robust to skewed, heavy-tailed, or multi-modal distributions. They’re particularly useful when your data deviate substantially from normality. However, these methods often use ranks instead of raw values, which can make interpretation less intuitive. They may also have lower statistical power than parametric tests when deviations from normality are relatively minor. Alternatively, if you know the true underlying distribution of your data—even if it's not normal—you can use statistical models tailored to that distribution, such as generalized linear models (GLMs) or distribution-specific regression approaches.
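
As a rough sketch of the rank-based tests mentioned above, SciPy provides `mannwhitneyu` for two groups and `kruskal` for three or more. The group names and the simulated lognormal data are assumptions made for the example.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)

# Skewed outcome data for three hypothetical experiment groups.
control = rng.lognormal(mean=0.0, sigma=1.0, size=60)
variant = rng.lognormal(mean=1.0, sigma=1.0, size=60)
third_group = rng.lognormal(mean=0.0, sigma=1.0, size=60)

# Two groups: Mann-Whitney U test, based on the ranks of the pooled values.
mw_result = stats.mannwhitneyu(control, variant, alternative="two-sided")

# Three or more groups: Kruskal-Wallis test, the rank-based analogue of
# one-way ANOVA.
kw_result = stats.kruskal(control, variant, third_group)

print(f"Mann-Whitney U p-value: {mw_result.pvalue:.4g}")
print(f"Kruskal-Wallis p-value: {kw_result.pvalue:.4g}")
```

Note that these tests compare the groups' distributions through ranks rather than their means, so a significant result is not a direct statement about a difference in averages.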

Another powerful method is bootstrapping. This resampling technique lets us estimate statistics without leaning on normality. By repeatedly sampling with replacement from our original data, we build a sampling distribution of the statistic of interest. This approach is handy for inference and hypothesis testing when standard assumptions don't hold.
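
A minimal percentile-bootstrap sketch, assuming NumPy only, might look like this. The helper `bootstrap_ci`, its defaults, and the simulated `revenue` data are hypothetical; the percentile interval is just one of several bootstrap interval types.

```python
import numpy as np


def bootstrap_ci(data, statistic=np.median, n_resamples=5000,
                 confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for a statistic."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    # Each row of idx selects one resample, drawn with replacement.
    idx = rng.integers(0, len(data), size=(n_resamples, len(data)))
    estimates = statistic(data[idx], axis=1)
    tail = (1.0 - confidence) / 2.0
    lower, upper = np.quantile(estimates, [tail, 1.0 - tail])
    return float(lower), float(upper)


rng = np.random.default_rng(5)
revenue = rng.lognormal(mean=3.0, sigma=1.0, size=80)  # skewed example data

ci_lower, ci_upper = bootstrap_ci(revenue)
print(f"sample median: {np.median(revenue):.2f}")
print(f"95% bootstrap CI for the median: ({ci_lower:.2f}, {ci_upper:.2f})")
```

The `statistic` argument must accept an `axis` keyword, as `np.median` and `np.mean` do. SciPy also ships `scipy.stats.bootstrap`, which offers further interval methods.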

Another route is to stick with parametric tests but modify the data to better meet the normality assumption. This can be done through data transformations, such as applying a logarithmic, square root, or Box-Cox transformation. These techniques can reduce skewness, stabilize variance, and bring the distribution closer to normality, improving the validity of tests like t-tests and ANOVA. However, transformations can complicate interpretation, since the analysis is conducted on transformed values rather than the original measurement units. In some cases, addressing outliers is more effective than transforming the entire dataset. If your data are nearly normal but contain extreme values, techniques like trimming, outlier removal, or winsorization can help mitigate their impact without altering the core structure of your data.
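
The transformations above can be tried out quickly with NumPy and SciPy. In this sketch the simulated `raw` data and the 5th/95th percentile cutoffs for winsorization are arbitrary assumptions; note that the log and Box-Cox transformations require strictly positive values.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(3)
raw = rng.lognormal(mean=2.0, sigma=0.8, size=500)  # right-skewed, positive

# Log transform.
log_values = np.log(raw)

# Box-Cox: SciPy picks the lambda that maximizes the log-likelihood.
boxcox_values, boxcox_lambda = stats.boxcox(raw)

# Winsorization: pull values beyond the 5th/95th percentiles back to them.
low, high = np.percentile(raw, [5, 95])
winsorized = np.clip(raw, low, high)

for name, values in [("raw", raw), ("log", log_values),
                     ("box-cox", boxcox_values), ("winsorized", winsorized)]:
    print(f"{name:>10}: skewness = {stats.skew(values):.2f}")
print(f"estimated Box-Cox lambda: {boxcox_lambda:.2f}")
```

Whichever transformation you choose, remember to report results in a way that maps back to the original units, for example by back-transforming means and interval endpoints.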

Finally, there are situations where you may not need to make any adjustments at all. Thanks to the Central Limit Theorem, the sampling distribution of the mean tends to become approximately normal as the sample size increases, for essentially any data distribution with finite variance. This means that with a large enough sample, parametric tests can still perform reliably even when the raw data aren’t perfectly normal. How large is large enough depends on the data: heavily skewed or heavy-tailed distributions need more observations before the approximation holds.
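
The Central Limit Theorem effect is easy to see in a small simulation. The sketch below, with the hypothetical helper `skewness_of_sample_means` and an exponential distribution chosen as the skewed example, measures how asymmetric the distribution of sample means is for different sample sizes.

```python
import numpy as np
from scipy import stats


def skewness_of_sample_means(n, n_sims=10000, seed=0):
    """Skewness of the distribution of means of n exponential draws."""
    rng = np.random.default_rng(seed)
    means = rng.exponential(scale=1.0, size=(n_sims, n)).mean(axis=1)
    return float(stats.skew(means))


# A normal distribution has skewness 0; watch the value shrink as n grows.
skew_by_n = {n: skewness_of_sample_means(n) for n in (2, 30, 200)}
for n, skewness in skew_by_n.items():
    print(f"n = {n:>3}: skewness of sample means = {skewness:.2f}")
```

For exponential data the theoretical skewness of the sample mean is 2 divided by the square root of n, so the simulated values should fall steadily toward zero as the sample size increases.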

Closing thoughts

Navigating the challenges of non-normal data is a vital skill in statistical analysis. Ultimately, your approach should reflect the type and extent of non-normality, the size of your sample, and the goals of your analysis. Understanding when normality matters—and how to handle deviations from it—is key to conducting valid, reliable statistical tests. At Statsig, we understand how common these challenges are, and we provide tools to help you navigate them with confidence.
