Introduction to AI experimentation

Remember when testing AI meant months of offline model training before you'd know if anything actually worked? Those days are gone.

If you're still treating AI development like traditional software - with long release cycles and careful offline validation - you're missing out on what makes today's AI development actually exciting.

The shift to online experimentation isn't just a trend; it's a fundamental change in how successful AI products get built. Instead of guessing what users want, you can now launch features quickly, learn from real interactions, and iterate based on actual data.

The evolution of AI experimentation

AI experimentation used to be a slow, painful process. You'd collect massive datasets, train models offline for weeks, and hope your assumptions about user needs were correct. By the time you launched, the market had often already moved on.

But something fundamental changed with the rise of pre-trained models and APIs. Suddenly, you didn't need months of data collection just to get started. The Pragmatic Engineer captures this shift perfectly - AI engineering now focuses on leveraging readily available resources rather than building everything from scratch.

This democratization means you can prototype AI features in days, not months. Launch something basic, see how users actually interact with it, then improve based on real feedback. The teams winning in AI aren't the ones with the biggest models - they're the ones learning fastest from their users.

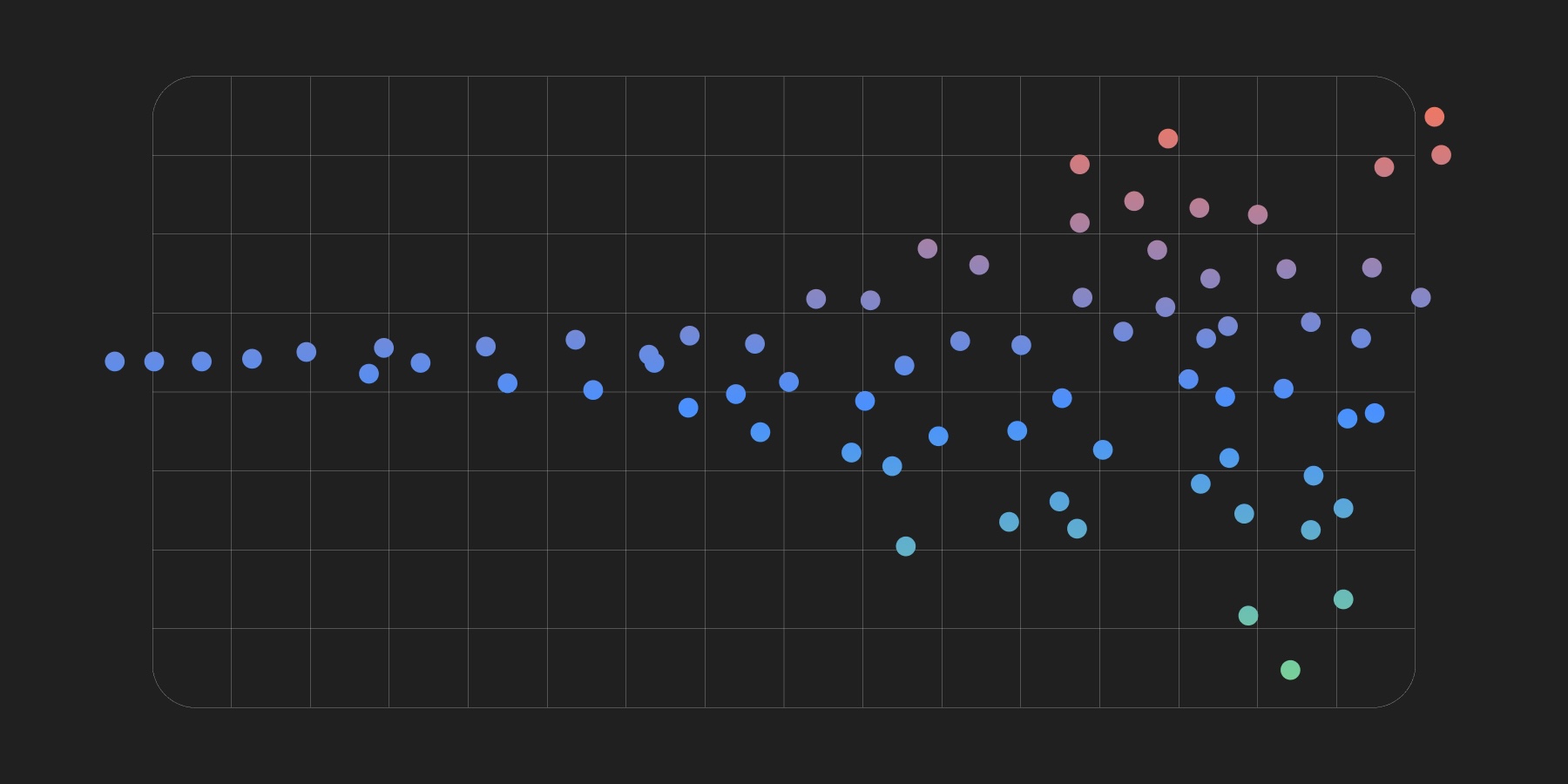

Online experimentation makes this rapid learning possible. Instead of guessing, you're getting continuous signals about what works. Statsig's research shows that companies embracing this approach ship better AI features faster, because they're building on actual user behavior rather than assumptions.

The key insight? Your first AI feature doesn't need to be perfect. It just needs to be good enough to start learning.

The importance of online experimentation in AI development

Here's the thing about AI features - they're unpredictable. What looks amazing in your test environment might completely fail with real users. That's why online experimentation isn't optional anymore; it's how you survive.

Real-time AI experiments give you three critical advantages:

- Immediate feedback on whether users actually find your AI helpful
- Data on edge cases you never imagined during development
- The ability to fix problems before they affect everyone

But speed creates its own challenges. How do you ship fast without breaking things? The answer lies in systematic experimentation. Tools like Statsig handle the complexity with feature gates, standardized logging, and statistical analysis that actually makes sense.

The magic happens when you start making real-time adjustments. User engagement dropping? Scale the rollout back to 50% of users while you investigate. Found a prompt that works better? Test it with a small group first. This isn't just risk management - it's how you find breakthrough improvements.
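One way to picture that kind of adjustment is a small guardrail rule. This is only a sketch under assumptions: the function name `next_rollout_percent`, the 5% tolerance, and the halving policy are all made up for illustration, and a real experimentation platform would apply its own logic.

```python
def next_rollout_percent(current_percent: float,
                         control_rate: float,
                         treatment_rate: float,
                         max_relative_drop: float = 0.05,
                         floor_percent: float = 1.0) -> float:
    """Halve the rollout when the treatment metric drops too far below control.

    Hypothetical policy: every threshold here is an assumption, not a
    recommendation from any particular tool.
    """
    if control_rate <= 0:
        # No usable baseline yet, so leave the rollout alone.
        return current_percent
    relative_change = (treatment_rate - control_rate) / control_rate
    if relative_change < -max_relative_drop:
        # Engagement fell more than we tolerate: cut exposure in half.
        return max(floor_percent, current_percent / 2)
    return current_percent


# Engagement fell from 40% to 30%, a 25% relative drop: scale back.
scaled_back = next_rollout_percent(100, control_rate=0.40, treatment_rate=0.30)
# Engagement fell from 40% to 39%, within tolerance: keep going.
unchanged = next_rollout_percent(100, control_rate=0.40, treatment_rate=0.39)
```

The point is less the exact numbers than having the rule written down before the experiment starts, so the decision to scale back isn't made in a panic.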

Developing with an experimentation-first mindset fundamentally changes how you approach AI features. Instead of big bang releases, you're constantly shipping small improvements. Each experiment teaches you something new about your users and your product.

Best practices for effective AI experimentation

Adopting an experimentation-first mindset sounds great in theory, but what does it actually mean day-to-day? Start by accepting that your first idea probably won't be the best one. This approach to AI development treats every feature as a hypothesis to test, not a finished product to ship.

Feature flags and A/B testing become your safety net. Want to try a new AI model? Roll it out to 5% of users first. Curious if a different prompt improves responses? Test both versions simultaneously. When experimenting with generative AI apps, this gradual rollout approach saves you from catastrophic failures while still moving fast.
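Under the hood, a percentage rollout usually comes down to deterministic bucketing. The sketch below is a minimal illustration and not Statsig's API: the helper `in_rollout`, the gate name `new_model_v2`, and the user IDs are all hypothetical. It hashes the gate name with the user ID so each user lands in a stable bucket.

```python
import hashlib


def in_rollout(user_id: str, gate_name: str, percent: float) -> bool:
    """Return True if this user falls inside the rollout percentage.

    Hypothetical helper: hashes gate name + user ID into one of 10,000
    buckets, so the same user always gets the same answer for a given gate.
    """
    digest = hashlib.sha256(f"{gate_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # stable bucket in [0, 9999]
    return bucket < percent * 100          # e.g. 5% -> buckets 0..499


users = [f"user-{i}" for i in range(20_000)]
exposed_5 = {u for u in users if in_rollout(u, "new_model_v2", 5)}
exposed_20 = {u for u in users if in_rollout(u, "new_model_v2", 20)}
share = len(exposed_5) / len(users)  # should land close to 0.05
```

Because the bucket depends only on the gate name and user ID, widening the rollout from 5% to 20% keeps everyone from the first group in the second. Nobody flips back and forth between the old and new experience.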

The tricky part is balancing speed with precision. You want to iterate quickly, but not so fast that you're making decisions on noise rather than signal. Successful AI products find this balance by starting simple and adding complexity only when data justifies it.
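To make "noise rather than signal" concrete, here is a rough sketch of a two-proportion z-test, one common way to check whether a difference in rates is bigger than chance would explain. The metric (a thumbs-up rate) and all the counts are invented for illustration, and experimentation platforms typically use more sophisticated methods than this.

```python
import math


def two_proportion_z_test(success_a: int, total_a: int,
                          success_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0  # identical all-or-nothing rates: no evidence either way
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same 12% vs 16% thumbs-up rates, at two very different sample sizes.
_, p_small = two_proportion_z_test(12, 100, 16, 100)
_, p_large = two_proportion_z_test(1_200, 10_000, 1_600, 10_000)
```

With 100 users per group, a four-point gap is well within what random variation produces. With 10,000 per group, the same gap is very unlikely to be chance. Moving fast is fine, as long as you wait for enough data before calling a winner.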

A structured approach to AI experimentation typically follows this pattern:

1. Launch with the simplest possible implementation
2. Measure actual user behavior (not just model accuracy)
3. Identify the biggest pain points from real usage
4. Test improvements on small user segments
5. Scale what works, kill what doesn't

But here's what really matters - collaboration between teams. Your product folks understand user needs. Engineers know what's technically possible. Data scientists can interpret the results. As Chip Huyen discusses, the best AI features emerge when these groups work together, not in silos.

Tools and strategies for successful AI experimentation

Let's talk about what actually makes AI experiments work in practice. The right tools can mean the difference between shipping weekly improvements and getting stuck in analysis paralysis.

Platforms like Statsig handle the heavy lifting of experiment management. You get visibility into both user metrics (engagement, retention) and model metrics (latency, accuracy) in one place. This dual view is crucial - a model might be technically impressive but still hurt user experience.

Standardized event logging might sound boring, but it's your secret weapon. When every AI interaction is logged consistently, you can:

- Compare different model versions fairly
- Spot patterns in user behavior
- Identify edge cases that break your AI
- Make decisions based on data, not gut feelings
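What "logged consistently" looks like will vary by team, but a shared event schema is a reasonable starting point. The sketch below is one possible shape, not a standard: the class `AIInteractionEvent` and every field name in it are assumptions made for illustration.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class AIInteractionEvent:
    """One logged AI interaction. Every field name here is an assumption."""
    user_id: str
    experiment: str
    variant: str
    model_version: str
    latency_ms: float
    outcome: str  # e.g. "accepted", "edited", "dismissed"
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: float = field(default_factory=time.time)


def to_log_line(event: AIInteractionEvent) -> str:
    """Serialize an event as one JSON line with a stable key order."""
    return json.dumps(asdict(event), sort_keys=True)


event = AIInteractionEvent(
    user_id="user-42", experiment="prompt_test", variant="prompt_b",
    model_version="model-2024-05", latency_ms=812.5, outcome="accepted",
)
line = to_log_line(event)
parsed = json.loads(line)
```

Because every interaction carries the same fields, including which variant and model version produced it, comparing versions later becomes a simple group-by rather than a data archaeology project.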

The real power comes from testing multiple changes simultaneously. Maybe you're trying a new model while also tweaking the UI. Good experimentation tools let you understand the impact of each change independently, avoiding the confusion of "did it work because of the model or the interface?"
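A common way to keep simultaneous tests separable is to assign each experiment independently, so a user's model variant tells you nothing about their UI variant. Here is a minimal sketch assuming hash-based assignment with the experiment name as a salt; the `assign` helper and the experiment and variant names are hypothetical.

```python
import hashlib
from collections import Counter


def assign(user_id: str, experiment: str, variants: list[str]) -> str:
    """Pick a variant deterministically, salted by the experiment name."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest[:8], 16) % len(variants)]


users = [f"user-{i}" for i in range(40_000)]

# Count how many users land in each (model, UI) combination.
cells = Counter(
    (
        assign(u, "model_test", ["old_model", "new_model"]),
        assign(u, "ui_test", ["old_ui", "new_ui"]),
    )
    for u in users
)
```

Each of the four combinations ends up with roughly a quarter of users. That balance is what lets you compare new model against old model across both interfaces and read the model's effect on its own. If the two changes interact strongly, you would still want to look at the individual cells.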

Building a culture of experimentation takes more than just tools though. The teams thriving with generative AI share a few traits: they celebrate learning from failures, they ship small changes frequently, and they trust data over opinions.

Effective AI experimentation combines hard data with human judgment. Data tells you what's happening, but you still need intuition to form good hypotheses. The teams that excel at this balance - using data to validate ideas while staying creative in their approach - are the ones building AI features users actually love.

Closing thoughts

The shift from offline to online AI experimentation isn't just a technical change - it's a mindset shift. Instead of trying to perfect your AI in isolation, you're learning directly from users. This approach feels riskier at first, but it's actually the safest path to building AI features people want.

The key takeaways? Start simple, experiment constantly, and let user data guide your decisions. Use tools that make experimentation easy, not painful. And remember: your first version doesn't need to be perfect. It just needs to teach you something.

Want to dive deeper? Check out Statsig's guide to developing AI features or explore how companies like Netflix and Spotify approach AI experimentation. The best way to learn is to start experimenting yourself.

Hope you find this useful!