Ever wonder how some websites seem to know exactly what grabs your attention? It's not magic. They run experiments to optimize user engagement. If you've heard of A/B testing, you're on the right track. But let's take it a step further with multivariate A/B/n testing.

In this blog, we'll dive into how you can use this powerful tool to fine-tune your website's design and content. We'll explore how testing multiple elements at once can uncover the perfect combination that resonates with your audience. So, let's jump in and start optimizing!

Understanding multivariate A/B/n testing for user engagement

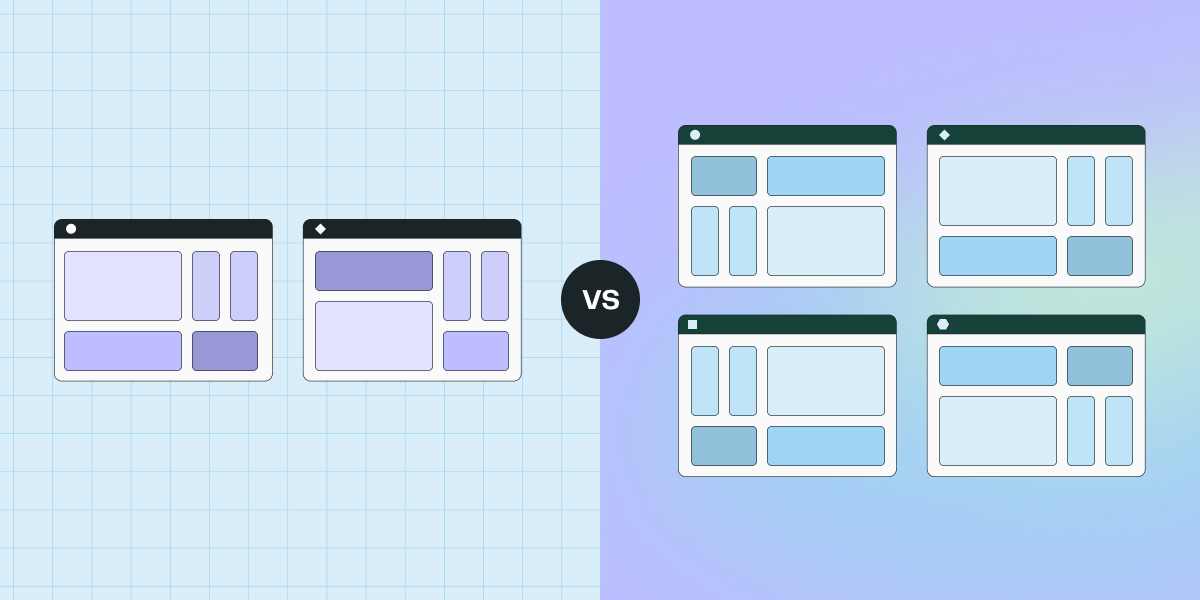

A/B/n testing compares two or more complete versions of a webpage (A, B, and so on up to n variants) to identify which one performs best. Multivariate testing, by contrast, changes several specific elements at once and evaluates each combination of those changes. Both methods are crucial for optimizing user experience through data-driven strategies.

Multivariate testing lets businesses assess the impact of various design elements all at once. By testing different combinations of headlines, images, and call-to-action buttons, you can gain comprehensive insights into user preferences and interactions.

Using multivariate testing means making informed decisions based on solid data, not just assumptions. This approach helps you find the most effective combinations of elements, leading to improved user engagement and higher conversion rates.

A/B testing is already a powerful tool for enhancing digital experiences, as the Harvard Business Review article "The Surprising Power of Online Experiments" illustrates. By continuously testing and refining digital platforms, businesses can meet user needs more effectively, driving growth and customer satisfaction.

Ready to design some effective experiments? Let's explore how to get started.

Designing effective multivariate A/B/n experiments

Identifying the right elements to test is key for impactful multivariate testing. Focus on CTAs, layouts, and content that directly influence user behavior and conversion rates. Structure your tests to evaluate combinations of changes across multiple variables simultaneously.

Ensuring statistical significance is vital. Use adequate sample sizes and audience segmentation for reliable results. Randomization and blocking can help minimize external influences. Remember, multivariate testing generally requires more traffic than a simple A/B test to achieve significance, because visitors are split across every combination: three elements with two variants each already produce eight versions of the page.
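To get a feel for how much traffic "adequate" means, here's a rough sketch of a sample size estimate for comparing two conversion rates. It uses the standard normal-approximation formula for a two-sided two-proportion test. The baseline rate, lift, and number of combinations are made-up values for illustration, and the function name is our own, not part of any testing platform.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, min_detectable_lift, alpha=0.05, power=0.8):
    """Approximate users needed per variant for a two-sided two-proportion z-test.

    min_detectable_lift is relative, e.g. 0.20 means a 20% lift over baseline.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical inputs: 5% baseline conversion, 20% relative lift, 12 combinations.
n_per_variant = sample_size_per_variant(0.05, 0.20)
num_combinations = 12
print(n_per_variant, n_per_variant * num_combinations)
```

Treat the result as a ballpark figure. It does not account for multiple-comparison corrections, which push the requirement higher when many combinations are compared.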

When designing your experiment, clearly define your objectives and develop hypotheses for each element being tested. Select appropriate metrics to measure success, such as click-through rates or conversion rates. Then choose between a full factorial design, which tests every combination of variants, and a fractional (partial) factorial design, which tests only a chosen subset, based on the complexity of the test and the traffic you have available.
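A full factorial design is easy to picture in code: it's every possible combination of the variants you picked. The sketch below enumerates them with Python's `itertools.product`. The element names and variant labels are placeholders for illustration only.

```python
from itertools import product

# Hypothetical elements and variants, chosen only for illustration.
elements = {
    "headline": ["Save time today", "Work smarter"],
    "image": ["team_photo", "product_shot"],
    "cta": ["Start free trial", "Get a demo", "Sign up"],
}

def full_factorial(elements):
    """Return every combination of variants as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]

combinations = full_factorial(elements)
print(len(combinations))  # 2 * 2 * 3 = 12
```

Notice how quickly the count grows. Adding one more element with three variants would take this test from 12 combinations to 36, which is usually the point where a fractional design starts to look attractive.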

Interpreting results involves identifying patterns and verifying statistical significance. Look for combinations of elements that positively impact your key metrics. And stay open to unexpected findings, since they may reveal new optimization opportunities.

Excited to see how your tests perform? Let's dive into analyzing the results.

Analyzing and interpreting multivariate A/B/n test results

Interpreting complex data from multivariate A/B/n tests requires a systematic approach. Segment results by key user characteristics to uncover patterns and preferences. Employ statistical analysis to determine if the differences between variations are significant.
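As one example of what that statistical analysis can look like, here's a minimal sketch of a two-sided two-proportion z-test comparing a control against a single variation. The conversion counts are invented for illustration, and this is just one common approach. Your testing platform may use a different method.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.

    Returns (z, p_value), where z > 0 means variation B converted better.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # pooled rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: 500 of 10,000 users converted on A, 580 of 10,000 on B.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

One caution: when you compare many combinations against the control, some will look significant by chance. A simple, conservative safeguard is the Bonferroni correction, which divides your significance threshold by the number of comparisons.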

Identifying optimal combinations of elements is crucial for boosting user engagement. Analyze the performance of each variation against key metrics like conversion rates and time on site. Look for synergies, where elements perform better together than their individual effects would suggest.
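To make "synergy" concrete, here's a small sketch for a 2x2 test (two headlines, two CTAs). It computes each element's average effect and then the interaction, meaning how much the CTA's lift changes depending on the headline. The conversion rates are made up, and the simple averaging assumes each combination received roughly the same amount of traffic.

```python
# Hypothetical conversion rates for a 2x2 test (headline x CTA), for illustration only.
rates = {
    ("headline_a", "cta_a"): 0.050,
    ("headline_a", "cta_b"): 0.054,
    ("headline_b", "cta_a"): 0.056,
    ("headline_b", "cta_b"): 0.068,
}

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def main_effect(rates, position, low, high):
    """Average rate at the `high` level minus average rate at the `low` level."""
    high_mean = mean(r for combo, r in rates.items() if combo[position] == high)
    low_mean = mean(r for combo, r in rates.items() if combo[position] == low)
    return high_mean - low_mean

headline_effect = main_effect(rates, 0, "headline_a", "headline_b")
cta_effect = main_effect(rates, 1, "cta_a", "cta_b")

# Interaction: the CTA lift under headline B minus the CTA lift under headline A.
cta_lift_with_a = rates[("headline_a", "cta_b")] - rates[("headline_a", "cta_a")]
cta_lift_with_b = rates[("headline_b", "cta_b")] - rates[("headline_b", "cta_a")]
interaction = cta_lift_with_b - cta_lift_with_a

best_combo = max(rates, key=rates.get)
print(best_combo, round(headline_effect, 4), round(cta_effect, 4), round(interaction, 4))
```

A positive interaction here would mean the new CTA helps more when paired with the new headline than with the old one. Before acting on a pattern like this, check that the differences are statistically significant rather than noise.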

Making data-driven decisions based on test outcomes is essential for refining UX. Prioritize changes that show the most significant positive impact on user behavior. Continuously iterate and test to validate improvements and ensure ongoing optimization.

A/B testing is a powerful tool for enhancing user experience. By analyzing multivariate test results, you can uncover valuable insights into user preferences. Applying these findings to your UX design can lead to significant improvements in engagement and conversion rates.

Wondering how to navigate challenges and follow best practices? Let's explore that next.

Best practices and overcoming challenges in multivariate A/B/n testing

Multivariate testing offers valuable insights but comes with increased complexity and resource demands. To tackle these challenges, prioritize tests based on potential impact and use robust platforms like Optimizely or Statsig to streamline the process.

Ensure you have adequate traffic for statistically significant results, and maintain detailed documentation for continuous learning. Randomize user allocation to variants and keep external factors constant during testing to avoid bias.
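If you're curious what randomized allocation can look like under the hood, one common pattern is to hash the user ID together with the experiment name. The sketch below is an illustration of that idea, not how Optimizely or Statsig actually implement it. The function name, experiment name, and variant labels are all hypothetical.

```python
import hashlib

def assign_variant(user_id, experiment_name, variants):
    """Deterministically map a user to one variant via a hash of experiment and user."""
    key = f"{experiment_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)  # spreads users roughly evenly
    return variants[bucket]

# Hypothetical experiment with four combinations.
variants = ["combo_1", "combo_2", "combo_3", "combo_4"]
print(assign_variant("user-123", "homepage_mvt", variants))
```

Hashing gives each user the same variant on every visit without storing the assignment, and including the experiment name keeps allocations in one test from lining up with allocations in another.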

Embrace a culture of continuous testing and iteration to achieve ongoing user experience optimization. By consistently applying insights from multivariate tests, you can align your digital platforms with user preferences and drive growth.

Remember, even small changes can have a substantial impact. For example, Microsoft's experiment with opening Hotmail in a new tab from the MSN home page showed how a minor tweak can produce significant results. Focus on incremental improvements and stay open to unexpected findings that could guide new optimization directions.

By leveraging the power of multivariate A/B/n testing and following best practices, you can unlock smarter campaign results, enhance user satisfaction, and achieve your business objectives. Tools like Statsig make it even easier to implement and analyze these tests effectively.

Closing thoughts

Multivariate A/B/n testing is a game-changer for optimizing user engagement. By testing multiple elements simultaneously, you can discover the perfect combination that resonates with your audience. Whether it's tweaking headlines, images, or call-to-action buttons, this approach helps you make data-driven decisions that boost conversions.

Ready to dive deeper? Check out resources like Optimizely and Statsig for tools and guidance on multivariate testing. Don't hesitate to experiment: the insights you gain could transform your digital strategy.

Hope you found this useful! Happy testing!