Today we’re announcing the launch of Statsig’s new Pulse Stats Engine.

Pulse is Statsig’s experiment analysis tool, and our first Stats Engine helped grow Statsig over the last 11 months. We keep improving it in response to customer feedback. Our new Stats Engine has two major technical updates:

Changes the unit of analysis from a user-day to a user

Uses Welch’s t-test for small sample sizes

These changes have the following benefits for product-based experimentation.

1. Easier interpretability of results.

Units: The units field in our metrics raw data view previously showed the number of user-days we counted, which was a major source of confusion. With the unit of analysis changed to a user, this column now shows the total number of exposed users.

Mean: Results are easier to read. For example, event_count metrics now report the mean as the average number of events per user across the experiment, instead of events per user per day. This works especially well for signup experiments: each user’s value is 1 or 0 regardless of the experiment’s duration, so the mean is the share of exposed users who signed up. This has been one of our top metric requests and no longer requires a custom metric.
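To make the difference concrete, here is a minimal sketch of the two aggregations. The rows, field order, and function names are made up for illustration and are not Statsig’s actual schema or code.

```python
from collections import defaultdict

# Hypothetical exposure log: (user_id, day, event_count).
rows = [
    ("u1", 1, 2), ("u1", 2, 0), ("u1", 3, 1),
    ("u2", 1, 0), ("u2", 2, 0), ("u2", 3, 0),
    ("u3", 1, 4), ("u3", 2, 1), ("u3", 3, 0),
]

def mean_per_user_day(rows):
    """Old-style view: every user-day is one unit."""
    return sum(count for _, _, count in rows) / len(rows)

def mean_per_user(rows):
    """New-style view: sum each user's events first, then average over users."""
    totals = defaultdict(int)
    for user, _, count in rows:
        totals[user] += count
    return sum(totals.values()) / len(totals)

units_user_day = len(rows)                       # number of user-days
units_user = len({user for user, _, _ in rows})  # number of exposed users

print(units_user_day, round(mean_per_user_day(rows), 3))
print(units_user, round(mean_per_user(rows), 3))
```

With the user as the unit, the units count matches the number of exposed users, and the mean reads directly as events per user over the whole experiment.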

2. Trustworthy Confidence Intervals as validated through actual and simulated AA tests.

These changes improve confidence intervals across the board:

For long-term experiments: This change eliminates biases that come from correlation across days. Our previous stats engine assumed that days were independent, which was a safe assumption for most experiments we’ve seen but can produce confidence intervals that are too narrow in some cases (e.g., long-term experiments and holdouts).

For small-scale experiments: With the introduction of Welch’s t-test, we see better confidence intervals for small-scale experiments (sample sizes < 100 exposures).
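For readers who want to see what Welch’s t-test computes, here is a minimal standard-library sketch. It is the textbook formula, not Statsig’s implementation, and the sample values and the function name welch_t are invented for illustration.

```python
import math
import statistics

def welch_t(a, b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    na, nb = len(a), len(b)
    # Squared standard error of each group mean, from unbiased sample variances.
    sa = statistics.variance(a) / na
    sb = statistics.variance(b) / nb
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(sa + sb)
    # Unlike the pooled t-test, the degrees of freedom depend on both variances.
    df = (sa + sb) ** 2 / (sa ** 2 / (na - 1) + sb ** 2 / (nb - 1))
    return t, df

# Hypothetical per-user metric values for two small groups.
control = [1.0, 2.0, 3.0, 4.0, 5.0]
treatment = [2.0, 4.0, 6.0, 8.0, 10.0]

t, df = welch_t(treatment, control)
print(f"t = {t:.4f}, df = {df:.4f}")
```

Because the groups have unequal variances, the degrees of freedom fall below the pooled value of 8, which widens the resulting interval. A confidence interval would use the t-distribution quantile at this df; if SciPy is available, scipy.stats.ttest_ind(treatment, control, equal_var=False) runs the same test.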

The new stats engine has been automatically applied to all new and ongoing experiments (as of March 24th), with data backfilled from the start of each experiment. Concluded experiments will still show results from the old stats engine. Customers may notice a small change in calculated deltas and should expect the estimated confidence intervals to differ. In most cases, confidence intervals will widen (especially for long-term experiments); in other cases they will narrow because of lower metric variability. Across all Statsig customers and experiments, most results will remain unchanged, but a small percentage will lose statistical significance and a smaller fraction will begin to show it.
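The widening for long-running experiments can be illustrated with a small simulation. This is a sketch under made-up assumptions (200 users, 14 days, a persistent per-user baseline plus small daily noise), not a reproduction of our AA tests.

```python
import random
import statistics

random.seed(0)
N_USERS, N_DAYS = 200, 14

# Each simulated user has a persistent baseline plus small daily noise,
# so the same user's days are strongly correlated with each other.
panel = []
for _ in range(N_USERS):
    baseline = random.gauss(5.0, 1.0)
    panel.append([baseline + random.gauss(0.0, 0.1) for _ in range(N_DAYS)])

# User-day analysis: treat every user-day as an independent observation.
user_days = [value for days in panel for value in days]
se_user_day = statistics.stdev(user_days) / len(user_days) ** 0.5

# User analysis: one observation per user (that user's daily average).
user_means = [statistics.fmean(days) for days in panel]
se_user = statistics.stdev(user_means) / len(user_means) ** 0.5

print(f"SE treating user-days as independent: {se_user_day:.4f}")
print(f"SE with one observation per user:     {se_user:.4f}")
```

Both approaches estimate the same mean here, but the user-day standard error is several times smaller because it counts correlated days as if they were fresh evidence. That gap is the source of the overly narrow intervals described above.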

Our online and simulated AA tests consistently show that the new stats engine can reduce false positive rates. However, these improvements come with less flexibility in picking the date range (for precomputed metrics). Our old stats engine precomputed metrics for each day and could instantly aggregate different time slices (which was one reason we had selected the user-day analysis unit). Going forward, Pulse will automatically precompute the full experiment duration (start to end/last date) by default. We will support time slicing as follows:

Flexible end date: You’ll still be able to adjust the end date of your time window and see instant results. The start date will be fixed as the first day of the experiment.

Fixed 7/14/28-day windows: We will provide precomputed 7-, 14-, and 28-day intervals that can be applied starting from any date (e.g., to see the second week’s data or the last 7 days).

Flexible start and end dates: You will still be able to select any start and end date. This is now handled by the recently launched Custom Queries feature, which returns Pulse results for any time window within minutes.
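One way to read the three options above is as a lookup order for a requested window. The sketch below is purely illustrative: the function name, the return labels, and the inclusive day counting are assumptions, not part of Pulse’s API.

```python
from datetime import date

FIXED_WINDOW_DAYS = (7, 14, 28)

def resolve_window(experiment_start: date, start: date, end: date) -> str:
    """Return which (hypothetical) path would serve the requested window."""
    if end < start or start < experiment_start:
        raise ValueError("window must start on or after the experiment start and not end before it starts")
    # Windows anchored at the experiment start only vary the end date.
    if start == experiment_start:
        return "precomputed_flexible_end"
    # Assumption: window length counts both the start and end day.
    length = (end - start).days + 1
    if length in FIXED_WINDOW_DAYS:
        return "precomputed_fixed_window"
    # Anything else falls back to an on-demand Custom Query.
    return "custom_query"

exp_start = date(2022, 3, 1)  # made-up experiment start date
print(resolve_window(exp_start, exp_start, date(2022, 3, 20)))
print(resolve_window(exp_start, date(2022, 3, 8), date(2022, 3, 14)))
print(resolve_window(exp_start, date(2022, 3, 5), date(2022, 3, 9)))
```

The first two requests would be served from precomputed data, while the third (an arbitrary five-day slice) would go through a Custom Query.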

We are still backfilling this data, and not all of the precomputed data will be immediately available.

As we continue to improve our Stats Engine, we’d love to go deeper with you on our research and conclusions. If you have questions about these improvements, reach out to the Statsig team at support@statsig.com or on Slack.

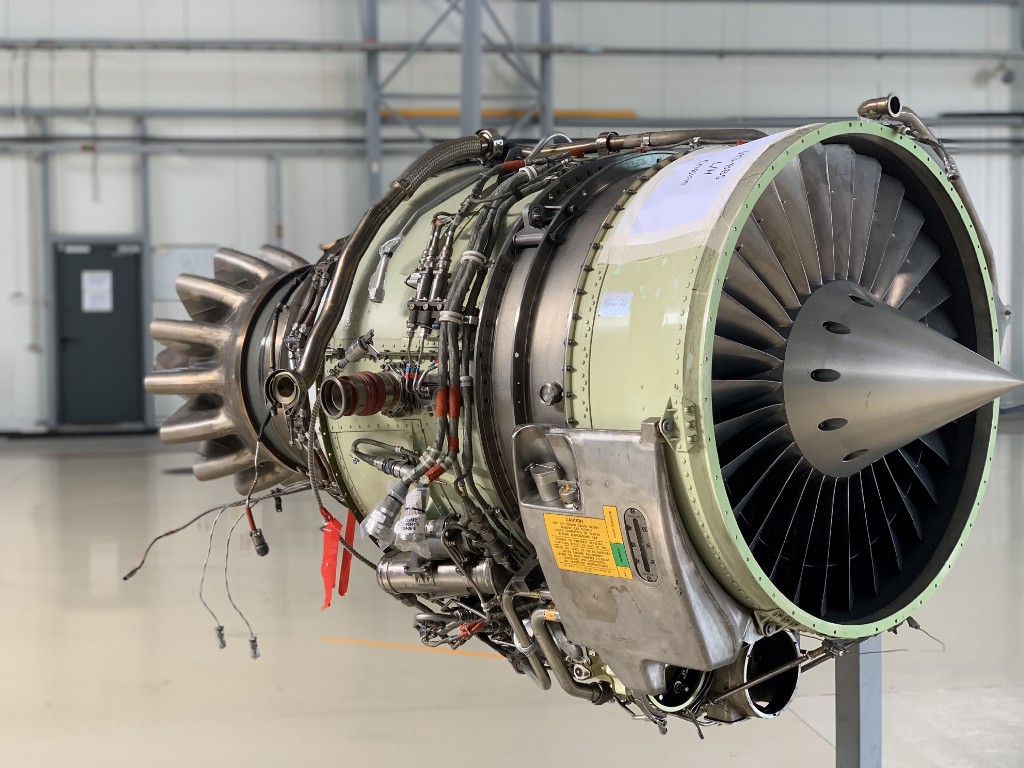

Thanks to Kaspars Eglitis on Unsplash for the jet engine photo!