Inspiration comes at all times. And when it comes to creativity, there are no “business hours” for builders.

If you are a developer, you know this all too well. You are in the zone building things and want to try out a new library or service that solves a problem for you. This can happen during the day or in the middle of the night, and it’s never a convenient time to call a sales team or commit to a year-long service contract. Those decisions break your flow and momentum.

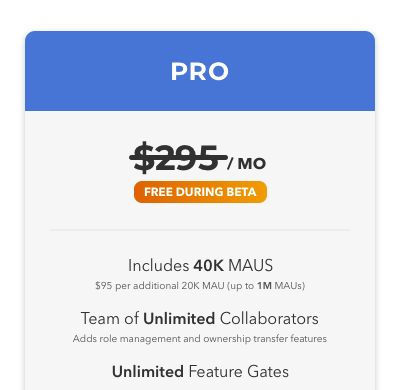

We believe in making this process totally frictionless. That’s why we decided to make our products self-serve with transparent usage-based pricing.

If you are a developer and want to try out the product, you can sign up for a free-tier account, integrate our open-source SDK, and be on your way in a few minutes. No long-term contracts, no trials, no credit cards, no “put in your email address so we can have someone contact you”, and definitely no 1-800 numbers. You won’t have to worry about paying anything until you hit usage thresholds, at which point we’ll gently ask you to move to a paid tier.

This is based on our firm belief that we win only when we help you win.

The reality is that we do incur expenses for every free account we offer: cloud infrastructure, deployment, monitoring, and so on. Still, we believe in a fair pricing model that correlates strongly with the value we provide for your products. Hence, our paid-tier pricing starts low and scales with your success, all the while subsidizing small, emerging developers, whom we can all relate to.

There’s one other thought that went into this process. While our costs grow with the number of events you log, it sucks if you have to constantly worry about which events to log and how many feature gate checks to make. So we removed that from the equation: we want you to log as many events, check as many feature gates, and pull as many dynamic configurations as you need to. Hence, we tied our pricing to your monthly active users (MAUs), which is typically something you care about growing. As we said before, we win when you win.