When I joined Facebook, one of the things that surprised me was how much autonomy engineers had in building new products and features, and how this enabled them to move really fast.

I was curious how decisions about product features were made. In my previous experience, most of the time went into conference rooms, where experienced engineers and PMs debated the details, made decisions, and wrote design docs, all before the first line of code was written.

Product development at Facebook often started with the germ of an idea that was quickly translated into code.

If people wrote code right away, how did they make sure they were building features users actually wanted?

Facebook’s internal developer infrastructure has a set of tools that enables and encourages engineers to always stay in the Build->Measure->Learn->Build loop. Three of these are used by almost everyone on a daily basis:

1. Gatekeeper: Allows developers to build features visible only to a targeted set of users. For example, these could be dogfooders and testers until a feature is ready for public consumption. Engineers then open it up to a small user base to validate that everything is working well before launching to the world.

Gatekeeper also comes in handy when you have release dependencies, such as client and server code that each ship on their own release cadence. You don’t want to hold either one back because the pieces of a feature land at different times. Instead, you keep the feature turned off until you have verified that the server and client versions being rolled out are compatible, and then you turn it on.

Another important use case is localization. Strings sometimes take longer to localize, so Gatekeeper can initially deploy a feature to just English users until localization is complete.

The biggest peace of mind comes from knowing that if something goes really wrong at any point, you can easily turn features off in real time.
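To make the gating ideas above concrete, here is a minimal sketch of a feature gate in Python. It is not Gatekeeper’s actual API, which this post does not describe. The `User` and `FeatureGate` classes and all of their fields are hypothetical names chosen for illustration. The sketch assumes a gate combines a kill switch, an employees-only rule, a locale rule, a minimum client version, and a percentage rollout based on a stable hash of the user ID.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class User:
    # Hypothetical user record; field names are assumptions for this sketch.
    user_id: str
    locale: str = "en_US"
    is_employee: bool = False
    client_version: tuple = (1, 0, 0)


@dataclass
class FeatureGate:
    # Hypothetical gate definition, not a real Gatekeeper schema.
    name: str
    enabled: bool = True                      # kill switch: False turns the feature off for everyone
    employees_only: bool = False              # dogfooding stage
    allowed_locales: frozenset = frozenset()  # empty means every locale passes
    min_client_version: tuple = (0, 0, 0)     # hold back clients that are too old
    rollout_percent: float = 100.0            # share of remaining users who pass

    def _bucket(self, user_id: str) -> float:
        # Hash gate name + user ID so each user lands in a stable bucket in [0, 100).
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).hexdigest()
        return int(digest[:8], 16) % 10000 / 100.0

    def check(self, user: User) -> bool:
        if not self.enabled:
            return False
        if self.employees_only and not user.is_employee:
            return False
        if self.allowed_locales and user.locale not in self.allowed_locales:
            return False
        if user.client_version < self.min_client_version:
            return False
        return self._bucket(user.user_id) < self.rollout_percent


# Example: an English-only feature that needs client 2.1.0 or newer.
gate = FeatureGate(
    name="new_composer",
    allowed_locales=frozenset({"en_US", "en_GB"}),
    min_client_version=(2, 1, 0),
)
alice = User("alice", locale="en_US", client_version=(2, 3, 0))
bob = User("bob", locale="fr_FR", client_version=(2, 3, 0))

if gate.check(alice):
    pass  # render the new feature for alice
```

Because the bucket depends only on the gate name and user ID, a user keeps the same answer as `rollout_percent` grows, and setting `enabled = False` acts as the real-time off switch.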

2. Quick Experiments (A/B): This tool takes the debates out of conference rooms and puts hypotheses to the test in production. Instead of endless discussions about the “perfect” design, the amount of flexibility to provide, and the corner cases to cover, engineers quickly code up different variants and put them in front of users. How users interact with the product gives rich insights into what works and what doesn’t.

Sometimes it’s also a good idea to validate whether we should build something at all. And in some cases, an MVP (Minimum Viable Product) might be trivial to build. In either scenario, Quick Experiments becomes an indispensable tool for quickly and accurately validating product hypotheses.

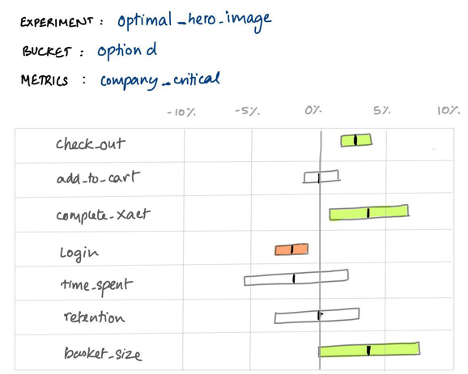
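As a rough illustration of the experiment workflow described above, the sketch below assigns users to variants deterministically and then compares conversion rates with a standard two-proportion z-test. It is an assumption-laden example, not how Quick Experiments is implemented. The function names `assign_variant` and `two_proportion_z_test` are hypothetical, and the counts in the usage lines are made-up numbers for demonstration only.

```python
import hashlib
import math


def assign_variant(experiment: str, user_id: str, variants=("control", "test")) -> str:
    # Hash experiment name + user ID so a user always sees the same variant.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest[:8], 16) % len(variants)]


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of group A (control) and group B (test).

    Returns (lift, z, p_value), where lift is the absolute difference in
    conversion rate and p_value is two-sided under a normal approximation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Illustrative numbers only: 100 of 1000 control users converted, 150 of 1000 test users.
variant = assign_variant("checkout_button_color", "user_42")
lift, z, p_value = two_proportion_z_test(100, 1000, 150, 1000)
print(f"variant={variant} lift={lift:.3f} z={z:.2f} p={p_value:.4f}")
```

The normal approximation is reasonable only when each group has a fair number of conversions and non-conversions. For small samples, an exact test would be a safer choice.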

3. Deltoid: When you have many engineers, each building new features and running simultaneous experiments, it’s important to know the causal impact on overall product health, such as user engagement. If there’s an influx of new transactions today, it would be useful to understand which feature drove that. Similarly, it’s important for engineers to be confident that their features aren’t hurting company-critical metrics through unanticipated side effects.

Deltoid gives a visual map of how all company-critical metrics are affected by every new feature and each new client version, and it even provides breakdowns by macro trends like mobile OS adoption.

Without the cause-and-effect measures provided by Deltoid’s exhaustive use of A/B testing, debugging problems and identifying root causes is exceptionally time-consuming, imprecise, and sometimes impossible.
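One way to picture the kind of per-feature, per-metric view described above is a small scorecard. The sketch below is not Deltoid’s implementation. The `metric_delta` and `scorecard` functions, the feature and metric names, and the sample data are all hypothetical. It assumes each feature ran as an experiment with per-user metric samples for control and test, and it uses a normal-approximation 95% confidence interval on the difference in means.

```python
import math
from statistics import mean, variance


def metric_delta(control, test, z=1.96):
    """Difference in means (test minus control) with an approximate 95% CI."""
    delta = mean(test) - mean(control)
    se = math.sqrt(variance(control) / len(control) + variance(test) / len(test))
    return delta, delta - z * se, delta + z * se


def scorecard(experiments):
    """Build rows of (feature, metric, delta, verdict).

    experiments maps feature -> {metric: (control_samples, test_samples)}.
    A metric is "up" or "down" only when the whole interval excludes zero.
    """
    rows = []
    for feature, metrics in experiments.items():
        for metric, (control, test) in metrics.items():
            delta, low, high = metric_delta(control, test)
            if low > 0:
                verdict = "up"
            elif high < 0:
                verdict = "down"
            else:
                verdict = "neutral"
            rows.append((feature, metric, round(delta, 3), verdict))
    return rows


# Made-up samples: sessions per user rise, messages sent per user stay flat.
baseline = [1, 2, 3, 4, 5] * 20
data = {
    "new_feed": {
        "sessions": (baseline, [x + 1 for x in baseline]),
        "messages_sent": (baseline, list(baseline)),
    }
}
for row in scorecard(data):
    print(row)
```

Checking every feature against every critical metric is what surfaces unanticipated side effects: a feature built to raise one metric shows up as "down" on another if it quietly hurts it.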

Having seen firsthand the power of these tools and how they enable a company like Facebook to move fast, I am convinced that we can bring that power to everyone. Big companies shouldn’t be the only ones with such sophisticated tools. These tools should be liberated and made accessible to developers, data scientists, and product managers.

This is my inspiration for Statsig, and that’s the story of how we came to be. We are just getting started, so feel free to follow along at www.statsig.com.