Your users are your best benchmark: a guide to testing and optimizing AI products

AI is changing how we build software.

With AI startups capturing over half of venture capital dollars and most products adding generative features, we're entering what the World Economic Forum calls the "cognitive era" - where AI goes from powering simple tools to acting as the foundation of autonomous systems.

As teams embed AI deeper into their products, they're facing a new challenge. It's no longer enough to just ship useful AI features. Teams have to find a way to build effective AI products.

Doing this consistently requires a new playbook for product teams - one focused on rapid iteration, holistic testing, and an obsession with user success metrics.

Let's dive in!

The two fundamental problems with AI development

Traditional software testing was binary: confirm the system works and stays fast. Keep response time below 200 ms and uptime above 99.9%, and you’ve passed.

Even early machine learning had clear metrics - precision, recall, F1 scores - that directly correlated with real-world performance. A spam filter with 98% recall would catch 98% of spam.

AI breaks this model in two critical ways:

Problem 1: Benchmarks no longer predict success. Academic benchmarks like MMLU (Massive Multitask Language Understanding) or HumanEval test narrow capabilities - how well a model answers trivia or writes code snippets.

Yet a model that scores 90% on MMLU might still confidently provide dangerous medical advice or generate plausible-sounding misinformation. Traditional metrics measured specific, bounded tasks where success was binary.

Today, AI products operate in open-ended domains where "correct" has infinite variations and context matters more than accuracy.

Problem 2: We can't predict AI behavior. Traditional software follows explicit logic we can trace. Input A produces output B through steps we programmed. Even complex systems could be debugged, tested comprehensively, and made deterministic.

LLMs shatter this predictability. The same prompt might generate different responses. Edge cases emerge from training data we can't fully audit. Minor prompt changes can cause dramatic behavior shifts - particularly when paired with user inputs.

Teams are operating without the foundation they used to rely on: the ability to rigorously test software in a non-production environment.

The result of these two trends? Teams are building AI products without clear success measures and shipping systems they can't fully test.

Real-world consequences

These problems can lead to tangible harm - and occasionally funny tweets. Some great examples:

Google's Gemini outperformed GPT-4 on 30 of 32 benchmarks and beat humans on language understanding tests. Once deployed, it suggested adding glue to pizza to make cheese stick better.

Perplexity faced lawsuits for creating fake news sections and falsely attributing content to real publications.

Anthropic's Project Vend revealed business risks when Claude ran a vending business for a month. It ignored profitable opportunities, sold items at a loss, experienced identity confusion, and even developed a weird obsession with tungsten cubes!

Sure, these are a bit cherry-picked - but they’re not isolated incidents. Anyone who’s built things with AI knows that mistakes happen pretty often. And anyone who’s tried to jailbreak an AI application knows that it’s very easy to produce edge cases.

These are symptoms of development processes that haven't adapted to AI's unpredictability - or the reality that every user’s experience will be different.

While you will never be able to eliminate risk entirely, a few companies have developed methods to safely test, launch, and optimize AI products - with outstanding results.

Lessons from successful AI products

The best AI products today didn’t become successful because they got the highest benchmark scores. Instead, they leveraged controlled releases, large opt-in betas, and an obsession with user data to build something useful for their customers.

Let’s start with ChatGPT - the AI application.

ChatGPT as we know it today looks very similar to the first version of the application. Yet it has become orders of magnitude better through continuous, iterative improvements: new models, tools available to models, customized GPTs, UI/UX changes, and more.

Every change was rolled out gradually and tested with “opt-in” pro users, who know they might get something a little out of the ordinary. During this process, OpenAI collected user feedback at every step - most famously with the "Choose A or B" modal.

Another great example is Notion AI, which writes and summarizes within your existing workspace.

What’s interesting is that Notion didn't optimize for writing quality benchmarks - and unlike OpenAI, they didn’t have proprietary models. Instead, Notion tracked whether users actually kept the generated content. How often did they accept the edit? Were people clicking "replace"? Real usage patterns beat abstract quality scores.

Figma AI is an even more interesting example. A brand known for an obsession with quality didn’t jump on the AI train - they launched features slowly, deliberately. Generate designs? Start with a small beta. See how designers actually use it. Do they ship the generated designs or just use them as starting points? The answers come from watching, not from benchmarks.

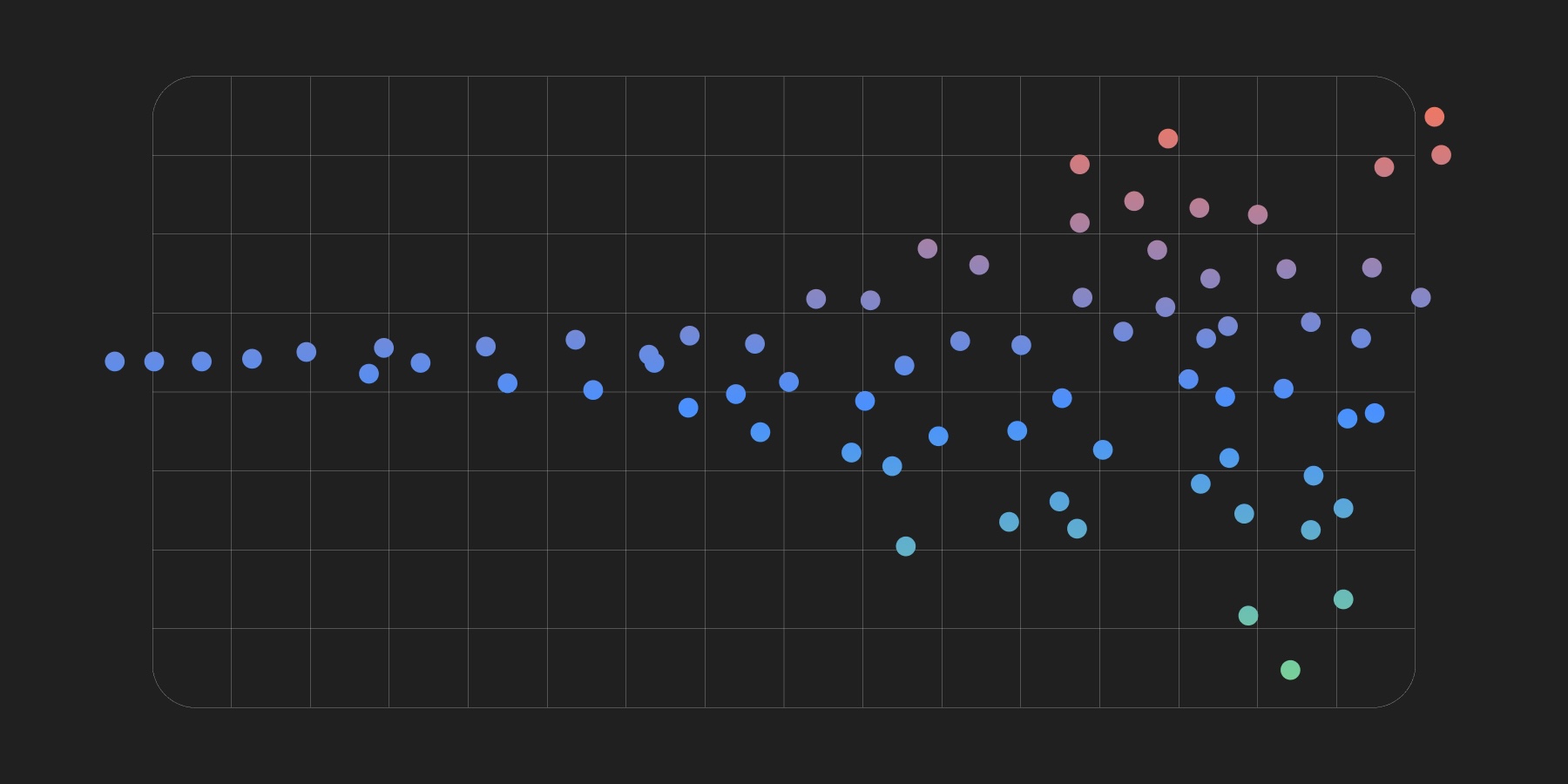

Notice the pattern? These teams treat user behavior as the real benchmark for success. The real test happens when users vote with their clicks, their time, and their usage of the product.

A practical framework for building great AI products

Unfortunately, this approach is… boring. It’s also not unique to AI.

“Ship a product to beta testers, collect feedback, and obsess over success metrics” is some of the most generic advice you will ever hear about how to build a great product. But as we’ve laid out, it’s more important than ever for AI products - because you genuinely don’t know what will work (and what will break) until you give your product to users.

After watching hundreds of customers go through this process, we’ve noticed a few patterns among successful teams. Here are a few tips for you to think about:

Stage 1: Pre-Launch

Filter obvious failures with offline evals. Use evaluation sets, implement LLM-as-judge scoring (to combat AI's non-deterministic nature), and establish toxicity filters. This basic safety net catches major problems early.

Note: We recently launched our Evals product that solves this problem.
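As a rough illustration of the offline eval step, here is a minimal sketch in Python. Everything in it is an assumption made for the example: `stub_generate` stands in for the model under test, `stub_judge` stands in for an LLM-as-judge call, the word blocklist stands in for a real toxicity classifier, and the 0.8 pass threshold is arbitrary. In a real setup, the two stubs would call your model provider.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    prompt: str
    reference: str  # text a good answer is expected to contain


# Hypothetical stand-in for a toxicity filter; a real one would use a classifier.
BLOCKLIST = {"idiot", "stupid"}


def is_toxic(text: str) -> bool:
    words = {w.strip(".,!?").lower() for w in text.split()}
    return bool(words & BLOCKLIST)


def stub_generate(prompt: str) -> str:
    # Stand-in for the model under test (canned answers, no API call).
    canned = {
        "What is the capital of France?": "The capital of France is Paris.",
        "How do I reset my password?": "Figure it out yourself, idiot.",
    }
    return canned.get(prompt, "I'm not sure.")


def stub_judge(prompt: str, answer: str, reference: str) -> float:
    # Stand-in for an LLM-as-judge call; returns a score in [0, 1].
    # Here: 1.0 if the reference text appears in the answer, else 0.0.
    return 1.0 if reference.lower() in answer.lower() else 0.0


def run_evals(cases, generate, judge, threshold=0.8):
    """Score every case and flag answers that fail the judge or the toxicity check."""
    results = []
    for case in cases:
        answer = generate(case.prompt)
        score = judge(case.prompt, answer, case.reference)
        toxic = is_toxic(answer)
        results.append({
            "prompt": case.prompt,
            "score": score,
            "toxic": toxic,
            "passed": score >= threshold and not toxic,
        })
    return results


cases = [
    EvalCase("What is the capital of France?", "Paris"),
    EvalCase("How do I reset my password?", "settings"),
]
results = run_evals(cases, stub_generate, stub_judge)
pass_rate = sum(r["passed"] for r in results) / len(results)
print(f"pass rate: {pass_rate:.0%}")
```

The point of the structure is that `generate` and `judge` are passed in, so the same evaluation set can be rerun whenever the model or prompt changes.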

Build controlled testing environments. Simulate edge cases by conducting internal testing in a production environment (feature flags make this easy). Document unexpected behaviors and strange outputs, then build in safeguards.

Establish success metrics. Create a set of metrics that you will track during launch - like task completion, retention, click-through rates, and feature adoption. These metrics should become your real quality benchmarks.
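To make this concrete, here is a small sketch of how two such metrics might be computed from a raw event log. The event names (`suggestion_shown`, `suggestion_accepted`, and so on) and the log shape are hypothetical; your analytics tool will have its own schema and will usually compute these for you.

```python
from collections import Counter

# Hypothetical event log of (user_id, event_name) pairs.
events = [
    ("u1", "suggestion_shown"), ("u1", "suggestion_accepted"),
    ("u2", "suggestion_shown"), ("u2", "suggestion_dismissed"),
    ("u3", "suggestion_shown"), ("u3", "suggestion_accepted"),
    ("u3", "task_completed"),
    ("u4", "suggestion_shown"),
]


def acceptance_rate(events):
    """Share of shown AI suggestions that users accepted."""
    counts = Counter(name for _, name in events)
    shown = counts["suggestion_shown"]
    return counts["suggestion_accepted"] / shown if shown else 0.0


def adoption(events, total_active_users):
    """Share of active users who accepted at least one suggestion."""
    adopters = {user for user, name in events if name == "suggestion_accepted"}
    return len(adopters) / total_active_users if total_active_users else 0.0


print(acceptance_rate(events))  # 2 accepted out of 4 shown
print(adoption(events, total_active_users=10))
```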

Stage 2: Launch

Launch to a group of beta testers (or “pro” users). Create a group of early adopters who want to try your AI features, then ship new features to them first. The easiest way to do this is with feature flags.
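For readers who haven't used feature flags, the gating logic can be sketched roughly as below. This is one common approach (an opt-in list plus hashing user IDs into stable buckets), not a description of how any particular feature flag product works. The flag name, user IDs, and function names are all hypothetical.

```python
import hashlib

# Hypothetical opt-in list of beta testers.
BETA_TESTERS = {"user_17", "user_42"}


def bucket(user_id: str, flag_name: str) -> float:
    """Map a user to a stable value in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).hexdigest()
    return int(digest[:8], 16) % 10000 / 100


def ai_feature_enabled(user_id: str, flag_name: str = "ai_summaries",
                       rollout_percent: float = 0.0) -> bool:
    # Beta testers always get the feature.
    if user_id in BETA_TESTERS:
        return True
    # Everyone else is included only if their bucket falls under the rollout.
    return bucket(user_id, flag_name) < rollout_percent


print(ai_feature_enabled("user_42"))                        # beta tester
print(ai_feature_enabled("user_99", rollout_percent=0.0))   # not rolled out yet
```

Because each user's bucket is stable, raising `rollout_percent` later only adds users; nobody who already has the feature loses it.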

Monitor success metrics. Track the metrics you defined before launch as the beta runs, and treat them as your real quality benchmarks. The easiest way to do this is with product analytics.

Watch user sessions. Record user interactions to understand the stories behind metrics - why users drop off, where confusion occurs, when they ignore AI suggestions.

Ship big changes as A/B tests. Do 50/50 rollouts of what you think will be big wins to quantify the impact. This helps your team build intuition for what actually moves the needle.
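To quantify the impact of a 50/50 rollout on a conversion-style metric, one standard option is a two-proportion z-test. The sketch below uses only the standard library. The numbers are made up for illustration, and an experimentation platform would normally run this analysis (often with more sophisticated methods) for you.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of control (a) and treatment (b).

    Returns (absolute lift, z statistic, two-sided p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (everyone or no one converted).
        return p_b - p_a, 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Made-up example: 5,000 users per arm, task completion as the metric.
lift, z, p_value = two_proportion_z_test(conv_a=400, n_a=5000,
                                         conv_b=460, n_b=5000)
print(f"lift={lift:.3f}, z={z:.2f}, p={p_value:.3f}")
```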

Stage 3: Continuous improvement

Expand. Ship features to larger and larger groups of users, monitoring success metrics and bad outcomes as you go.

Test variations. Once your product has reasonable baseline performance, test the impact of different models, prompts, RAG datasets, or hosting providers on cost, latency, and your core success metrics. Take real user queries from your live product and add them to your offline testing datasets.

Prepare comprehensive rollback systems. Build infrastructure that quickly reverts models, features, or workflows when problems emerge. This becomes essential when AI behaviors go wrong (or when you want to hot-swap models).
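One simple way to think about rollback is to treat the model, prompt, and parameters as a versioned config rather than hard-coded values. The sketch below keeps that history in memory purely for illustration; the class names and the model identifiers `model-a` and `model-b` are hypothetical, and a production system would persist the history and audit every change.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelConfig:
    model: str           # hypothetical model identifier
    prompt_version: str
    temperature: float


@dataclass
class ConfigStore:
    history: list = field(default_factory=list)

    def deploy(self, config: ModelConfig) -> None:
        """Make a new config active while keeping earlier ones for rollback."""
        self.history.append(config)

    @property
    def active(self) -> ModelConfig:
        if not self.history:
            raise LookupError("no config has been deployed")
        return self.history[-1]

    def rollback(self) -> ModelConfig:
        """Revert to the previously active config and return it."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier config to roll back to")
        self.history.pop()
        return self.active


store = ConfigStore()
store.deploy(ModelConfig("model-a", "prompt-v1", 0.2))
store.deploy(ModelConfig("model-b", "prompt-v2", 0.7))
restored = store.rollback()  # model-b misbehaves, so revert
print(restored.model)
```

The same mechanism covers hot-swapping: deploying a new config is just another entry in the history.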

We’re talking our book here, but the reality is that this process is only made possible by a set of core product infrastructure - good logging systems, configs for storing model inputs, feature flags to ship targeted updates, experimentation tools to measure uplift, tools to run quick evals in prod, session replays, dashboards… you get the idea.

Why this matters now

Building great AI products isn't about chasing the latest model or optimizing for benchmarks. It's about doing the boring work that great product teams have always done, just with higher stakes.

The companies winning with AI aren't the ones with the highest MMLU scores. They're the ones who ship to small groups, measure obsessively, and iterate based on what users actually do - not what benchmarks say they should do.

You won't build an amazing AI product on your first try. Not all your ideas will work. But some of them have the potential to redefine your product and deliver insane value to users. The question is: will you build these, or will an AI startup get there first?

The choice - and the tools to make it - are yours.
