How can you ensure your insights are reliable and not just random chance?

This is where the concept of statistical significance comes into play, helping you make confident choices based on solid data.

Statistical significance is a mathematical method that measures the reliability of your analytical results. It helps you determine if the relationships or patterns you observe in your data are genuine or merely coincidental.

By testing for statistical significance, you can distinguish meaningful signals from random noise, ensuring your data-backed decisions are built on a strong foundation.

Understanding statistical significance

Statistical significance is a crucial concept in data analysis that helps you assess the reliability of your findings. A result is statistically significant when it would be unlikely to occur if there were no real effect or relationship, that is, if random chance alone were at work. In other words, statistical significance helps you determine if the patterns or differences you see in your data are meaningful and not just flukes.

When you're analyzing data, you're often looking for patterns, trends, or relationships between variables. However, it's important to recognize that some of these observations could be due to random variation rather than a genuine effect. This is where testing for statistical significance comes in. By calculating the probability that your results could have occurred by chance alone, you can gauge the reliability of your findings.

Statistical significance plays a vital role in helping businesses make confident, data-driven decisions. By ensuring that the insights you glean from your data are statistically significant, you can:

Avoid making decisions based on false positives or random noise

Identify genuine patterns and relationships that can inform strategic choices

Allocate resources and investments towards initiatives with proven impact

Minimize the risk of costly mistakes or missed opportunities

When you're testing for statistical significance, you're essentially asking, "If there were no real effect or relationship, how likely is it that we would have observed results at least this extreme by chance?" The lower this probability (known as the p-value), the stronger the evidence that your findings reflect something real rather than random variation.
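
One way to make this question concrete is a permutation test: shuffle the group labels many times and count how often chance alone produces a difference at least as large as the one observed. The sketch below is only an illustration; the function name `permutation_p_value` and the session-length values are made up for the example.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Estimates how often a difference at least as large as the observed one
    appears when group labels are shuffled, i.e. when there is no real effect.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            hits += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (hits + 1) / (n_permutations + 1)


# Hypothetical session lengths in minutes (illustrative values only).
control = [12.1, 9.8, 11.4, 10.2, 13.0, 9.5, 10.9, 11.7]
variant = [13.4, 12.9, 11.8, 14.1, 12.2, 13.7, 12.5, 13.1]
p_value = permutation_p_value(control, variant)
print(f"p-value: {p_value:.4f}")
```

A small p-value here means that very few random relabelings reproduce a gap as large as the observed one.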

Formulating hypotheses and choosing significance levels

Hypotheses are essential for statistical testing. The null hypothesis assumes no difference or effect, while the alternative hypothesis states that a difference or effect exists. For example, when testing if a new feature increases user engagement, the null hypothesis would be that engagement remains unchanged, and the alternative would be that engagement increases.

Significance levels, denoted by α, represent the probability of rejecting a true null hypothesis (Type I error). Common choices are 0.01 and 0.05, meaning a 1% or 5% chance of incorrectly rejecting the null. A lower α reduces false positives but, for a fixed sample size, makes it more likely that you miss a true effect (Type II error).
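
To see what α means in practice, a small simulation can help. The sketch below repeatedly draws data for which the null hypothesis is true and counts how often a test rejects it anyway. It assumes a one-sample z-test with a known standard deviation, and the helper names are invented for this illustration.

```python
import random
from statistics import NormalDist


def z_test_p_value(sample, mu0, sigma):
    """Two-sided one-sample z-test p-value (population sigma assumed known)."""
    sample_mean = sum(sample) / len(sample)
    z = (sample_mean - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(alpha, n_experiments=2_000, n=50, seed=1):
    """Share of experiments that reject a null hypothesis that is actually true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        # Data are drawn with mean 0, so the null hypothesis (mean == 0) is true.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p_value(sample, mu0=0.0, sigma=1.0) <= alpha:
            rejections += 1
    return rejections / n_experiments


for alpha in (0.05, 0.01):
    rate = false_positive_rate(alpha)
    print(f"alpha={alpha}: simulated false positive rate = {rate:.3f}")
```

The simulated rates should land close to the chosen α, which is what "a 5% chance of incorrectly rejecting the null" describes.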

Choosing the right significance level depends on the consequences of Type I and Type II errors. If false positives are more costly than false negatives, a lower α is appropriate. Sample size also matters; larger samples can detect smaller effects at the same α.

To test statistical significance effectively:

Clearly define your null and alternative hypotheses based on the question you're investigating

Select an α that balances the risks of Type I and Type II errors for your specific context

Ensure your sample size is adequate to detect meaningful differences at your chosen α

Multiple testing correction is crucial when testing many hypotheses simultaneously. The Bonferroni correction controls the family-wise error rate (FWER), the probability of at least one false positive across all tests, while the Benjamini-Hochberg procedure controls the false discovery rate (FDR), the expected share of false positives among the results declared significant. Without adjustment, the chance of at least one false positive increases rapidly with the number of tests.
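
As a rough sketch of how these two corrections work, the functions below take a list of p-values and return which hypotheses would be rejected. The p-values are hypothetical, and the last helper assumes the tests are independent.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0_i when p_i <= alpha / m; controls the family-wise error rate."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Step-up procedure; controls the false discovery rate at level alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= (k / m) * alpha.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            cutoff_rank = rank
    # Reject every hypothesis ranked at or below the cutoff.
    reject = [False] * m
    for idx in order[:cutoff_rank]:
        reject[idx] = True
    return reject


def chance_of_any_false_positive(m, alpha=0.05):
    """P(at least one false positive) across m independent tests of true nulls."""
    return 1 - (1 - alpha) ** m


p_values = [0.001, 0.008, 0.012, 0.041, 0.20]  # hypothetical p-values
print("Bonferroni:        ", bonferroni(p_values))
print("Benjamini-Hochberg:", benjamini_hochberg(p_values))
print("Uncorrected risk with 20 tests:", round(chance_of_any_false_positive(20), 3))
```

On this input Bonferroni rejects fewer hypotheses than Benjamini-Hochberg, which reflects the stricter guarantee it provides.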

By carefully formulating hypotheses and selecting appropriate significance levels, you can draw reliable conclusions from your data. Understanding how to test statistical significance empowers you to make data-driven decisions with confidence.

Collecting and analyzing data

Gathering high-quality data is crucial for accurate statistical analysis. Data collection methods include surveys, experiments, observations, and secondary data sources. Ensure data is representative of the population and minimize bias.

Statistical tests help determine whether observed differences are statistically significant, and different tests suit different situations. T-tests compare means between two groups, while ANOVA compares means across three or more groups. Chi-square tests assess relationships between categorical variables, and Z-tests compare a sample mean to a population mean when the population standard deviation is known or the sample is large.
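
As one example of matching a test to the data, here is a minimal sketch of a chi-square test of independence for two categorical variables arranged in a 2x2 table. It uses only the standard library and applies no continuity correction; the counts and the plan/churn framing are hypothetical.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts.

    Returns (statistic, p_value). No continuity correction is applied.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(chi2 > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows are plan type, columns are (churned, retained).
table = [[30, 170], [55, 145]]
stat, p = chi_square_2x2(table)
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```

In practice a statistics library would usually handle larger tables and small expected counts; this version only covers the 2x2 case.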

When testing statistical significance, it's essential to select the appropriate test based on your data and research question. Consider factors such as data type, distribution, and independence of observations. Clearly define your null and alternative hypotheses before conducting the analysis.

Sample size directly impacts the power of a statistical test, the probability of detecting an effect that really exists. Larger samples increase the likelihood of detecting real differences. Determine the appropriate sample size based on the desired significance level, the desired power, and the smallest effect size you want to detect. This determination is often called a “power analysis”.
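
A simple power analysis for comparing two conversion rates might look like the sketch below. It uses the common normal-approximation formula for a two-sided test with equal group sizes; the baseline and expected rates are hypothetical inputs, and 80% power is an assumed default.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline, p_expected, alpha=0.05, power=0.80):
    """Approximate users needed per group to detect p_baseline vs p_expected.

    Normal approximation for a two-sided, two-proportion test with equal
    group sizes.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    variance = p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
    effect = p_expected - p_baseline
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical scenario: 10% baseline conversion, hoping to detect a lift to 12%.
print(sample_size_per_group(0.10, 0.12))
# Halving the detectable lift roughly quadruples the required sample.
print(sample_size_per_group(0.10, 0.11))
```

The required sample grows quickly as the effect you want to detect shrinks, which is why the minimum effect of interest should be fixed before the test starts.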

Interpreting results requires understanding the p-value and significance level. A p-value below the chosen significance level (e.g., 0.05) indicates a statistically significant result. However, statistical significance doesn't always imply practical significance; consider the magnitude of the effect and its real-world implications.
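
The gap between statistical and practical significance is easiest to see with a very large sample. The sketch below runs a two-proportion z-test and also reports a confidence interval for the absolute lift. The experiment numbers and the `min_worthwhile_lift` threshold are assumptions made up for this illustration.

```python
import math
from statistics import NormalDist


def compare_conversion_rates(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-proportion z-test plus a confidence interval for the absolute lift."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    lift = p_b - p_a
    # Pooled standard error for the test (assumes H0: equal rates).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_test = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    p_value = 2 * (1 - NormalDist().cdf(abs(lift / se_test)))
    # Unpooled standard error for the interval around the observed lift.
    se_ci = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    margin = NormalDist().inv_cdf(1 - alpha / 2) * se_ci
    return {"lift": lift, "p_value": p_value, "ci": (lift - margin, lift + margin)}


# Hypothetical experiment: one million users per group, 10.0% vs 10.1% conversion.
result = compare_conversion_rates(100_000, 1_000_000, 101_000, 1_000_000)
min_worthwhile_lift = 0.005  # assumed business threshold: 0.5 percentage points

print(f"lift = {result['lift']:.4f}, p = {result['p_value']:.4f}")
print("statistically significant:", result["p_value"] <= 0.05)
print("clears the practical threshold:", result["ci"][0] >= min_worthwhile_lift)
```

With these inputs the difference passes the significance test, yet the whole confidence interval sits well below the assumed threshold, so the effect may not justify acting on.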

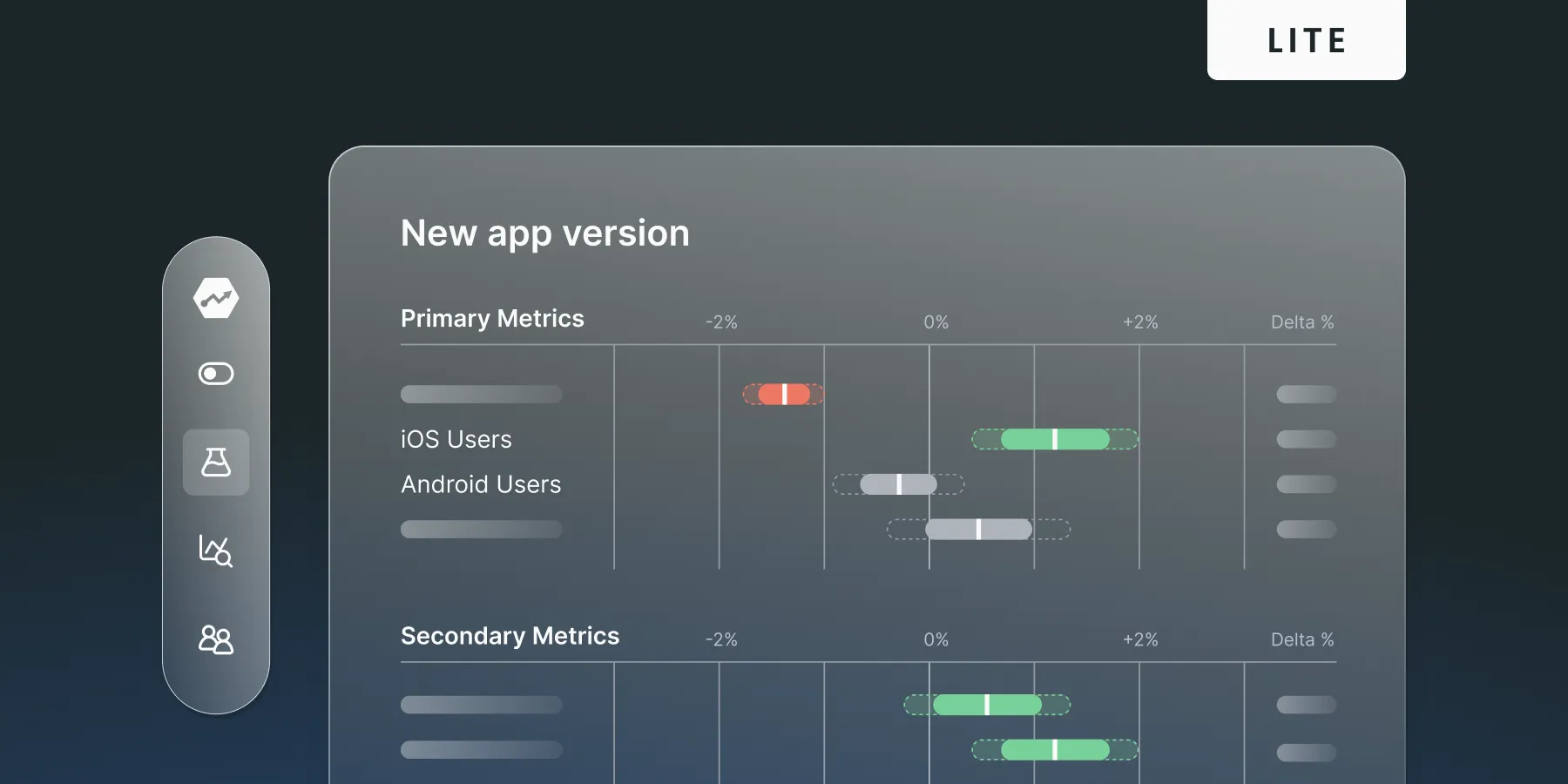

Data visualization is a powerful tool for communicating statistical findings. Use graphs, charts, and tables to present results clearly and concisely. Highlight key takeaways and provide context for your audience.

Interpreting p-values and making decisions

P-values are calculated by comparing observed data to a null hypothesis using statistical tests. The p-value represents the probability of observing results at least as extreme as those measured, assuming the null hypothesis is true. A low p-value indicates that results like yours would be unlikely if random chance alone were at work.

To interpret p-values, compare them to a predetermined significance level (α), typically 0.05. If the p-value is less than or equal to α, reject the null hypothesis and consider the results statistically significant. This means the data are hard to explain by random chance alone, which counts as evidence for a real effect.

However, it's crucial to avoid common misconceptions when interpreting p-values:

A p-value does not indicate the probability that the null hypothesis is true or false. It only measures the probability of observing data at least as extreme as yours if the null hypothesis were true.

A statistically significant result (p ≤ α) does not guarantee the effect is practically meaningful or important. Always consider the effect size and real-world implications alongside statistical significance.

Failing to reject the null hypothesis (p > α) does not prove the null hypothesis is true. It only suggests insufficient evidence to reject it based on the observed data.

When testing statistical significance, it's essential to:

Clearly define the null and alternative hypotheses before collecting data.

Choose an appropriate statistical test based on the data type and distribution.

Interpret p-values in the context of the study design, sample size, and potential confounding factors.

Use multiple testing correction methods when conducting numerous hypothesis tests, such as Bonferroni to control the family-wise error rate or Benjamini-Hochberg to control the false discovery rate.

By understanding how to calculate and interpret p-values correctly, you can make informed decisions based on statistical evidence. This helps you distinguish genuine effects from random noise in your data, enabling you to confidently implement changes that drive meaningful improvements in your product or business.

While statistical significance is crucial, it's not the only factor to consider. Practical relevance is equally important; a statistically significant result may not always translate to meaningful real-world impact. Assess the magnitude of the effect alongside its statistical significance.

When conducting multiple comparisons, the likelihood of false positives increases. To mitigate this, apply multiple comparison corrections such as the Bonferroni correction or the Benjamini-Hochberg procedure. These adjustments help maintain the overall significance level and reduce the risk of false positives.

P-hacking, the practice of manipulating data or analysis methods to achieve statistical significance, undermines the integrity of your results. To avoid p-hacking, preregister your hypotheses, specify your analysis plan in advance, and report all conducted analyses transparently. Adhering to these practices helps protect the credibility of your findings.

Sample size plays a critical role in detecting statistically significant differences. When testing for statistical significance, ensure your sample size is adequate to detect meaningful effects. Conducting power analysis can help determine the appropriate sample size for your study.

Confounding variables can distort the relationship between your variables of interest, leading to misleading conclusions. When designing your study and analyzing data, identify and control for potential confounders. Randomization and stratification techniques can help minimize the impact of confounding variables.

Interpreting statistical significance requires caution. A statistically significant result does not necessarily imply causation; it only indicates an association. To establish causality, consider the study design, temporal relationship, and potential alternative explanations. Exercise caution when making causal claims based solely on statistical significance.
