Building modern software can be daunting.

Product managers and engineers are under pressure to ship new and meaningful features on a regular cadence, all while prioritizing a backlog of forward-looking features. They must choose the best ideas and filter out those perceived to have a lower impact.

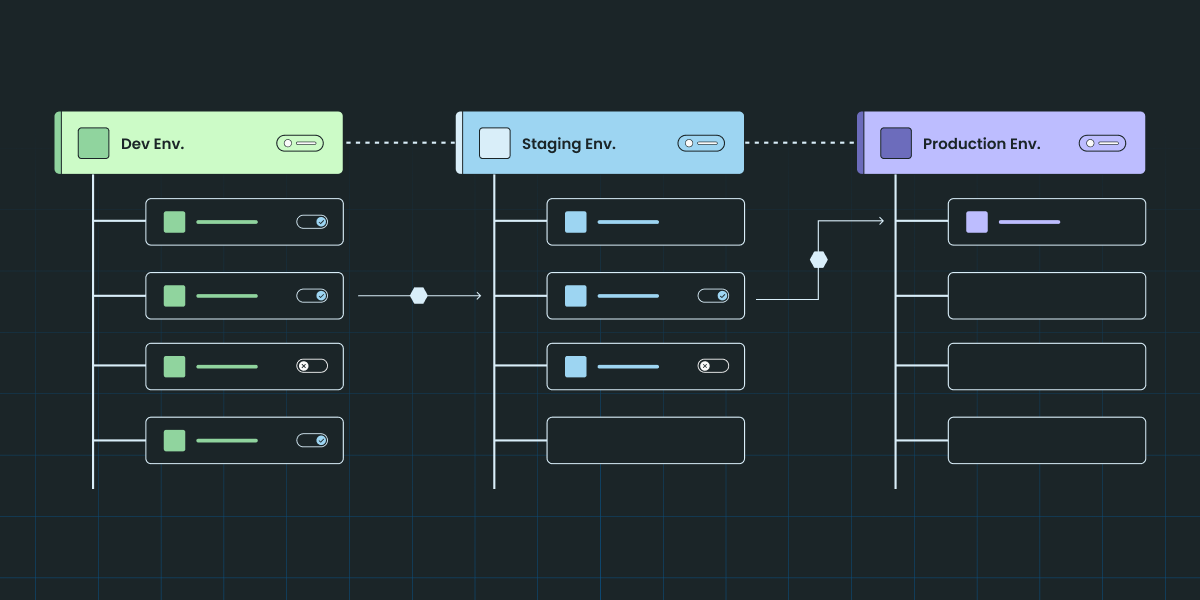

To complicate things further, many new features require code changes that aren’t meant to affect all users, or that should be rolled out partially so their impact can be tested and risk can be mitigated. Both cases can complicate and delay your product release cycle.

Typically, product teams will enact agile software development practices to address these hurdles and build a tool kit of homegrown and third-party tools to streamline code release cycles, measure feature impact, observe trends over time, and continuously improve their development process to deliver better products.

Without having the proper tools in the kit, agile development processes are greatly hindered and the consequences are reflected in a poor end-user experience. In today’s software development environment, certain paradigms are necessary to compete with accelerated release cycles.

Feature flags

One of the most important tools agile teams use is feature flags (also known as feature gates or feature toggles), which let teams control how, when, and to whom their feature updates are launched. Rolling out features in a controlled and reversible manner is an expectation in modern software development.

Why are they valuable?

Feature flags give engineers a lot of flexibility by providing an easy way to turn things on and off without constantly changing code. Wrapping a block of code in a feature flag creates an on/off switch for it.
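As a minimal sketch of that on/off switch, the Python below wraps a new code path in a flag check. The `FLAGS` dictionary, the `is_enabled` helper, and the checkout function are hypothetical names used only for illustration; a real platform would fetch flag state from a remote service rather than an in-process dictionary.

```python
# Hypothetical in-memory flag store; a real system would fetch this remotely.
FLAGS = {"new_checkout_flow": False}


def is_enabled(flag_name: str) -> bool:
    """Return the flag's state, treating unknown flags as off."""
    return FLAGS.get(flag_name, False)


def checkout(cart_total: float) -> str:
    # The new code path is wrapped in the flag, so it ships turned off.
    if is_enabled("new_checkout_flow"):
        return f"new checkout: {cart_total:.2f}"
    return f"legacy checkout: {cart_total:.2f}"


print(checkout(19.99))             # flag off: legacy path runs
FLAGS["new_checkout_flow"] = True  # flip the switch without touching checkout()
print(checkout(19.99))             # flag on: new path runs
```

Defaulting unknown flags to off is a deliberate choice in this sketch: a typo in a flag name hides the feature instead of exposing it.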

The rules that determine who sees this wrapped code can then be defined by product/business stakeholders without engineering intervention (or by engineers who are testing new features) using a platform UI, such as the Statsig Console.

By giving teams the ability to show/hide certain features and roll them back/release them quickly, teams can be more agile. They can test quickly in production environments and don’t have to wait on longer code release cycles to release new features.

Furthermore, bad or breaking features can be disabled in seconds/minutes rather than days, reducing the likelihood of core product degradation.

Feature flag example use cases

Roll out new features on a schedule (e.g., 2% -> 10% -> 50% -> 100%) to mitigate risk in case they fail or have unintended consequences.

Enable or disable additional features on the fly as user provisions change (for example, premium user experiences or access to premium features).

Enable quick kill switches for experimental features and measure their impact.

Server-side use cases

Testing new server code branches that could degrade front-end performance on internal users first (dogfooding)

Canary deployments of code changes to monitor performance degradation

Client side - Web

Delivering more custom features to segments of users

A video streaming platform might have a different streaming codec for mobile users

Client side - Mobile

Mobile deployments require users to download a new version of the app. By putting features behind a flag, you can add or remove them without a code redeploy.

What do feature flags look like?

On the code side, feature flags are simple method calls, such as statsig.checkGate("gate_name"), which return a boolean (true or false) value based on a defined set of rules, or conditional statements, stored in the feature flag platform. That value determines whether or not we show a feature to a given user.

Should we want to stop a feature from being displayed to a group of users, or to everyone, we can quickly disable it via an API call or in the console UI, without having to modify and redeploy code.

Once the gate is disabled, statsig.checkGate() always returns false, so no one sees the new feature.

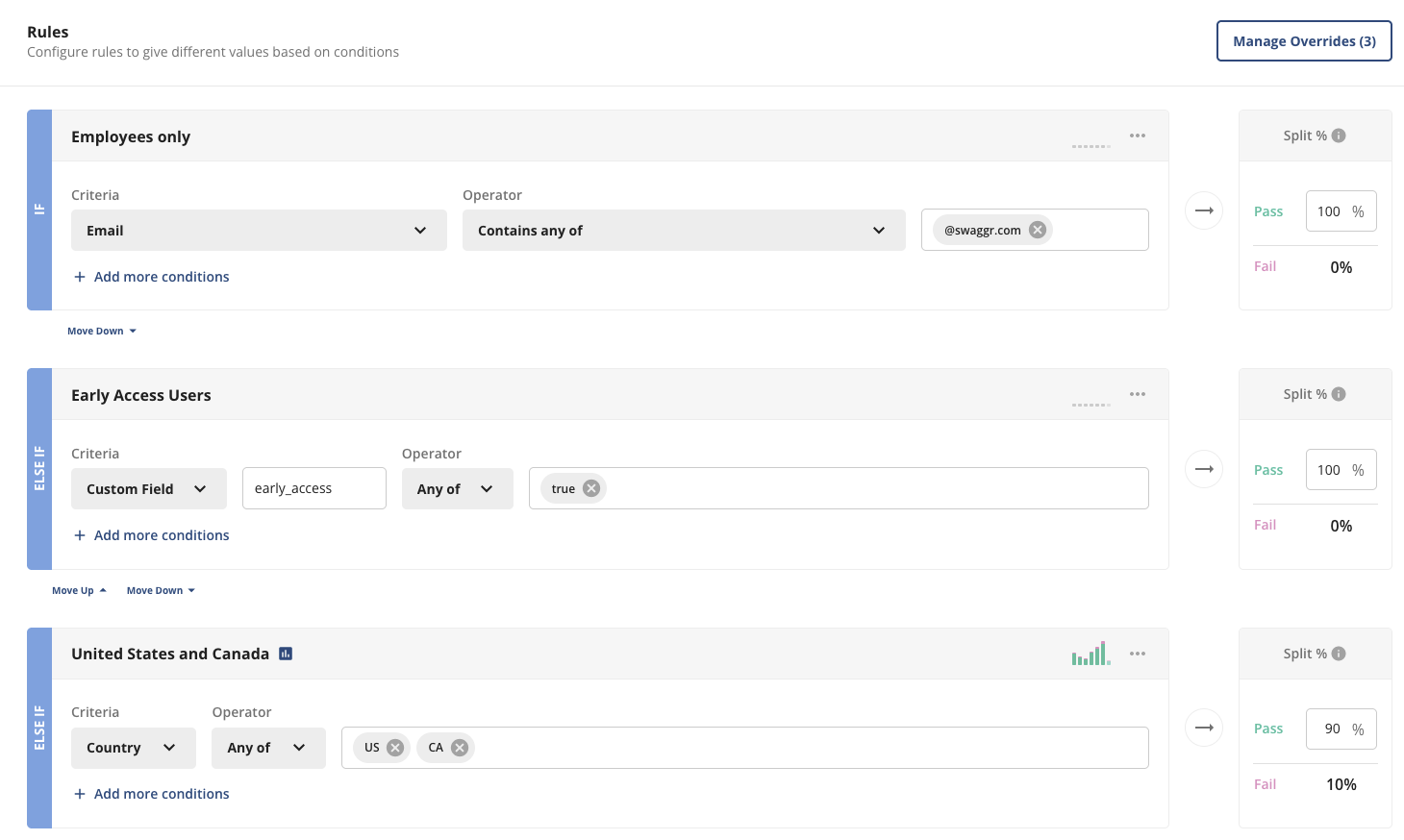

Rules

As mentioned above, rules are conditional statements or criteria used to determine which users qualify for pass/fail treatments. The pass percentage further determines the percentage of qualifying users that will be exposed to the new feature. The remaining users will see the feature disabled.
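To make the interplay between a rule and its pass percentage concrete, here is a minimal sketch of one way a gate could be evaluated. It is not Statsig's implementation: the `Gate` class, the `bucket` and `check_gate` functions, and the hash-based bucketing scheme are all assumptions chosen for illustration.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict

User = Dict[str, str]


@dataclass
class Gate:
    name: str
    rule: Callable[[User], bool]  # decides which users qualify
    pass_percentage: float        # 0-100: share of qualifying users who pass
    enabled: bool = True          # turning this off acts as a kill switch


def bucket(gate_name: str, user_id: str) -> float:
    """Map a user to a stable value in [0, 100) for this gate."""
    digest = hashlib.sha256(f"{gate_name}:{user_id}".encode()).hexdigest()
    return int(digest[:8], 16) % 10_000 / 100


def check_gate(gate: Gate, user: User) -> bool:
    if not gate.enabled:
        return False  # disabled gate: nobody passes
    if not gate.rule(user):
        return False  # user does not qualify for this rule
    # Qualifying users pass only if their bucket falls under the percentage.
    return bucket(gate.name, user["user_id"]) < gate.pass_percentage


# Hypothetical gate: US/Canada users qualify, and half of them pass.
checkout_gate = Gate(
    name="new_checkout",
    rule=lambda user: user.get("country") in {"US", "CA"},
    pass_percentage=50,
)
print(check_gate(checkout_gate, {"user_id": "user-123", "country": "CA"}))
```

Because the bucket is derived from a hash of the gate name and user ID, a given user gets the same answer on every call, and raising the pass percentage only adds users to the pass group rather than reshuffling it.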

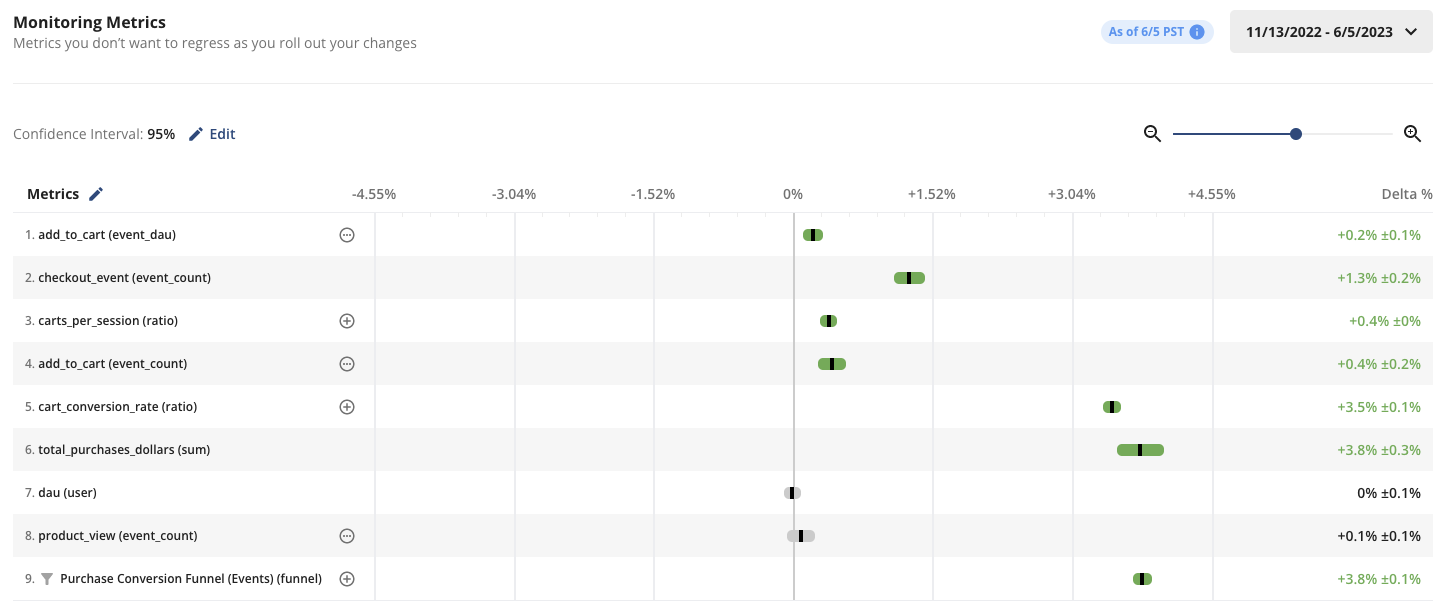

Note: The pass percentage also enables us to run A/B tests. By splitting users into two populations, we can measure and compare the metric impact between them, where the Fail group acts as the control and the Pass group acts as the test. In the US/Canada case, we can see a lift in checkout metrics for our new feature (pass) vs. the control (fail).
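The comparison described in the note comes down to computing a metric for each group and the relative lift of Pass over Fail. The sketch below uses made-up conversion counts purely for illustration, and the function names are hypothetical. A real analysis would also test for statistical significance, which is omitted here.

```python
def conversion_rate(conversions: int, users: int) -> float:
    """Fraction of users in a group who converted."""
    return conversions / users if users else 0.0


def relative_lift(test_rate: float, control_rate: float) -> float:
    """Relative change of the test group's rate over the control's."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero to compute lift")
    return (test_rate - control_rate) / control_rate


# Illustrative numbers only, not real results.
control = conversion_rate(conversions=100, users=1000)  # Fail group
test = conversion_rate(conversions=120, users=1000)     # Pass group
print(f"checkout lift: {relative_lift(test, control):.1%}")
```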

For homegrown solutions, rules are typically stored as database/cache records, configuration values, or some other quick-access storage format and fetched via an API. As teams grow, managing feature flags with robust capabilities can become a separate project and growing organizations might opt to buy a solution rather than build and maintain one internally.


Example rules and their purposes:

Does the user have a certain email?

We have a feature we only want to show internal employees (dogfooding)

We show a targeted pricing page based on the user’s company

Is this user on an Android phone?

We might have a feature that is specific to Android phones, like a screen feature or video player that is optimized for Android

Is this an early-access user?

Early access/beta users will see new features before general access and are more keen to provide feedback

We want to A/B test the impact of a feature, so we split a targeted group to create two test variants to compare
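The example rules above can be expressed as data, which is roughly how a homegrown system might store them as configuration records. In the sketch below, the JSON schema, the field names (`email`, `platform`, `early_access`), the operators, and the `example.com` domain are all assumptions made for illustration and do not reflect any Statsig format.

```python
import json

# Hypothetical rule records, as they might sit in a config file or cache.
RULES_JSON = """
{
  "internal_only":  {"field": "email", "op": "ends_with", "value": "@example.com"},
  "android_player": {"field": "platform", "op": "equals", "value": "android"},
  "early_access":   {"field": "early_access", "op": "equals", "value": true}
}
"""

# Each operator compares a user's attribute against the rule's value.
OPERATORS = {
    "equals": lambda actual, expected: actual == expected,
    "ends_with": lambda actual, expected: (
        isinstance(actual, str) and actual.endswith(expected)
    ),
}


def matches(rule: dict, user: dict) -> bool:
    """Return True when the user's attribute satisfies the rule."""
    actual = user.get(rule["field"])  # missing attributes become None
    return OPERATORS[rule["op"]](actual, rule["value"])


rules = json.loads(RULES_JSON)
user = {"email": "dev@example.com", "platform": "ios", "early_access": True}
for name, rule in rules.items():
    print(name, matches(rule, user))
```

Keeping rules as data rather than code is what allows non-engineers to change targeting without a deploy: only the stored records change, not the `matches` logic.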

Feature flag considerations

Although feature flags are a great tool that lets software teams move quickly while mitigating risk, decoupling code deployments from releases, and measuring the impact on core metrics, they do add technical complexity: every flag you implement adds another switch to your code.

To this end, Statsig engineer Matt Garnes wrote a great blog post on cleaning up old feature flags and how Statsig has features to streamline this. As with any software development process, you want to have a plan and regular processes around ensuring your code is clean.


The TLDR:

Agility is the name of the game in modern software development.

Without the proper developer tools in place, your product release cycle can slow down and the features you do release can have unintended side-effects which regress important core business metrics.

Feature flags are an inexpensive and easy mechanism to not only avoid these issues but also unlock modern software engineering flows, take risks in your product choices, and improve overall product observability.
