Ever had this nightmare? You’re asked to compile metrics from three different data sources for a colleague by the end of the day. Easy, right?

Not quite. One data source is unreliable, your data scientists are debating which metric is 'correct,' and your backup plan will take hours to verify the data. Meanwhile, your non-technical colleague can't understand why it's taking so long and wants the data now. You end up spending your evening in Athena, hammering away at the terminal to scrape data while blasting your college all-nighter playlist and hoping the numbers look good.

Sound familiar?

Lucky for you, this guide covers how to set up clear governance and how to standardize the metrics themselves. On the governance side, it covers who owns the data, who verifies the metrics, and what reviews every new metric should go through. From there, it walks through standardizing your metrics in a central repository.

I'll walk you through building a Metrics Library on Statsig and avoiding the nightmare scenario above. We'll focus on two parts:

Central Definitions, Governance, and Flexibility: Establishing clear, consistent metric definitions and robust governance, while still allowing flexibility in how metrics are created.

Access, Lineage, and Accountability: Providing clear access controls and lineage for each metric, and maintaining an audit history for accountability and transparency.

Ready to turn your data nightmares into dreams? Let’s get started!

Prerequisites

Before diving into the guide, ensure you have:

An active Statsig account with the necessary permissions to create and manage metrics.

Familiarity with your organization's data sources and the key performance indicators (KPIs) relevant to your business.

An understanding of the Statsig platform and its metrics features.

Overview of building a Metrics Library on Statsig

Part 1: Governance with Flexibility

Access, Lineage, Ownership, and History

The hardest part of an integration is determining the governance structure. It is easy to turn on data connectors and start sending data (of any quality) into a system. Deciding which teams have access to the platform, what roles to create, who can verify metrics, and how reviews should work is much harder, and it can be tempting to skip it entirely and just cross your fingers.

Luckily, Statsig lets you map out exactly how you want the governance structure for your Metrics Library to work in four steps.

Access Control: Implement Role-Based Access Control (RBAC) to manage who can create, edit, or view metrics. This ensures that only authorized personnel can modify metric definitions, maintaining the integrity of your Metrics Library.

Make sure each critical role has a backup in case a key admin goes on vacation. After all, no one wants to call their boss for a simple change request!
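To make the access-control step concrete, here is a minimal sketch of a role-to-permission mapping plus a check for roles that have no backup. The role names, permission names, and helper functions are hypothetical and purely illustrative; they are not Statsig's actual role model or API, which you configure in the Statsig console.

```python
# Hypothetical role model for illustration only; not Statsig's actual roles or API.
ROLE_PERMISSIONS = {
    "viewer": {"view"},
    "editor": {"view", "create", "edit"},
    "admin": {"view", "create", "edit", "verify", "manage_roles"},
}


def can(role, action):
    """Return True if `role` is allowed to perform `action`."""
    return action in ROLE_PERMISSIONS.get(role, set())


def roles_without_backup(assignments, critical=("admin",)):
    """Return critical roles held by fewer than two people, i.e. with no backup."""
    counts = {}
    for role in assignments.values():
        counts[role] = counts.get(role, 0) + 1
    return [r for r in critical if counts.get(r, 0) < 2]


team = {"ana": "admin", "ben": "editor", "cy": "viewer"}
print(can("editor", "edit"))       # True
print(can("viewer", "edit"))       # False
print(roles_without_backup(team))  # ['admin']
```

Here only one person holds the admin role, so the backup check flags it before that admin's vacation becomes a problem.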

Determine Lineage and Ownership: Clearly define the ownership of each metric. Use tags to associate metrics with specific teams or business functions, facilitating easier management and accountability.

This is also a good time to do some spring cleaning on your metrics. Is there one that no longer aligns with your KPIs? Is there one that is simply useless? Is there a new metric you want to create?
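As an illustration of tag-based ownership and spring cleaning, the sketch below groups metrics by a `team:` tag and lists metrics that have gone unused. The `team:<name>` tag convention, the `Metric` class, and the `used_in_last_90_days` flag are all hypothetical assumptions for this example, not Statsig fields.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Metric:
    name: str
    tags: set = field(default_factory=set)  # hypothetical convention, e.g. {"team:growth"}
    used_in_last_90_days: bool = True       # hypothetical usage flag


def owners(metrics):
    """Group metric names by their 'team:' tag; untagged metrics land in 'unowned'."""
    by_team = defaultdict(list)
    for m in metrics:
        teams = [t.split(":", 1)[1] for t in m.tags if t.startswith("team:")]
        for team in teams or ["unowned"]:
            by_team[team].append(m.name)
    return dict(by_team)


def cleanup_candidates(metrics):
    """Metrics nobody has used recently are candidates for spring cleaning."""
    return [m.name for m in metrics if not m.used_in_last_90_days]


library = [
    Metric("dau", {"team:growth"}),
    Metric("checkout_rate", {"team:payments"}),
    Metric("old_banner_clicks", set(), used_in_last_90_days=False),
]
print(owners(library))
print(cleanup_candidates(library))  # ['old_banner_clicks']
```

An "unowned" bucket is a useful signal on its own: any metric that lands there needs an owner assigned before it can be held accountable.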

Review Process: Implement a review process for new metrics. It may be fun to let everyone send in whatever data they like, but it quickly gets confusing when no one knows whether to use ‘test123’ or ‘test_123’. A review step for every new metric sent to Statsig avoids that confusion.
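One piece of such a review can be automated. The sketch below assumes a hypothetical house rule that metric names are snake_case, and flags near-duplicates like ‘test123’ and ‘test_123’ by comparing names with case and separators stripped. It is an illustrative pre-review check, not a Statsig feature.

```python
import re

# Hypothetical naming rule: lowercase snake_case, starting with a letter.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")


def normalize(name):
    """Drop case and separators so 'test123', 'Test_123', and 'test-123' compare equal."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def review_new_metric(name, existing):
    """Return a list of review problems for a proposed metric name (empty means OK)."""
    problems = []
    if not SNAKE_CASE.match(name):
        problems.append(f"'{name}' is not snake_case")
    if name in existing:
        problems.append(f"'{name}' already exists")
    clashes = [e for e in existing if e != name and normalize(e) == normalize(name)]
    if clashes:
        problems.append(f"'{name}' looks like a duplicate of {clashes}")
    return problems


print(review_new_metric("test_123", ["test123"]))
print(review_new_metric("checkout_rate", ["dau"]))  # []
```

A check like this catches the mechanical issues, leaving human reviewers free to focus on whether the metric is worth adding at all.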

Verified Metrics: You may not be able to get a verified check on your Instagram or X profile, but you can at least boast about getting one in Statsig! Establish a set of core, verified metrics that set the standard for reliability and accuracy. Verified metrics are curated by your company and marked with a verified icon, making it clear which metrics are trustworthy.

By following the four steps above, you’ll establish a clear structure for who owns which data and how new metrics are reviewed. And if you ever need to see how a metric was modified over time, Statsig maintains a history of changes made to metrics.
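Statsig keeps this history for you, but the idea behind an audit trail is simple enough to sketch: an append-only log of who changed which attribute of which metric, and when. The `Change` and `AuditLog` classes below are hypothetical and only illustrate the concept; they do not mirror how Statsig stores its change history.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Change:
    """One immutable audit entry (hypothetical structure)."""
    metric: str
    attribute: str
    old: object
    new: object
    author: str
    at: datetime


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, metric, attribute, old, new, author):
        """Append a change; entries are never edited or deleted."""
        self.entries.append(
            Change(metric, attribute, old, new, author, datetime.now(timezone.utc))
        )

    def history(self, metric):
        """All changes to one metric, oldest first."""
        return [e for e in self.entries if e.metric == metric]


log = AuditLog()
log.record("dau", "verified", False, True, "ana")
log.record("dau", "aggregation", "count", "count_distinct", "ben")
log.record("revenue", "owner", "growth", "payments", "cy")
for change in log.history("dau"):
    print(change.author, change.attribute, change.old, "->", change.new)
```

Making entries immutable (`frozen=True`) and only ever appending is what lets the log serve as a trustworthy record for accountability.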

Part 2: Central definition of metrics

Centralized Metrics Library

Now that you’ve handled the bureaucracy and planning of your governance, it’s time for the easier part: integration! With Statsig, it’s easy to start sending over metrics, create custom metrics, and implement our Semantic Layer.

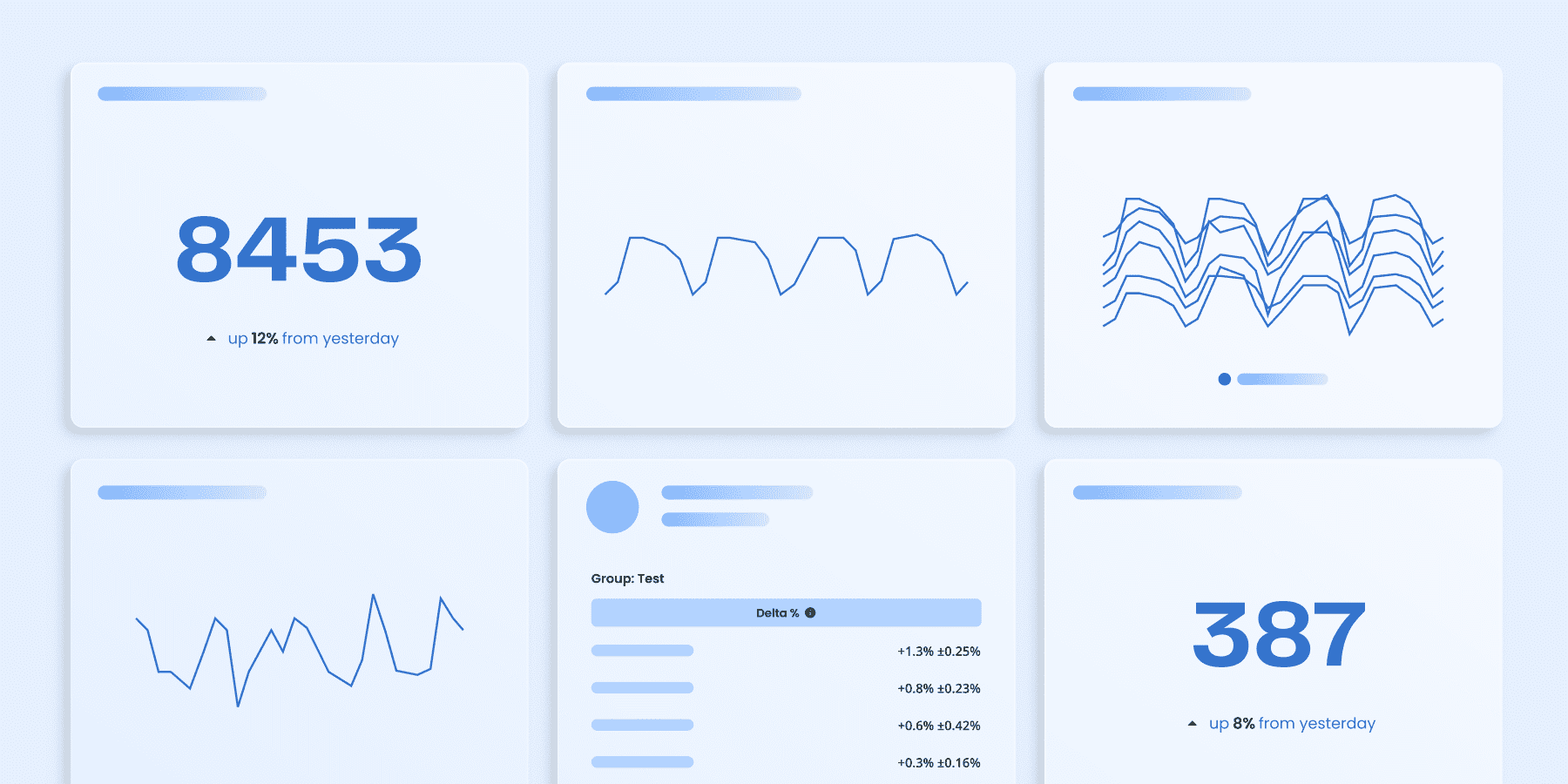

Creating Metrics: Define metrics by combining a metric source, an aggregation, and optional filters and advanced settings. Your Metrics Library should include the core metrics that are critical to measuring your product’s success. These could include daily active users (DAU), conversion rates, retention rates, or any other metric that provides insight into user engagement and business performance.
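To show what "a metric source, an aggregation, and optional filters" means in practice, here is a minimal sketch that evaluates such a definition against in-memory events. The `MetricDefinition` class, the aggregation names, and the event fields are hypothetical stand-ins for illustration; in Statsig you define these in the console or the Semantic Layer, not with this code. The sample rows are assumed to cover a single day, so the distinct-user count stands in for DAU.

```python
from dataclasses import dataclass, field

# Hypothetical aggregations: each takes the filtered rows and a column name.
AGGREGATIONS = {
    "count": lambda rows, col: len(rows),
    "count_distinct": lambda rows, col: len({r[col] for r in rows}),
    "sum": lambda rows, col: sum(r[col] for r in rows),
}


@dataclass
class MetricDefinition:
    name: str
    source: str            # name of the metric source (e.g. an event table)
    aggregation: str       # key into AGGREGATIONS
    column: str = "user_id"
    filters: list = field(default_factory=list)  # predicates applied to each row

    def compute(self, sources):
        """Filter the source rows, then apply the aggregation."""
        rows = [r for r in sources[self.source] if all(f(r) for f in self.filters)]
        return AGGREGATIONS[self.aggregation](rows, self.column)


# One day of sample events (illustrative data).
sources = {"events": [
    {"user_id": "u1", "event": "login"},
    {"user_id": "u1", "event": "purchase", "value": 10.0},
    {"user_id": "u2", "event": "login"},
]}

dau = MetricDefinition("dau", "events", "count_distinct")
revenue = MetricDefinition("revenue", "events", "sum", column="value",
                           filters=[lambda r: r["event"] == "purchase"])
print(dau.compute(sources))      # 2
print(revenue.compute(sources))  # 10.0
```

Keeping the source, aggregation, and filters as separate pieces is what makes definitions reusable: the same event source feeds both metrics, and only the aggregation and filters change.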

We mentioned cleaning out metrics earlier, and this is another good opportunity to make sure you’re tracking the metrics that matter to you, your team, and your company. No one wants history to repeat itself!

Custom Metrics: Define custom metrics for more granular analysis or to capture unique aspects of user behavior that are not covered by standard metrics. Custom metrics can be created based on events logged with session information or other specific data points.
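As an example of a custom metric built from session information, the sketch below computes average session length, measured as the time between the first and last event in each session. The `session_id` and `ts` (seconds) fields are hypothetical; your logged events may use different names, and Statsig may compute session metrics differently.

```python
def avg_session_seconds(events):
    """Average session length: last event time minus first event time, per session_id."""
    bounds = {}  # session_id -> (earliest ts, latest ts)
    for e in events:
        sid, ts = e["session_id"], e["ts"]
        lo, hi = bounds.get(sid, (ts, ts))
        bounds[sid] = (min(lo, ts), max(hi, ts))
    if not bounds:
        return 0.0
    return sum(hi - lo for lo, hi in bounds.values()) / len(bounds)


events = [
    {"session_id": "s1", "ts": 0},
    {"session_id": "s1", "ts": 120},
    {"session_id": "s2", "ts": 10},
    {"session_id": "s2", "ts": 40},
    {"session_id": "s2", "ts": 70},
]
print(avg_session_seconds(events))  # 90.0
```

Session s1 lasts 120 seconds and s2 lasts 60, so the average is 90 seconds. Note that a single-event session counts as zero seconds under this definition, which is exactly the kind of choice worth writing down in the metric's description.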

Semantic Layer: Use the Semantic Layer to define metrics and metric sources programmatically. This allows for automatic synchronization of allowed sources and columns, ensuring a clean and transparent metric definition process. To learn more about the Semantic Layer and how to implement it, see our documentation.
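The benefit of defining metrics in code is that they can be checked automatically before they land. The sketch below validates a list of metric definitions against a catalog of allowed sources and columns. The dictionary format, the `ALLOWED` catalog, and the `validate` helper are hypothetical and do not reflect Statsig's actual Semantic Layer file format; see the documentation for that.

```python
# Hypothetical catalog of approved sources and their columns.
ALLOWED = {
    "events": {"user_id", "event", "value", "ts"},
    "orders": {"order_id", "user_id", "amount"},
}

# Hypothetical metric definitions kept in version control.
DEFINITIONS = [
    {"name": "revenue", "source": "orders", "aggregation": "sum", "column": "amount"},
    {"name": "dau", "source": "events", "aggregation": "count_distinct", "column": "user_id"},
]


def validate(defs, allowed):
    """Return a list of errors; an empty list means every definition is valid."""
    errors = []
    seen = set()
    for d in defs:
        if d["name"] in seen:
            errors.append(f"{d['name']}: duplicate name")
        seen.add(d["name"])
        cols = allowed.get(d["source"])
        if cols is None:
            errors.append(f"{d['name']}: unknown source '{d['source']}'")
        elif d["column"] not in cols:
            errors.append(f"{d['name']}: column '{d['column']}' not in '{d['source']}'")
    return errors


print(validate(DEFINITIONS, ALLOWED))  # []
```

Run as a CI step, a check like this rejects a definition that points at an unapproved source or a misspelled column before it ever reaches the Metrics Library.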

By implementing these strategies, you can build a robust and flexible Metrics Library on Statsig backed by a solid governance structure, turning your data nightmares into dreams. Start by creating your first metric, or even by sending your team a meeting request to decide on governance!

Get started now!