Ever waited for an app to load and felt like it was taking forever? We've all been there: tapping our fingers, wondering why an app isn't responding as quickly as we'd like. Users today expect apps to be fast and seamless.

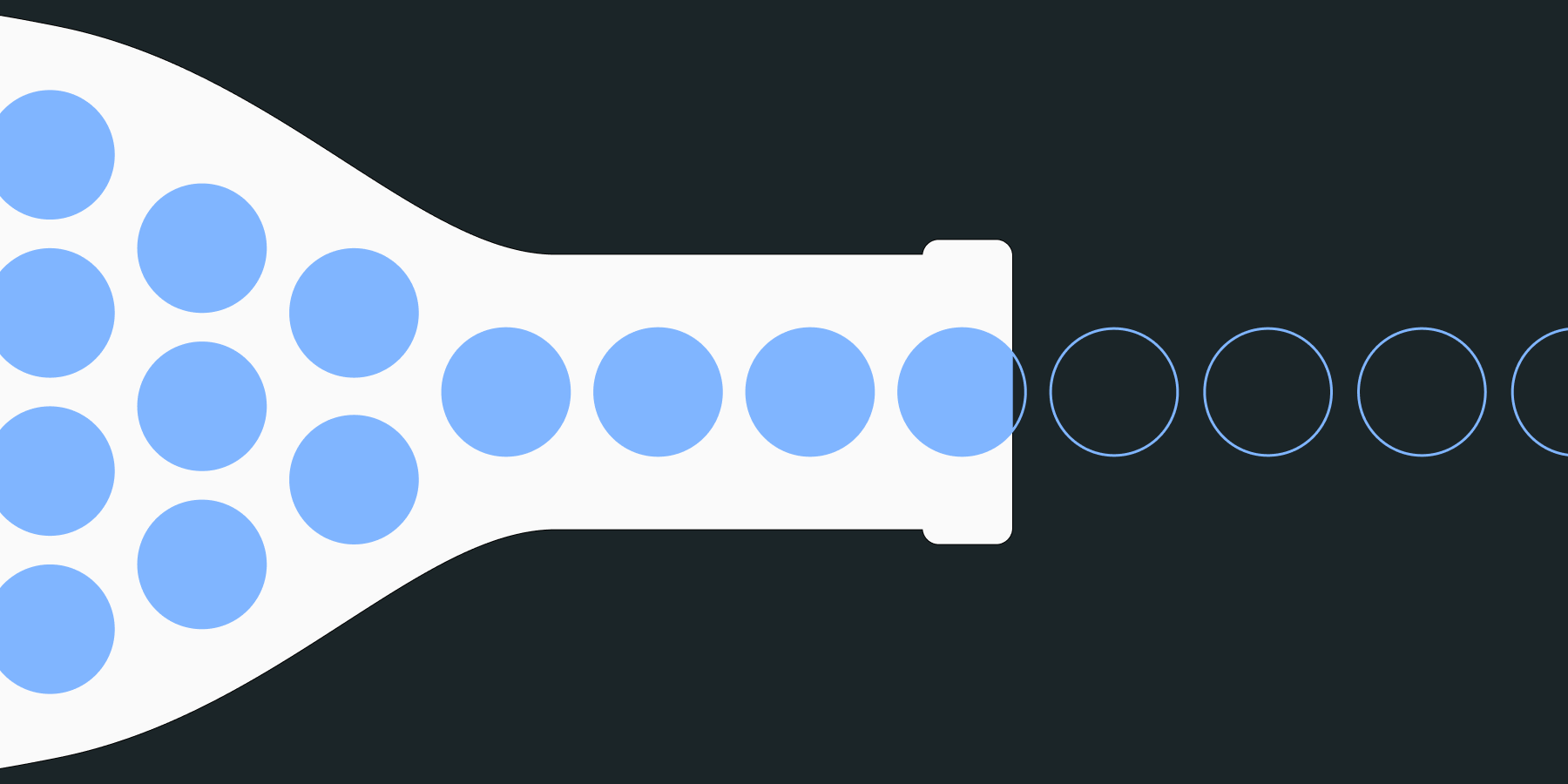

So what's slowing things down? Performance bottlenecks. They're the hidden culprits that can make an otherwise great app feel sluggish. Let's dive into what causes these bottlenecks and how we can tackle them to keep our apps running smoothly.

Understanding performance bottlenecks in app development

Performance bottlenecks are issues that seriously hurt an app's efficiency and user experience. They happen when certain parts of your app hog too many resources, causing slowdowns and lag. Figuring out where these bottlenecks are and fixing them is crucial to maintaining smooth and efficient performance.

These bottlenecks usually show up in CPU and memory usage. Heavy CPU usage might come from complex algorithms or poorly coordinated multi-threading (threads contending for the same locks, for example), which slows down processing. Excessive memory consumption, often due to memory leaks or inefficient data handling, can slow down your app or even make it crash.

And let's not forget the impact on users. Bottlenecks can lead to slow loading times and unresponsive interfaces. Frustrated users might abandon your app, increasing bounce rates and hurting your bottom line. Addressing performance bottlenecks isn't just about the tech—it's about keeping your users happy and staying competitive.

Common sources of bottlenecks include inefficient database queries, too many network requests, and slow server-side processing. Users experience them as slow loading times and high server response times. By pinpointing and optimizing these areas, developers can boost overall app performance, leading to happier users and better business results.

Identifying performance bottlenecks with profiling and testing tools

Using profiling and monitoring tools

Profiling tools are your go-to for finding resource-intensive parts of your app. They give you insights into CPU usage, memory consumption, and execution times. Some popular profiling tools include VisualVM (for JVM applications), the PyCharm profiler (for Python), and perf (for Linux systems).
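To make this concrete, here is a minimal sketch using Python's built-in `cProfile` and `pstats` modules. The functions `slow_sum_of_squares` and `handle_request` are hypothetical stand-ins for your own code; the point is only to show how a profiler report surfaces the function where time is spent.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n: int) -> int:
    """Deliberately naive hot spot: builds a full list before summing it."""
    squares = [i * i for i in range(n)]
    return sum(squares)


def handle_request(n: int = 200_000) -> int:
    """Hypothetical request handler that hits the hot spot three times."""
    return sum(slow_sum_of_squares(n) for _ in range(3))


def profile_call(func, *args) -> str:
    """Run func under cProfile and return a report sorted by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(10)  # show the top 10 entries
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_call(handle_request))
```

In the printed report, the rows with the highest cumulative time are the first places to look when deciding what to optimize.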

Keeping an eye on critical metrics is essential for spotting performance issues. Monitor response times, throughput, and resource utilization. Tools like Dynatrace offer automated root cause analysis and real-time performance monitoring to help you stay on top of things.

Conducting performance testing

Load and stress testing help uncover bottlenecks by simulating real-world usage. Load testing checks how your app performs under expected traffic, while stress testing pushes it past that level to find its breaking point. Tools like Apache JMeter and Gatling make it easier to run these tests.
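Dedicated tools do far more than this, but the core idea of a load test can be sketched in a few lines of Python: send many requests concurrently and look at the latency distribution. In this sketch `fake_endpoint` is a hypothetical stand-in that just sleeps; in practice you would replace it with a call to your real service.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_endpoint() -> None:
    """Stand-in for a real request; sleeps to imitate about 5 ms of work."""
    time.sleep(0.005)


def timed_call(target) -> float:
    """Return how long a single call to target takes, in seconds."""
    start = time.perf_counter()
    target()
    return time.perf_counter() - start


def run_load(target, total_requests: int, concurrency: int) -> dict:
    """Call target total_requests times using `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(
            pool.map(lambda _: timed_call(target), range(total_requests))
        )
    # 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": len(latencies),
        "median_s": statistics.median(latencies),
        "p95_s": cuts[94],
        "max_s": max(latencies),
    }


if __name__ == "__main__":
    print(run_load(fake_endpoint, total_requests=200, concurrency=20))
```

Raising `concurrency` until the 95th percentile latency climbs sharply is a rough way to move from a load test toward a stress test.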

Setting up performance baselines is a smart move for tracking improvements over time. Baselines give you a reference point to compare future test results. By regularly conducting performance tests and analyzing metrics, you can catch performance issues early.
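A baseline comparison can be as simple as the sketch below. The metric names and the 10% tolerance are assumptions chosen for illustration; pick thresholds that fit your own app.

```python
def find_regressions(baseline: dict, current: dict, tolerance: float = 0.10) -> dict:
    """Return metrics whose current value exceeds the baseline by more than tolerance.

    Assumes every metric is "lower is better" (latency, memory, and so on).
    """
    regressions = {}
    for name, base_value in baseline.items():
        new_value = current.get(name)
        if new_value is None:
            continue  # metric was not measured in this run
        if new_value > base_value * (1 + tolerance):
            regressions[name] = (base_value, new_value)
    return regressions


if __name__ == "__main__":
    baseline = {"p95_ms": 200.0, "median_ms": 80.0}
    current = {"p95_ms": 260.0, "median_ms": 82.0}
    print(find_regressions(baseline, current))
    # prints {'p95_ms': (200.0, 260.0)}
```

Running a check like this after each performance test turns the baseline into an automatic alarm instead of a number someone has to remember.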

Addressing common performance bottlenecks

First off, optimizing inefficient database queries is key for speeding up data retrieval. Dig into query execution plans and set up the right indexes to make queries faster. Skip unnecessary joins and use caching to ease the load on your database.
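Here is a small sketch of checking a query plan before and after adding an index, using Python's built-in `sqlite3` module. The `orders` table and index name are made up for the example, and the exact plan wording varies between SQLite versions, but the shift from a full table scan to an index search is the thing to look for.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> list:
    """Return the human-readable steps of SQLite's plan for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory table with 1,000 hypothetical orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        [(i % 100, i * 1.5) for i in range(1000)],
    )
    return conn


if __name__ == "__main__":
    conn = build_demo_db()
    sql = "SELECT total FROM orders WHERE customer_id = ?"

    print("before:", query_plan(conn, sql, (42,)))  # expect a full scan
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    print("after: ", query_plan(conn, sql, (42,)))  # expect an index search
```

Most databases offer an equivalent command (often `EXPLAIN`), so the same before-and-after habit carries over.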

When it comes to reducing CPU usage, code optimization and efficient algorithms are your allies. Use profiling tools to find CPU-heavy tasks and optimize them. Techniques like lazy evaluation, memoization, and parallel processing can help lower CPU overhead.
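Memoization is the easiest of these techniques to show. The sketch below uses `functools.lru_cache` from Python's standard library on a recursive Fibonacci function, which is a toy example rather than real app code, but it shows how caching repeated work cuts the number of computations.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n: int) -> int:
    """Fibonacci with memoization: each distinct n is computed only once."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


if __name__ == "__main__":
    print(fib(30))           # prints 832040
    print(fib.cache_info())  # hits are calls served from the cache
```

Without the decorator, `fib(30)` makes over a million recursive calls; with it, each of the 31 distinct inputs is computed once. The trade-off is memory, so cache only results that are actually requested repeatedly.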

Be on the lookout for memory leaks—they can cause your app to use excessive memory and crash. Memory profiling tools can help you spot and fix leaks. Make sure you're managing memory properly by releasing unused resources and avoiding unnecessary object creation. Regular monitoring can catch leaks before they become big problems.

Inefficient network communication can add unwanted latency. Cut down on the number of requests and optimize payload sizes. Use caching and content delivery networks (CDNs) to reduce network overhead. Consider compression techniques and protocol optimizations for faster data transfer.
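To illustrate payload optimization, the sketch below serializes a batch of records as compact JSON and compresses it with gzip, both from Python's standard library. The record fields are invented for the example, and real savings depend on how repetitive your data is.

```python
import gzip
import json


def encode_payload(records: list) -> tuple:
    """Return (raw_bytes, gzipped_bytes) for a batch of records."""
    # Compact separators drop the spaces that json.dumps adds by default.
    raw = json.dumps(records, separators=(",", ":")).encode("utf-8")
    return raw, gzip.compress(raw)


def decode_payload(packed: bytes) -> list:
    """Reverse encode_payload on the receiving side."""
    return json.loads(gzip.decompress(packed).decode("utf-8"))


if __name__ == "__main__":
    records = [
        {"id": i, "status": "active", "region": "us-east"} for i in range(500)
    ]
    raw, packed = encode_payload(records)
    print(f"raw: {len(raw)} bytes, gzipped: {len(packed)} bytes")
```

Sending one compressed batch instead of hundreds of small requests tackles both problems at once: fewer round trips and fewer bytes on the wire.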

Finally, make it a habit to continuously monitor and optimize your app's performance. Use performance monitoring tools like Statsig to proactively find bottlenecks. Regular performance tests and metric tracking help you see where improvements can be made. Staying up to date with best practices and new technologies helps keep your app competitive.

Enhancing app performance with server-side testing and continuous optimization

Server-side testing is crucial for fine-tuning backend processes and boosting overall app performance. By running experiments on the server side, developers can identify bottlenecks and tweak their apps for faster responses and better scalability. Server-side testing allows for precise data collection and analysis, making backend optimizations more effective.

Implementing server-side experiments means using feature flags to control feature exposure and gather performance data. Tools like Apache JMeter, Gatling, and Postman can simulate heavy loads and measure how your app performs under different conditions. By analyzing this data, you can pinpoint areas that need improvement and make targeted optimizations.
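The sketch below shows the general shape of a flag-gated server-side experiment in plain Python. It does not use any real SDK: `assign_variant`, the `fast_lookup` flag name, and the two lookup functions are all hypothetical, and a production setup would rely on a feature-flag platform for assignment and analysis.

```python
import hashlib
import statistics
import time
from collections import defaultdict


def assign_variant(user_id: str, flag_name: str, rollout_percent: int = 50) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "treatment" if bucket < rollout_percent else "control"


def lookup_control(items: list, key: int) -> bool:
    """Existing code path: linear scan through a list."""
    return key in items


def lookup_treatment(index: set, key: int) -> bool:
    """Candidate code path: hash-based set lookup."""
    return key in index


def run_experiment(user_ids, flag_name: str = "fast_lookup") -> dict:
    """Serve each user their variant and return mean latency per variant."""
    items = list(range(50_000))
    index = set(items)
    latencies = defaultdict(list)
    for user_id in user_ids:
        variant = assign_variant(user_id, flag_name)
        start = time.perf_counter()
        if variant == "treatment":
            lookup_treatment(index, 49_999)
        else:
            lookup_control(items, 49_999)
        latencies[variant].append(time.perf_counter() - start)
    return {name: statistics.mean(values) for name, values in latencies.items()}


if __name__ == "__main__":
    print(run_experiment([f"user-{i}" for i in range(1000)]))
```

Hashing the flag name together with the user ID keeps assignment stable across requests, so each user's latency data belongs cleanly to one variant.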

Continuous monitoring and optimization are essential for keeping your app performing at its best. Platforms like Statsig provide comprehensive monitoring and detailed metrics. They help developers quickly identify and address performance issues. By leveraging these tools, your team can ensure the app consistently delivers a smooth user experience, even as traffic and complexity grow.

Closing thoughts

Tackling performance bottlenecks might seem daunting, but with the right approach and tools, it's definitely doable. Identifying and fixing these issues not only enhances your app's performance but also keeps your users coming back for more. Platforms like Statsig offer powerful solutions for monitoring, experimentation, and optimization to help you along the way.

Keep exploring and learning about performance optimization. There are plenty of resources out there on server-side testing, profiling tools, and best practices for continuous improvement. If you're looking to dive deeper, check out the Statsig blog for more insights and tips. Happy optimizing!