CURE brings powerful, flexible regression adjustment to every Statsig experiment.

Statsig is excited to announce that CURE is moving out of beta and into full production launch. CURE is an extension of CUPED that lets users add arbitrary covariate data to the regression adjustment in their experiments, reducing variance even further than existing CUPED implementations.

💡 Related: Check out our blog about the CUPED technique for a refresher

We named this feature CURE as a riff on how it evolves “vanilla” CUPED:

CUPED: Controlled [Experiment] Using Pre-Experiment Data

CURE: [Variance] Control Using Regression Estimates

CURE is particularly effective for companies experimenting on new users, or in other situations where a user’s historical behavior isn’t available. For example, if users from the US tend to have a higher signup rate, including “Country” as a covariate should reduce your variance when measuring signups in an experiment, meaning you need fewer users to get meaningful results.

For teams already using CUPED on Statsig with strong historical user-level data, the gains will be more modest. As many teams have written, pre-experiment data on the same behavior is generally the strongest input to regression adjustment by a large factor. Even so, these teams should expect to see some additional reduction in variance.

Our approach to regression adjustment

There are a number of valid approaches to regression adjustment out in the wild, from Snap’s binning-based approach to CUPAC at DoorDash.

We wanted to give Statsig customers the benefits of those methods without building something opaque, where it is impossible to understand what is going on behind the scenes. To that end, we designed our system to be easy and safe to use, and highly flexible.

Using CURE, you can get the benefits of most of the CUPED approaches. For example:

If you have a predictive model of future behaviors, you can easily use its output as a covariate in CURE (like DoorDash’s CUPAC)

If you want to use “user binning” to normalize for user behavior and control variance, like Snapchat, you can provide each user’s bin for a given behavior as a categorical covariate

If you want to provide additional signal to the standard CUPED approach, you can pick and choose different user attributes or behaviors to add to the regression

Highlights:

Arbitrary covariates: We’ll use categorical or numerical fields from your exposure data (e.g. a country logged with the unit’s exposure), but you can also plug in any data from an Entity Property Source. We manage data quality for you, for example by ensuring that only pre-experiment data makes it into the regression.

Project-level configuration: You can specify sources (country; segmentation model outputs; subscription status) and have them apply as default covariates to all of your experiments. You can also choose covariates for an individual experiment.

Managed feature selection: The risk of a feature like this is a vendor owning a black box that just “throws data at the wall to see what sticks”. We’ve spent a lot of time building a system that carefully selects and engineers features, so that collinearity and un-sanitized data don’t cause arbitrary jitter in your results. Customers have reported those issues when using other systems. There’s also a lot planned to transparently surface the “behind the scenes” of our model.

Consistency: Statsig has always prioritized making our results consistent on every surface. You will get CURE on your scorecards, your explore queries (drilldowns, groupbys, etc.), and in power analysis.

We’re confident that we’ve built a best-in-class system for anyone serious about running experiments faster, and we’re eager to get it out to our customers.

Getting started with CURE

Using CURE is simple. To get started, make a new experiment and enable covariates in the advanced settings. Statsig will guess which features are categorical/numerical based on type and cardinality, but you can always override that guess.
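As a rough illustration of what guessing from type and cardinality could look like, here is a minimal Python sketch. The function name `guess_covariate_type` and the `max_numeric_categories` threshold are hypothetical and chosen for this example; they are not Statsig's actual rules.

```python
from numbers import Number


def guess_covariate_type(values, max_numeric_categories=10):
    """Guess 'categorical' or 'numerical' from value type and cardinality.

    Hypothetical heuristic for illustration only.
    """
    present = [v for v in values if v is not None]
    if not present:
        return "unknown"
    # Strings, booleans, and other non-numeric values are treated as categories.
    if any(isinstance(v, bool) or not isinstance(v, Number) for v in present):
        return "categorical"
    # A numeric column with very few distinct values behaves like a category.
    if len(set(present)) <= max_numeric_categories:
        return "categorical"
    return "numerical"


country_guess = guess_covariate_type(["US", "CA", None, "US"])
plan_tier_guess = guess_covariate_type([1, 2, 3, 1, 2, 3])
tenure_guess = guess_covariate_type([0.5 * i for i in range(50)])
```

Under these assumed rules, a string column and a low-cardinality integer column both come out as categorical, while a numeric column with many distinct values comes out as numerical. This is the kind of guess an override setting exists to correct.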

If you have a feature store, or user attributes in a dim table, you can easily use those by connecting them to an Entity Property Source. After connecting them, those covariates will be available in the settings menu of any experiment that shares an ID type with that property source.

Once that’s set up, you run your experiment analysis as usual.

The only difference will be the reduced variance. On the scorecard for your experiment, Statsig will surface the results of your regression, the features it selected, and the relative contributions of those features to reducing your variance.

For more information, check out our CURE documentation.

What CURE does

CURE performs a number of steps (alongside numerous health checks) to provide regression analysis of customer experiments. As a high-level summary, there are four major steps in Statsig’s approach:

1. Feature tracking

The first step of CURE is to associate features with users. This part is simple: when a full reload starts, or when users are added to the experiment in incremental reloads, we track each unit’s features in a long-format table of identifiers and values. Categorical features are one-hot encoded to track the top identified dimensions.
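To make the long-format layout concrete, here is a small Python sketch of one-hot encoding a categorical feature into (unit, feature, value) rows. The function name `one_hot_top_k`, the `top_k` cutoff, and the `feature=level` naming are assumptions for illustration, not Statsig's schema.

```python
from collections import Counter


def one_hot_top_k(unit_values, feature, top_k=2):
    """Encode one categorical feature as long-format (unit_id, feature, value) rows.

    Only the top_k most common levels get an indicator column; units with any
    other level get 0.0 everywhere, which acts as an implicit "other" bucket.
    """
    counts = Counter(unit_values.values())
    # Sort by frequency (descending), then by name so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    top_levels = [level for level, _ in ranked[:top_k]]
    rows = []
    for unit_id, value in unit_values.items():
        for level in top_levels:
            indicator = 1.0 if value == level else 0.0
            rows.append((unit_id, f"{feature}={level}", indicator))
    return rows


countries = {"u1": "US", "u2": "US", "u3": "CA", "u4": "FR", "u5": "CA", "u6": "US"}
long_rows = one_hot_top_k(countries, "country", top_k=2)
```

Capping the number of encoded levels keeps the feature count bounded even when a field has thousands of distinct values.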

2. Computation

To run any regression, you need a matrix of variances and covariances between the different features and the outcome. This is easy to compute on any modern data warehouse, though it requires a fair amount of automated SQL generation.
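The reason this works well in a warehouse is that a covariance matrix only needs simple aggregates: a row count, per-column sums, and sums of pairwise products. The sketch below shows that idea in Python rather than generated SQL; the function name `covariance_from_sums` and the row layout are assumptions for illustration.

```python
def covariance_from_sums(rows):
    """Sample covariance matrix from one pass of running sums.

    rows: list of equal-length numeric lists, one per unit, e.g. [x1, ..., xp, y].
    Only n, sum(v_i), and sum(v_i * v_j) are accumulated, which are the kinds
    of aggregates a SQL query can compute with SUM() and COUNT().
    """
    k = len(rows[0])
    n = 0
    sums = [0.0] * k
    cross = [[0.0] * k for _ in range(k)]
    for row in rows:
        n += 1
        for i in range(k):
            sums[i] += row[i]
            for j in range(k):
                cross[i][j] += row[i] * row[j]
    # cov(i, j) = (sum(v_i * v_j) - sum(v_i) * sum(v_j) / n) / (n - 1)
    return [
        [(cross[i][j] - sums[i] * sums[j] / n) / (n - 1) for j in range(k)]
        for i in range(k)
    ]


# Toy data: one covariate x and an outcome y = 2x.
cov = covariance_from_sums([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]])
```

One caveat on this textbook formula: with very large means relative to the variance, subtracting two large sums can lose floating-point precision, so a production system might center the data first.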

3. Feature selection and regression

Once the matrix is computed, the aggregated data can be processed in milliseconds on Statsig’s servers. We use a modified lasso regression to minimize collinearity, and we run a number of health checks to track potential issues.
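For intuition on why a lasso can run on aggregates alone, here is a minimal sketch of plain lasso fitted by coordinate descent directly on a covariance matrix. This is the standard textbook algorithm, not Statsig's modified version; the function names and the penalty value `lam` are assumptions for illustration.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam, returning exactly 0.0 inside [-lam, lam]."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_from_covariance(cov_xx, cov_xy, lam, n_iter=200):
    """Coordinate descent for: min 0.5 * b' Sxx b - b' sxy + lam * sum(|b_j|).

    cov_xx: p x p covariance matrix of the features.
    cov_xy: length-p covariances between each feature and the outcome.
    """
    p = len(cov_xy)
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            if cov_xx[j][j] == 0:
                continue  # a constant feature carries no signal
            # Covariance of feature j with the residual of all other features.
            rho = cov_xy[j] - sum(cov_xx[j][k] * beta[k] for k in range(p) if k != j)
            beta[j] = soft_threshold(rho, lam) / cov_xx[j][j]
    return beta


# Two nearly collinear features (correlation 0.99) with unit variance.
beta = lasso_from_covariance(
    cov_xx=[[1.0, 0.99], [0.99, 1.0]],
    cov_xy=[1.0, 0.99],
    lam=0.05,
)
```

In this toy case the penalty keeps the first feature and sets the coefficient on its near-duplicate to exactly zero, which is the collinearity behavior the paragraph above refers to.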

4. Adjustments

With the regressions computed, another round of code generation takes the input variables and performs the linear adjustments—once again in SQL—for each unit and metric. These are combined with the original data to create an adjusted value with the same expectation as the original dataset, and equal or lower variance.
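The linear adjustment itself is small. A minimal sketch, assuming fitted coefficients `beta` and covariates centered on their means (the function name `adjust` and the toy data are illustrative, and this runs in Python rather than SQL):

```python
from statistics import mean, pvariance


def adjust(y, X, beta):
    """Return y_i - sum_j beta_j * (x_ij - mean_j) for every unit i.

    Centering each covariate on its mean is what keeps the adjusted values
    at the same average as the original outcome.
    """
    col_means = [mean(col) for col in zip(*X)]
    return [
        yi - sum(b * (xij - m) for b, xij, m in zip(beta, xi, col_means))
        for yi, xi in zip(y, X)
    ]


# Toy data: one covariate per unit and the observed metric.
X = [[1.0], [2.0], [3.0], [4.0]]
y = [1.0, 3.0, 2.0, 4.0]
beta = [0.8]  # cov(x, y) / var(x) for this toy data

y_adj = adjust(y, X, beta)
var_before = pvariance(y)
var_after = pvariance(y_adj)
```

On this toy data the mean stays at 2.5 while the variance drops from 1.25 to about 0.45. In a real experiment the coefficients would be fitted without regard to treatment assignment, so that the adjustment cannot bias the measured effect.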

Our primary design focus was keeping this from being a black box and being deliberate about how we approached the regression. That principle is why we held the launch until we had robust health checks and visibility into regression inputs, so that customers can keep us honest. We wanted to avoid hiding behind expertise or complexity, so we made our methodology and models clear and easy to weigh in on.

What to expect

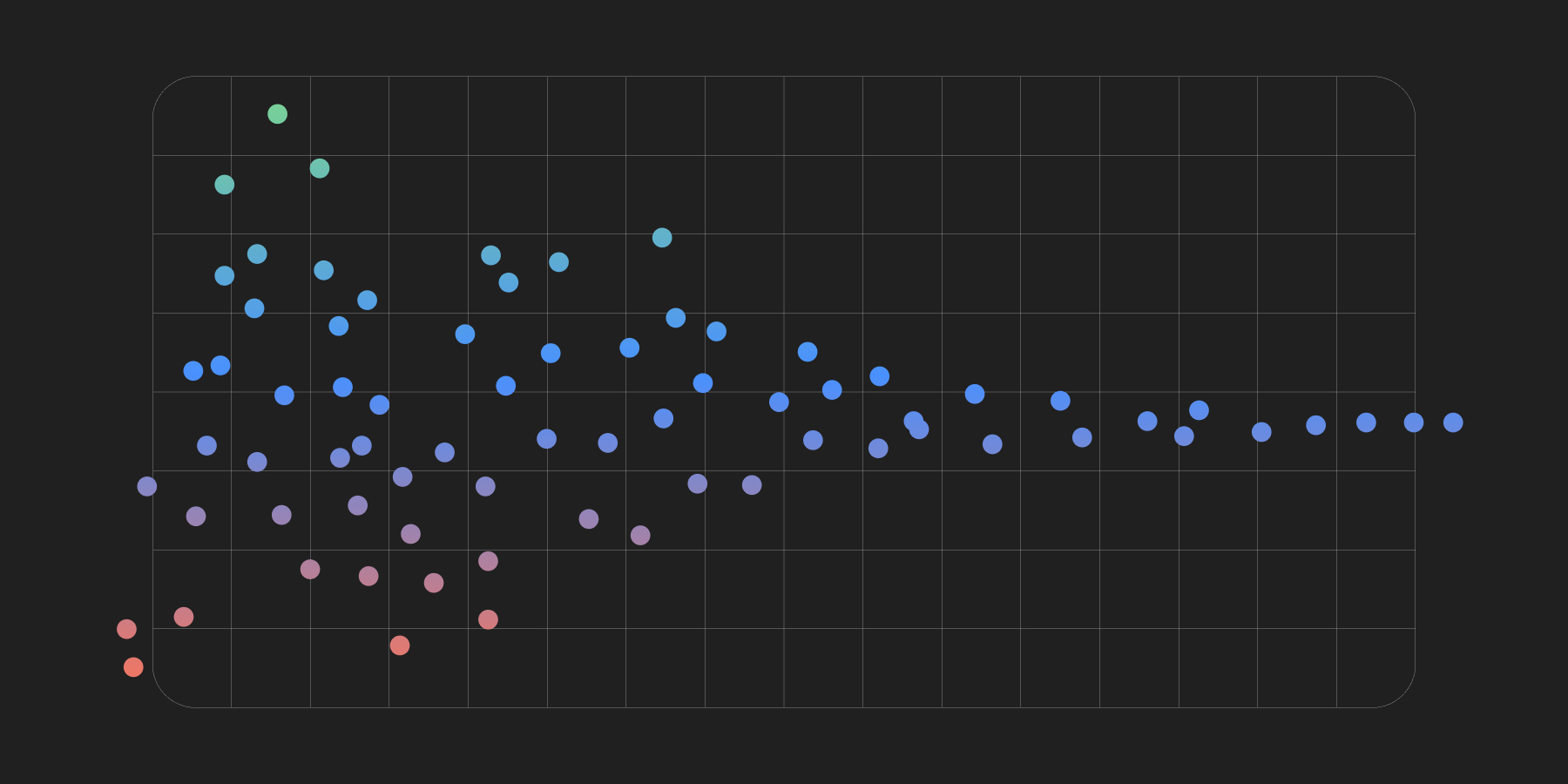

In preliminary testing, most customers saw a 10-20% decrease in experiment runtimes compared to CUPED; they also saw value in the feature importance analysis and being able to understand which factors were predictive of metric behavior.

For customers with no pre-experiment data (e.g. new user experiments), we have so far seen a 10-40% decrease in experiment runtime.
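For readers wondering how variance reduction relates to runtime, the standard two-sample approximation for required sample size gives a rough guide. Here n is the number of units per group, σ² the metric variance, δ the minimum detectable effect, and r the fraction of variance removed by adjustment; these symbols are introduced here for illustration.

```latex
n \approx \frac{2\,\left(z_{1-\alpha/2} + z_{1-\beta}\right)^2 \sigma^2}{\delta^2},
\qquad
\frac{n_{\text{adj}}}{n} = \frac{(1 - r)\,\sigma^2}{\sigma^2} = 1 - r
```

Under this approximation, removing 20% of the variance means roughly 20% fewer units are needed for the same power. That maps to a similar runtime saving only if exposures arrive at a roughly constant rate.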

The examples below were generated during testing with customer experiments, using existing covariates logged with exposures to supplement CUPED with CURE methodology:

| Experiment Profile | Metric Profile | CUPED Variance Reduction | CURE Variance Reduction | Covariates Used |
|---|---|---|---|---|
| Experiment 1: 4 million exposures, AI Product Company | Metric with sparse data | 0.5% | 18.5% | user tenure, region, platform, logged-in/logged-out |
| (Experiment 1) | Metric with high-coverage data | 40.5% | 41.2% | user tenure, region, platform, logged-in/logged-out |
| Experiment 2: 600,000 exposures, food delivery company, new user experiment | Metric with no pre-exposure data | 0% | 16.4% | region, browser, utm |

There’s a range here: the performance of any regression adjustment system is limited by the predictive power of the regression, so results depend on each customer’s data profile.

The best way to find out is to try it; talk to us to get set up on Statsig today!
