We all want to have statistically significant results from our experiments, but even more, we want to be confident that those results are real.

The Benjamini-Hochberg Procedure is now available on Statsig as a way to reduce your false positives.

The more hypotheses we test, the more likely we are to see statistically significant results happen by chance - even with no underlying effect. The Bonferroni Correction and the Benjamini-Hochberg Procedure are different techniques to reduce these false positives when doing multiple comparisons.
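As a rough illustration of how quickly this adds up, the sketch below computes the chance of seeing at least one false positive when none of the tested hypotheses has a real effect. It assumes the tests are independent and each uses the same significance level; the function name `prob_any_false_positive` is made up for this example.

```python
def prob_any_false_positive(alpha: float, num_tests: int) -> float:
    """Chance of at least one false positive across independent tests
    when there is no underlying effect in any of them."""
    return 1 - (1 - alpha) ** num_tests


for m in (1, 5, 10, 20):
    print(f"{m:>2} tests: {prob_any_false_positive(0.05, m):.1%}")
```

Under these assumptions, 10 tests at α = 0.05 already carry roughly a 40% chance of at least one false alarm.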

The Benjamini-Hochberg Procedure and the Bonferroni Correction both help keep you from launching when you shouldn't. The Benjamini-Hochberg Procedure helps most when you’re testing a large number of hypotheses and want a moderate reduction in false positives, whereas the Bonferroni Correction is more conservative and is most useful when a smaller number of hypotheses are being tested concurrently.

Bonferroni vs Benjamini-Hochberg

The fundamental tradeoff between no adjustment, the Benjamini-Hochberg Procedure, and the Bonferroni Correction is your risk tolerance for Type I and Type II errors.

| | Null Hypothesis is False | Null Hypothesis is True |
|---|---|---|
| Reject Null Hypothesis | Correct | Type I Error: False Alarm |
| Fail to Reject Null Hypothesis | Type II Error: Missed Detection | Correct |

A more in-depth explanation of Type I and Type II Errors can be found here.

Which is worse?

(Type I Error) I’m making unnecessary changes that don’t actually improve our product.

(Type II Error) I missed an opportunity to make our product better because I didn’t detect a difference in my experiment.

The answer is going to depend on the objective of your team, maturity of your product, and resources that you can devote to implementing and maintaining changes.

If there’s a large number of hypotheses I want to test, then using the Bonferroni Correction can decrease the power of my experiments very quickly. The Benjamini-Hochberg Procedure penalizes multiple comparisons less severely, which in turn means that you’re more likely to make Type I errors than if you were using the Bonferroni Correction.
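To make the difference in severity concrete, here is a small sketch comparing the per-comparison cutoffs. It assumes the standard forms of both corrections: Bonferroni compares every p-value against α divided by the number of comparisons, while Benjamini-Hochberg compares the k-th smallest p-value against α times k divided by the number of comparisons. The function names and the values of `m` are illustrative and are not part of Statsig.

```python
ALPHA = 0.05  # illustrative significance level / target FDR


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Bonferroni: every p-value is compared against the same cutoff, alpha / m."""
    return alpha / m


def bh_thresholds(alpha: float, m: int) -> list[float]:
    """Benjamini-Hochberg: the k-th smallest p-value is compared against alpha * k / m."""
    return [alpha * k / m for k in range(1, m + 1)]


for m in (2, 10, 50):
    bh = bh_thresholds(ALPHA, m)
    print(
        f"m={m:>2}  Bonferroni cutoff: {bonferroni_threshold(ALPHA, m):.4f}  "
        f"BH cutoffs: {bh[0]:.4f} to {bh[-1]:.4f}"
    )
```

The smallest Benjamini-Hochberg cutoff equals the Bonferroni cutoff, and every later rank gets a looser one, which is why the procedure gives up less power as the number of comparisons grows.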

The Bonferroni Correction controls Family-Wise Error Rate (FWER) while the Benjamini-Hochberg Procedure controls False Discovery Rate (FDR).

FWER = the probability of making at least one Type I error across all of the comparisons

FDR = the expected proportion of rejected null hypotheses that are actually true (that is, the share of your "significant" results that are false positives)

For each metric evaluation of one variant vs the control, we have:

| | Null Hypothesis is False | Null Hypothesis is True |
|---|---|---|
| Reject Null Hypothesis | | Type I Error: False Alarm |
| Fail to Reject Null Hypothesis | | |

In any online experiment, we’re likely to have more than one metric and more than one variant, so a single experiment contains many of these comparisons.

We generally recommend the Benjamini-Hochberg Procedure as a less severe measure than the Bonferroni Correction that still protects you from some amount of Type I errors. Whatever methodology you decide to use, you can evaluate your experimentation program based on how many changes you shipped based on experiment results and how those changes impacted your product (holdouts are a great way to do this!) to determine whether you need more or fewer controls to prevent false positives.

Try it with Statsig

Getting started in Statsig

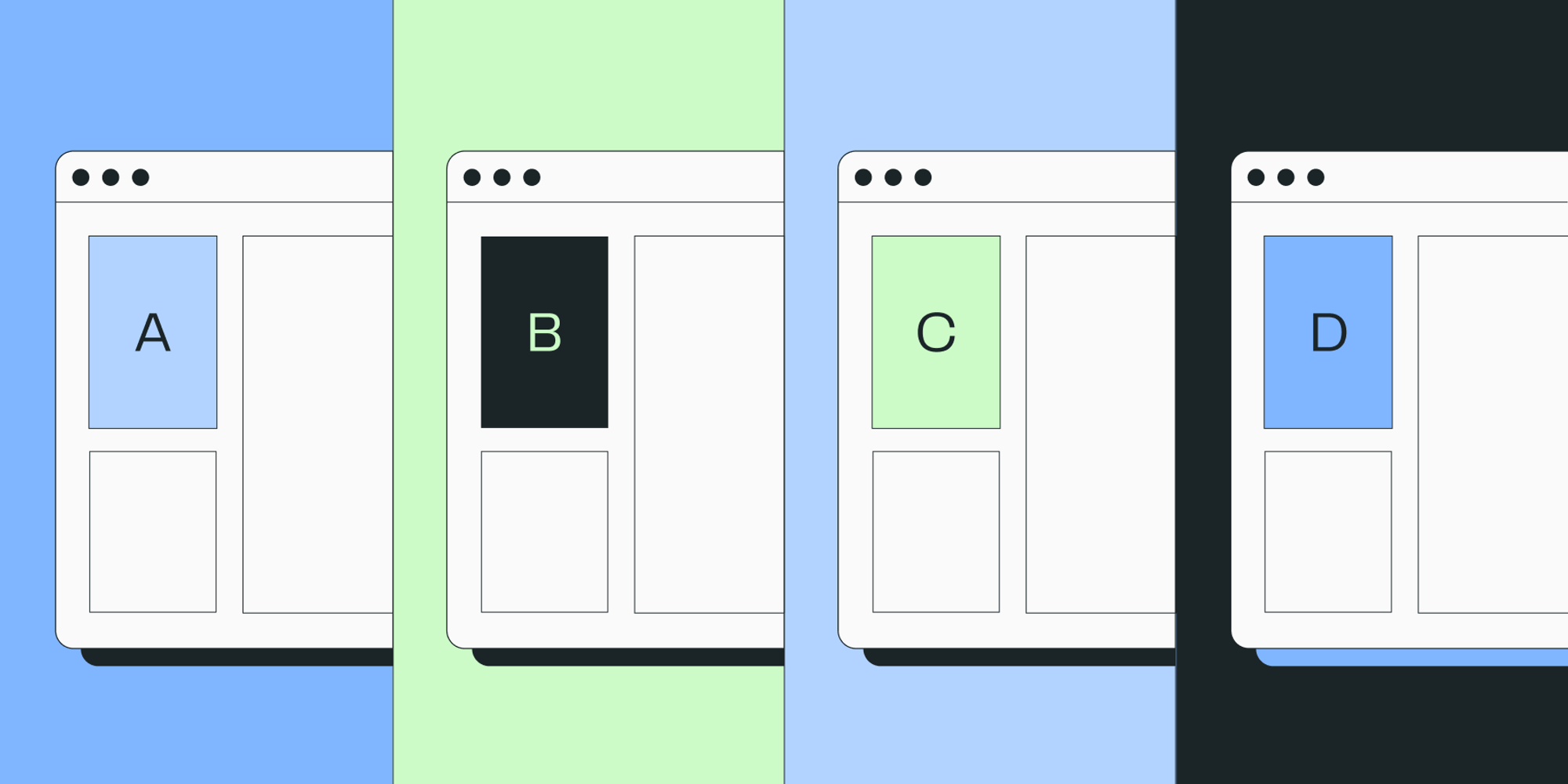

Under the advanced settings of any experiment on Statsig, you can select whether you want to use the Bonferroni Correction or the Benjamini-Hochberg Procedure.

The Benjamini-Hochberg Procedure Setting can be found in the Advanced Settings of the Experiment Settings page right beneath our Bonferroni Correction Settings.

This can also be configured in Experiment Policy for your organization - either enabling it as the default or requiring that it be used.

How do I decide # of metrics vs # of variants vs both?

Like with a Bonferroni Correction, when you apply the Benjamini-Hochberg Procedure in Statsig you can decide whether to apply the method per variant, per metric, or both. This is a decision that experimenters should be making with respect to penalizing distinct hypotheses.

Variants: If you’re using a correction, you should generally apply that correction per variant. Each variant is a distinct treatment for experiment subjects, and represents a distinct hypothesis.

Metrics: Different metrics might both be used as evidence for one hypothesis or each used to support different hypotheses. A good question to ask yourself: Would any of your measured metrics moving in a positive direction mean you want to ship the feature? If so, it’s a good idea to penalize your α for multiple metrics.

Methodology details

Benjamini-Hochberg procedure

We start by sorting the p-values in ascending order. For each p-value, we then calculate a threshold: the desired False Discovery Rate divided by the number of comparisons being evaluated, multiplied by the rank of that p-value in the ordered list. We then find the largest p-value that is less than its threshold, and that threshold becomes our new significance level (α). If no p-value is less than its threshold, we use the smallest threshold value.

For example, suppose you had the following sorted observed p-values with FDR ≤ 0.05:

| Observed p-value | Possible Thresholds | p-val < Threshold | Is it stat sig? |
|---|---|---|---|
| 0.010 | 0.05/4*1 = 0.0125 | true | yes |
| 0.031 | 0.05/4*2 = 0.0250 | false | yes |
| 0.032 | 0.05/4*3 = 0.0375 | true | yes |
| 0.120 | 0.05/4*4 = 0.0500 | false | no |

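This means that our adjusted α = 0.0375, the threshold of the largest p-value that falls below its own threshold (0.032). Every p-value below 0.0375 is then statistically significant, including 0.031, even though it missed its own threshold of 0.0250.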
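The steps above can be sketched in a few lines of Python. This is a minimal illustration of the procedure as described in this section, not Statsig's actual implementation; the function name `bh_adjusted_alpha` is hypothetical, and the strict "less than" comparison follows the wording here (many textbook statements of the procedure use "less than or equal to").

```python
def bh_adjusted_alpha(p_values: list[float], fdr: float = 0.05) -> float:
    """Return the adjusted significance level for a set of p-values.

    Sort the p-values, give the k-th smallest a threshold of fdr * k / m,
    and return the threshold belonging to the largest p-value that is below
    its own threshold. If none qualifies, return the smallest threshold.
    """
    m = len(p_values)
    if m == 0:
        raise ValueError("need at least one p-value")

    adjusted = fdr / m  # fallback: the smallest threshold
    for rank, p in enumerate(sorted(p_values), start=1):
        threshold = fdr * rank / m
        if p < threshold:
            adjusted = threshold  # later (larger) passing p-values overwrite earlier ones
    return adjusted


p_values = [0.010, 0.031, 0.032, 0.120]
alpha = bh_adjusted_alpha(p_values, fdr=0.05)
print(f"adjusted alpha = {alpha:.4f}")
print([p < alpha for p in p_values])
```

With the four p-values from the table, this gives an adjusted α of 0.0375 and marks the first three comparisons as statistically significant.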


Benjamini-Hochberg based on # of metrics, # of variants, and # of metrics and variants

When we apply the Benjamini-Hochberg procedure based on the number of metrics, we are controlling the FDR using the above method for each variant independent of the others. Similarly, when we apply the Benjamini-Hochberg procedure based on the number of variants, we control the FDR using the above method for each metric independent of the others. When we apply the Benjamini-Hochberg procedure based on number of metrics and variants, we control the FDR for the whole experiment by applying the above method for all the p-values for each metric and variant together.

For example, if you had the following observed p-values and are applying Benjamini-Hochberg based on the number of metrics for each variant with FDR ≤ 0.05 we get the following results:

| | Variant 1 vs Control | Variant 2 vs Control |
|---|---|---|
| Metric 1 | 0.043 | 0.129 |
| Metric 2 | 0.049 | 0.074 |
| Metric 3 | 0.042 | 0.005 |
| Metric 4 | 0.037 | 0.042 |
| Adjusted α | 0.05 | 0.0125 |

With the same base data, if we apply Benjamini-Hochberg based on the number of variants for each metric with FDR ≤ 0.05 we get the following results:

| | Variant 1 vs Control | Variant 2 vs Control | Adjusted α |
|---|---|---|---|
| Metric 1 | 0.043 | 0.129 | 0.025 |
| Metric 2 | 0.049 | 0.074 | 0.025 |
| Metric 3 | 0.042 | 0.005 | 0.05 |
| Metric 4 | 0.037 | 0.042 | 0.05 |

And, if we apply Benjamini-Hochberg based on the number of variants and metrics with FDR ≤ 0.05, we get the following results:

| | Variant 1 vs Control | Variant 2 vs Control |
|---|---|---|
| Metric 1 | 0.043 | 0.129 |
| Metric 2 | 0.049 | 0.074 |
| Metric 3 | 0.042 | 0.005 |
| Metric 4 | 0.037 | 0.042 |

Adjusted α = 0.00625
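The three groupings differ only in which p-values are pooled before the procedure runs. The sketch below applies the rule from the methodology section to the p-values in the tables above. It is an illustration under the assumptions stated in this article, not Statsig's code; the helper `bh_adjusted_alpha` and the dictionary layout are made up for the example.

```python
def bh_adjusted_alpha(p_values, fdr=0.05):
    """Threshold of the largest p-value below its own threshold (fdr * rank / m),
    or the smallest threshold if no p-value qualifies."""
    m = len(p_values)
    adjusted = fdr / m
    for rank, p in enumerate(sorted(p_values), start=1):
        threshold = fdr * rank / m
        if p < threshold:
            adjusted = threshold
    return adjusted


# Observed p-values: one row per metric, one entry per variant vs control.
observed = {
    "Metric 1": {"Variant 1": 0.043, "Variant 2": 0.129},
    "Metric 2": {"Variant 1": 0.049, "Variant 2": 0.074},
    "Metric 3": {"Variant 1": 0.042, "Variant 2": 0.005},
    "Metric 4": {"Variant 1": 0.037, "Variant 2": 0.042},
}
variants = ["Variant 1", "Variant 2"]

# Based on # of metrics: pool the metrics within each variant.
per_variant = {
    v: bh_adjusted_alpha([row[v] for row in observed.values()]) for v in variants
}

# Based on # of variants: pool the variants within each metric.
per_metric = {
    metric: bh_adjusted_alpha(list(row.values())) for metric, row in observed.items()
}

# Based on # of metrics and variants: pool every p-value in the experiment.
whole_experiment = bh_adjusted_alpha(
    [p for row in observed.values() for p in row.values()]
)

print("per variant:", per_variant)
print("per metric:", per_metric)
print("whole experiment:", whole_experiment)
```

Pooling by variant gives 0.05 for Variant 1 and 0.0125 for Variant 2, and pooling everything gives 0.00625. The more p-values that are pooled together, the smaller the lowest threshold becomes, so the broadest grouping is also the strictest.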
