If your entire team isn't part of the experimentation process, you're leaving opportunities on the table.

Tooling, statistical methodologies, and standardized processes are crucial to experimentation, but many companies overlook two other essentials: integrating the entire team into the experimentation process and committing to sharing learnings at scale.

Cutting corners upfront might let you run experiments faster out of the gate, but it undermines the ultimate goal of building an effective experimentation culture. Culture means everyone.

At some point, companies need to ask themselves:

How do takeaways from experiments disseminate throughout the organization?

How do teams celebrate and share their wins?

Are failures and learnings celebrated as much as experiments that positively impact metrics?

At the core of these questions is how people collaborate when experimenting, both within a team and across team and organizational lines.

Ultimately, experimentation is a team sport. Organizations benefit when cross-functional teams break down silos, have visibility into experimentation data, and work together to drive growth.

Statsig puts collaboration front and center

Statsig's collaboration philosophy is simple: "Every phase of experimentation should be collaborative."

Whether it's inviting colleagues to review your experiment setup before going live, or collaboratively building a source-of-truth experiment summary in the Statsig Console, we've woven collaboration into many aspects of Statsig's core workflows.

Below, we highlight a few ways Statsig makes collaboration an easy part of the experimentation process:

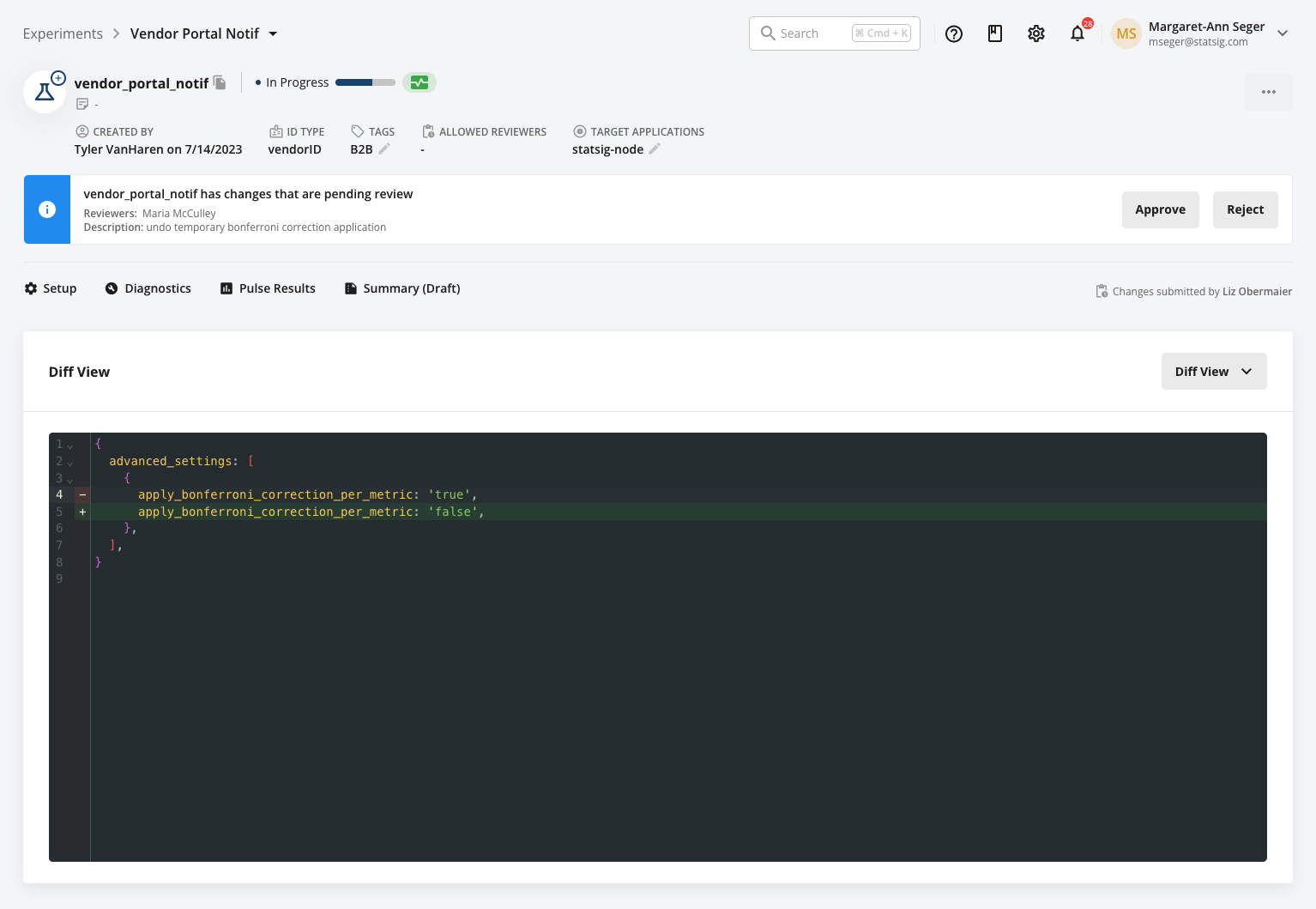

1. Reviews: Bring the whole team into the experiment setup

Statsig offers a review system that enables experiment creators to request feedback and approval from other team members or approved review groups. Reviews can be required for any type of entity change.

Curious? You can find out more about reviews on our reviews documentation page.

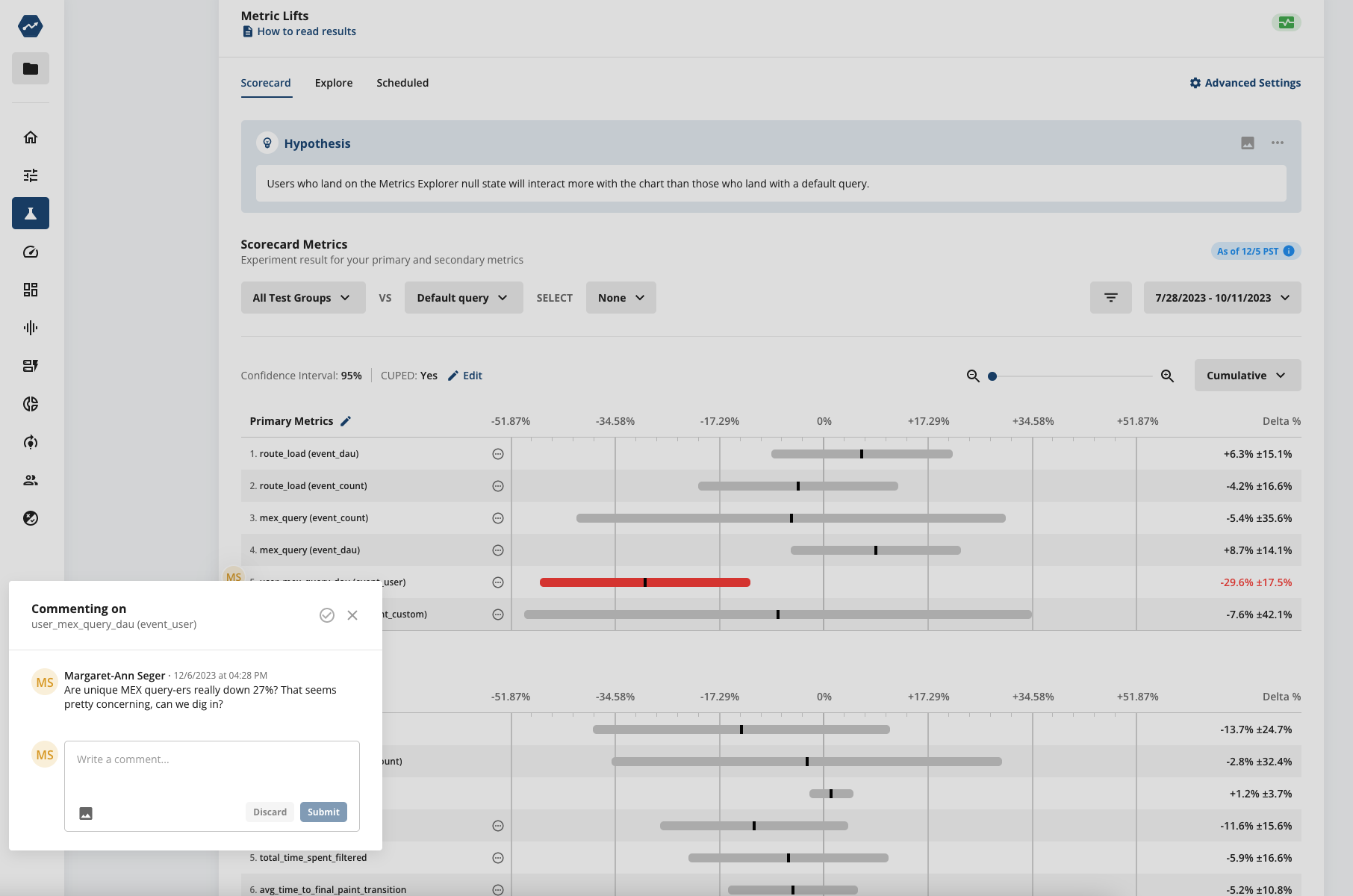

2. Discussions and comments: Enable cross-functional collaboration

Statsig's Discussion Panel enables teammates looking at an experiment for the first time to obtain a complete history of changes and to view and participate in any discussions surrounding the experiment. Team members can leave comments inline within the experiment results to discuss specific metric impacts.

Statsig was purpose-built from day one to ensure every team member can access the platform, so we do not charge based on seats. There is no downside to having the entire team join the platform to participate in the conversation!

Want to learn more? Watch the Statsig Discussions video.


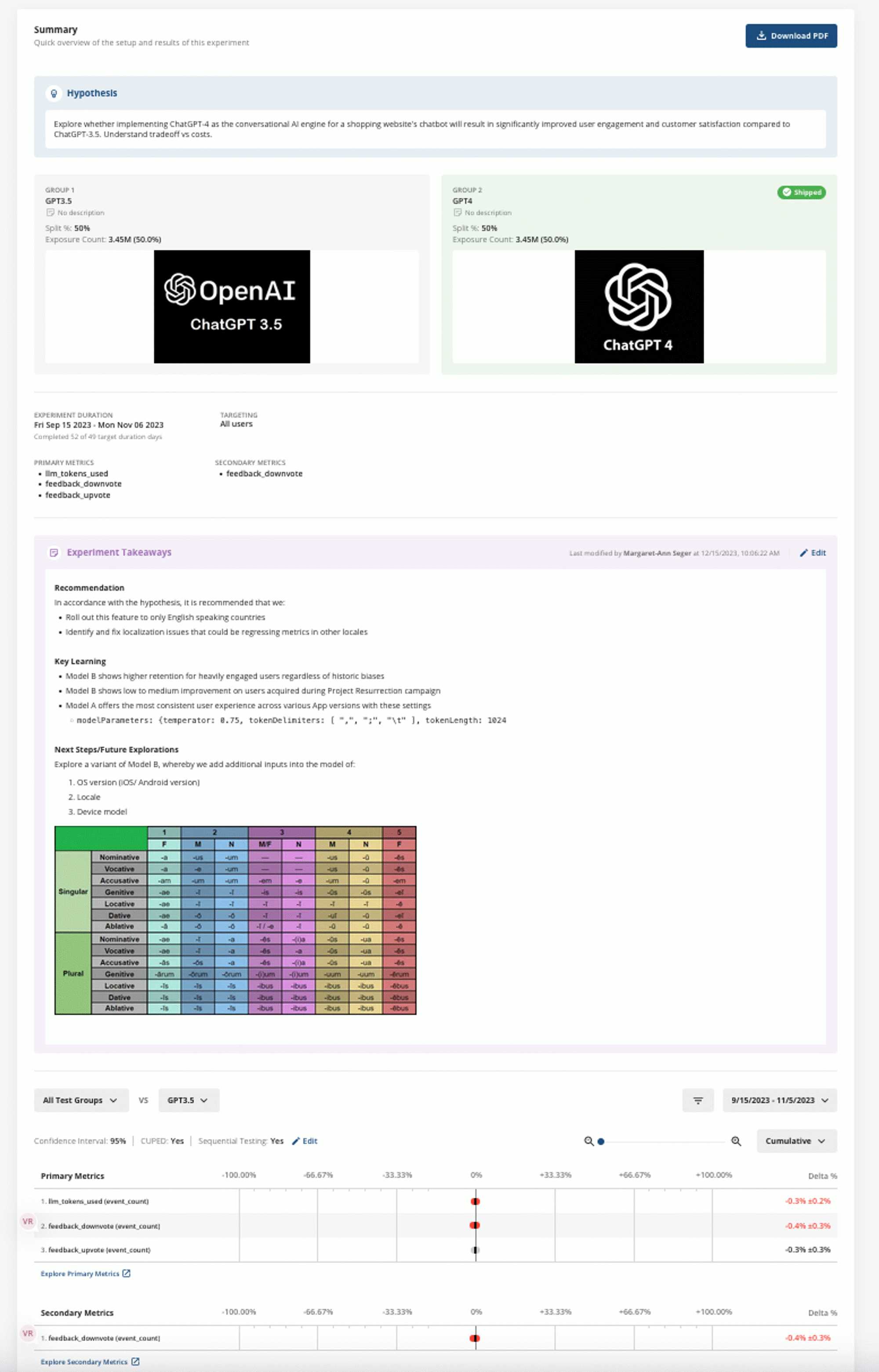

3. Interactive experiment summaries: Ensure experiment learnings stand the test of time

The Experiment Summary tab stores all essential details about an experiment's setup, results, discussions, and action items.

Teams can begin recording observations and learnings in the Summary tab while the experiment is running. When they conduct their final experiment review, they can add the final takeaways and action items directly in the summary for posterity.

Finally, you can export your summary as a PDF to share the learnings more broadly throughout the organization.

In our recent fireside chat, Joel Witten, Head of Data at Rec Room, shared how they have instituted weekly experiment review meetings. Everyone in the organization who has conducted an A/B test is encouraged to attend these meetings to present their findings and engage in discussions.

Joel highlighted the widespread interest in learning about experimentation outcomes. He emphasized that counter-intuitive results are more common than expected, often leading to spirited discussions in the review forum. This culture of shared learning and enthusiasm drives the continuous cycle of testing and growth.

Scale the culture with Statsig

When companies transform their experimentation (Notion increased its experimentation 30x, and Rec Room went from near-zero to 150 experiments a year), it's not because of a single groundbreaking experiment or the genius of one individual.

Companies using Statsig grow because of a mindset of testing every rollout, learning from its impact on metrics, and collaborating daily across cross-functional teams. That is what inspires us to build collaboration features like these into the product.

Statsig customers can use all of these features right away to accelerate learning and make organization-wide experimentation and data-driven decision-making a reality.
