Experimentation is a powerful tool, and while it’s very easy to do, it’s also very easy to mess up.

This is why health checks are a critical part of an experimentation platform: the more proactively you're alerted to potential issues, the less likely you are to make a bad ship decision or, worse in this case, to learn the wrong lesson from the experiment.

The homepage experiment

I was pretty sure that the copy on the Statsig homepage was a little clunky and decided to sneak in a test. This was an evenly split A/B/C test that tried different CTA text.

I peeked after a few days with sequential testing applied. The results showed a high observed lift, but the test did not yet have enough power for that lift to be statistically significant. My initial takeaway was that we needed to run the experiment for longer.

The issue

Had I waited and let this run, we would likely have ended up seeing a “Statistically Significant, Positive Result” for these simple conversion metrics. I now know that these results were actually driven by bad input data.

I caught this because Statsig automatically checked for outliers influencing my results. In this case, when we set up our homepage project, we had defined the click metric without winsorization, so a few outlier users dominated the metric value.
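To make the effect concrete, here is a minimal sketch of upper-tail winsorization on a per-user click metric. The data is made up for illustration, and the function name `winsorize_upper` and the 95th-percentile threshold are assumptions; this is not Statsig's implementation, just the general technique.

```python
from statistics import mean

def winsorize_upper(values, pct=95):
    """Clamp every value above the pct-th percentile (nearest rank) to that percentile."""
    if not values:
        return []
    ordered = sorted(values)
    # Nearest-rank percentile, computed with integer math to avoid float rounding.
    rank = (pct * len(ordered) + 99) // 100
    cap = ordered[max(rank, 1) - 1]
    return [min(v, cap) for v in values]

# Hypothetical per-user click counts: 20 users per group.
control = [0] * 15 + [1] * 5
treatment = [0] * 15 + [1] * 4 + [500]  # one outlier user with 500 clicks

raw_control, raw_treatment = mean(control), mean(treatment)
wins_control = mean(winsorize_upper(control))
wins_treatment = mean(winsorize_upper(treatment))

print(raw_control, raw_treatment)    # the outlier inflates the treatment mean
print(wins_control, wins_treatment)  # after winsorization the groups match
```

In this toy data, a single user is responsible for the entire apparent lift: once that user's count is clamped, the two groups have the same mean.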

Following up

I was able to quickly use Metrics Explorer to confirm my hypothesis about what had happened here. Using the “group by experiment” functionality, it was easy to see that, on different days, two of the branches had a huge number of clicks.

Drilling into this by stable ID, I identified the two users who caused the erroneous data and ended up running an explore query to filter these users out.

Solutions

There were a few ways to approach this problem:

Add or adjust winsorization to limit the influence of these outlier users, or cap the metric at a reasonable value, such as 5 signup clicks per day

Use an explore query or qualifying event filter to eliminate these two users from the analysis

Use an event-user metric instead

Use Statsig’s recently released Bot Detection
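As a rough illustration of the first two options, the sketch below applies a per-user daily cap and an exclusion list to raw click events. The event shape `(stable_id, day, variant)`, the function name `clean_clicks`, and the sample data are all hypothetical; in Statsig itself these would be configured through metric settings or an explore query rather than hand-written code.

```python
from collections import defaultdict

def clean_clicks(events, daily_cap=5, excluded_ids=frozenset()):
    """Sum clicks per user within each variant, capping each user-day at
    daily_cap and dropping any user listed in excluded_ids.

    events: iterable of (stable_id, day, variant) tuples, one per click.
    """
    per_user_day = defaultdict(int)
    for stable_id, day, variant in events:
        if stable_id in excluded_ids:
            continue  # filter approach: remove the user entirely
        per_user_day[(variant, stable_id, day)] += 1

    totals = defaultdict(lambda: defaultdict(int))
    for (variant, stable_id, _day), clicks in per_user_day.items():
        # cap approach: keep the user but bound their daily contribution
        totals[variant][stable_id] += min(clicks, daily_cap)
    return {variant: dict(users) for variant, users in totals.items()}

# Hypothetical events: one user in variant B clicks 400 times on a single day.
events = (
    [("u1", "d1", "A")]
    + [("bot", "d1", "B")] * 400
    + [("bot", "d2", "B")] * 3
    + [("u2", "d1", "B")] * 2
)

capped = clean_clicks(events)
filtered = clean_clicks(events, excluded_ids={"bot"})
print(capped)
print(filtered)
```

Capping keeps the suspicious user in the analysis with a bounded contribution, while filtering removes them altogether, which is the safer choice when their behavior looks wrong across many metrics.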

In this case, I chose to make a filter query since these users had odd behavior across a number of metrics, and I was confident that their behavior wasn’t caused by the experiment arms. This allowed us to run the experiment and get results that weren’t tainted by the bad users. You can check out our website to see the current variant.

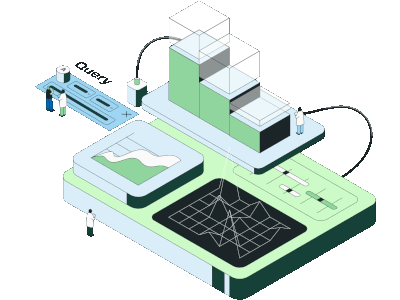


Takeaways

At Statsig, we use our own product for internal infra experimentation as well as client-side releases and homepage web optimization. This has really helped us find opportunities, like this one, to prevent issues that users might run into.

It’s also been a big factor in our investment in analytics. In particular, being able to analyze the root causes of your experiment results is an incredibly powerful way to understand what happened under the hood.
