At Statsig, we’re constantly finding ways to drive down what we call config propagation latency.

Propagation latency is defined as the time it takes for a change made in the Console to be reflected by the config checks you issue on your frontend or backend systems with our SDKs.

Recently, we made a significant improvement to how we generate the config definitions consumed by Server SDKs when using target apps. We now pre-filter configurations by their relevance to the target app before using them to generate the config definitions.

The result? Faster Node.js event loop times in our internal definition generation service and improved performance for companies adopting target apps.

What are target apps?

Target apps are an advanced feature that helps manage which configurations are delivered to specific SDK instances or services.

By leveraging this feature, teams can ensure that only the relevant gates, experiments, and configs are sent to their apps, improving both performance and security. This feature is essential for companies operating at scale that have multiple apps and services that rely on different configurations.

The benefits of using target apps are straightforward:

Performance: By filtering out irrelevant configurations, the payload sent to each SDK instance is smaller, leading to faster initialization times and lower memory usage.

Security: This approach prevents sensitive configurations, such as client-side gates and experiments, from being exposed to inappropriate SDK instances (e.g., those using client keys, which can be easily extracted from an app).

The change

The optimization is centered around a new in-memory cache that pre-filters configurations based on the target app. Here's how it works:

When generating configurations for an app, we now build and reference a mapping between configurations and target apps. If a configuration is irrelevant to the target app, it’s excluded from the configs considered when generating the payload.
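To make the idea concrete, here is a minimal sketch of such a mapping. It is not our actual implementation: the `Config` shape, the `targetApps` field, and both function names are hypothetical and chosen only for illustration. The index is built once, so each payload generation starts from the configs tagged for that app instead of scanning everything.

```typescript
// Hypothetical shapes: the real data model is not shown in this post.
type ConfigType = "gate" | "dynamic_config" | "experiment";

interface Config {
  name: string;
  type: ConfigType;
  targetApps: string[]; // target apps this config is tagged with
}

// Build the target app -> configs index once, up front.
function buildTargetAppIndex(configs: Config[]): Map<string, Config[]> {
  const index = new Map<string, Config[]>();
  for (const config of configs) {
    for (const app of config.targetApps) {
      const bucket = index.get(app);
      if (bucket === undefined) {
        index.set(app, [config]);
      } else {
        bucket.push(config);
      }
    }
  }
  return index;
}

// Pre-filter: only configs tagged for this target app are considered.
function configsForTargetApp(
  index: Map<string, Config[]>,
  targetApp: string,
): Config[] {
  return index.get(targetApp) ?? [];
}

// Usage with made-up configs.
const allConfigs: Config[] = [
  { name: "checkout_gate", type: "gate", targetApps: ["web"] },
  { name: "ranking_exp", type: "experiment", targetApps: ["backend"] },
  { name: "shared_cfg", type: "dynamic_config", targetApps: ["web", "backend"] },
];
const targetAppIndex = buildTargetAppIndex(allConfigs);
const webConfigNames = configsForTargetApp(targetAppIndex, "web").map((c) => c.name);
```

In this sketch, `webConfigNames` contains `checkout_gate` and `shared_cfg`, while `ranking_exp` never enters the set of configs considered for the `web` payload.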

This process builds a dependency graph of the entities referenced by each configuration, ensuring that all relevant entities (e.g., gates, dynamic configs, experiments) are included. For example, if you tag an experiment with a target app, and that experiment is in a holdout or uses a targeting gate, the holdout and the targeting gate also need to be included in the dependency graph for that target app, even if they are not tagged themselves.
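The dependency step can be sketched as a graph traversal. This is an illustration under assumptions, not the code running in our service: the `Entity` shape, the `dependsOn` field, and `resolveForTargetApp` are hypothetical names. The traversal starts from the entities tagged with the target app and follows references until nothing new is found.

```typescript
// Hypothetical shape: each entity lists the names of the entities it
// references (e.g., an experiment referencing its holdout and targeting gate).
interface Entity {
  name: string;
  targetApps: string[];
  dependsOn: string[];
}

// Return the names of all entities needed by a target app: the tagged
// entities plus everything they reference, directly or transitively.
function resolveForTargetApp(entities: Entity[], targetApp: string): Set<string> {
  const byName = new Map<string, Entity>();
  for (const entity of entities) {
    byName.set(entity.name, entity);
  }

  const included = new Set<string>();
  // Seed the traversal with entities explicitly tagged for this app.
  const stack = entities
    .filter((entity) => entity.targetApps.includes(targetApp))
    .map((entity) => entity.name);

  while (stack.length > 0) {
    const name = stack.pop() as string;
    const entity = byName.get(name);
    // Skip unknown references and entities that were already visited.
    if (entity === undefined || included.has(name)) continue;
    included.add(name);
    for (const dep of entity.dependsOn) {
      stack.push(dep);
    }
  }
  return included;
}

// Usage with made-up entities: only the experiment is tagged "backend".
const entities: Entity[] = [
  { name: "pricing_exp", targetApps: ["backend"], dependsOn: ["global_holdout", "employee_gate"] },
  { name: "global_holdout", targetApps: [], dependsOn: [] },
  { name: "employee_gate", targetApps: [], dependsOn: [] },
  { name: "web_banner_gate", targetApps: ["web"], dependsOn: [] },
];
const backendEntities = resolveForTargetApp(entities, "backend");
```

Here `backendEntities` holds `pricing_exp`, `global_holdout`, and `employee_gate`. The holdout and the gate are pulled in through the experiment's references, and `web_banner_gate` is left out. The visited check also keeps the traversal from looping if two entities reference each other.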

The in-memory store tracks configurations and their relationships to target apps, ensuring only the necessary configurations are retrieved and delivered.

The impact

One of our major customers uses target apps extensively, tagging many of their 16,000 (!) configs to specific apps and services.

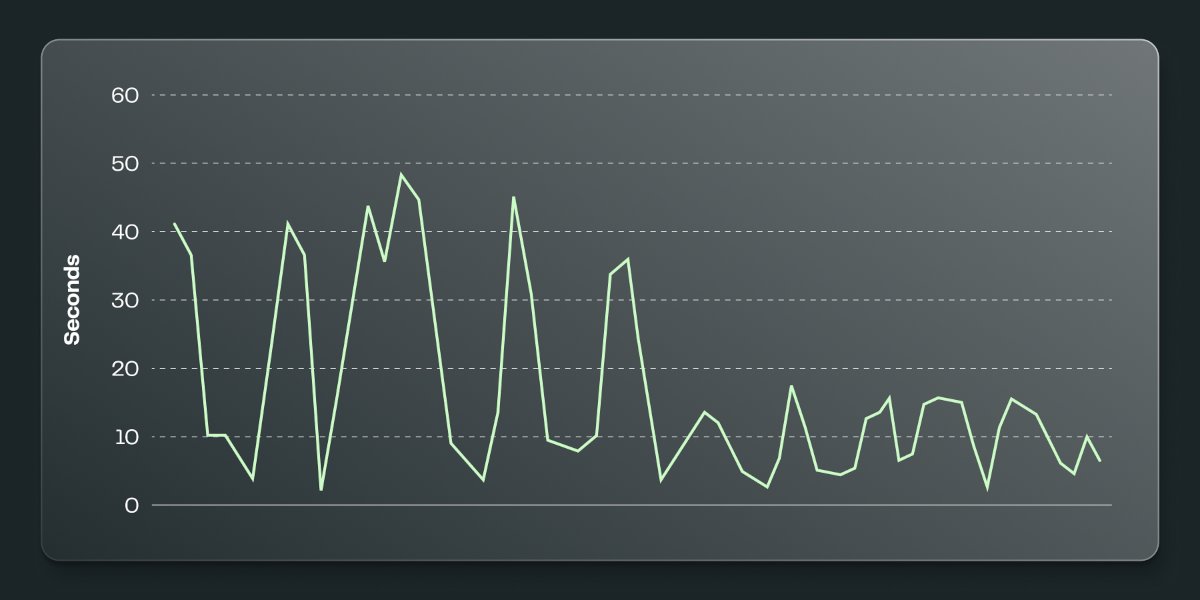

Before this optimization, generating their config payload took around 30 seconds (measured roughly by our internal service’s Node.js event loop time). With the pre-filtering in place, we’ve reduced the event loop time by 66%, bringing it down to under 10 seconds.

This performance boost translates directly into lower config propagation latency: configuration changes reach their intended targets faster, which improves the customer's overall system responsiveness.

Target apps are a powerful tool for optimizing configuration delivery in large-scale environments. Whether you’re focused on performance, security, or both, leveraging target apps correctly can make a significant impact on your system’s efficiency.

As your integration with Statsig scales, consider adopting target apps to improve performance.
