How to pick metrics that make or break your experiments (including dos and don’ts)

Your experiments are only as good as the metrics you choose.

In fact, the wrong metrics can not only distort how you read your results but also derail your entire strategy. If you’re not deliberate in selecting the right metrics, you can easily be led in the wrong direction. Below, I cover three steps for selecting your metrics, plus an at-a-glance list of dos and don’ts.

1. Hypothesis first, metrics second

Every successful experiment hinges on a clear, well-defined hypothesis. But here’s the harsh truth: if your metrics don’t tie directly to that hypothesis, your experiment can’t confirm or refute it, no matter how clean the data is.

Pinpoint the immediate impact: What’s the first, most direct signal that your change is working? This is your primary metric: the one that captures the behavioral change you’re directly driving (for example, the completion rate of a redesigned sign-up form). Get this wrong, and everything else falls apart.

Connect to the bigger picture: Your experiment isn’t just about shifting numbers; it’s about achieving a meaningful business outcome, such as higher retention or revenue. If you can’t link your primary metric to a tangible business goal, stop and reassess. A metric that doesn’t serve your broader objectives is worse than useless: it can push you to optimize for the wrong thing.
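One way to enforce “hypothesis first, metrics second” is to write the plan down before launch and refuse to start until every link is filled in. The sketch below is a hypothetical structure, not a standard tool. `MetricPlan`, its fields, and the `problems()` check are names invented for illustration, and the example hypothesis and metrics are made up.

```python
from dataclasses import dataclass, field


@dataclass
class MetricPlan:
    """Hypothetical record that ties one hypothesis to the metrics that test it."""

    hypothesis: str
    primary_metric: str   # the direct behavioral signal the change targets
    business_metric: str  # the outcome the primary metric is expected to move
    counter_metrics: list[str] = field(default_factory=list)
    secondary_metrics: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return the reasons this plan isn't ready; an empty list means it passes."""
        issues = []
        if not self.hypothesis.strip():
            issues.append("missing hypothesis")
        if not self.primary_metric.strip():
            issues.append("missing primary metric")
        if not self.business_metric.strip():
            issues.append("primary metric is not linked to a business metric")
        return issues


# Illustrative plan: every required link is present, so no problems are reported.
plan = MetricPlan(
    hypothesis="A shorter sign-up form increases completed sign-ups",
    primary_metric="sign-up completion rate",
    business_metric="users still active after 30 days",
)
print(plan.problems())  # []
```

The check is deliberately simple. Its value is forcing the team to name the business metric before any data comes in, rather than hunting for one afterward.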

2. Don’t settle for surface-level insights

Even if you nail your primary and business metrics, your job isn’t done. If you’re not thinking about the broader implications of your experiment, you’re setting yourself up for a rude awakening.

Preempt the pitfalls: Every change you make comes with potential downsides. A change that lifts sign-ups, for instance, might also slow page loads or hurt retention. If you’re not proactively tracking counter-metrics (often called guardrail metrics) to catch these negative effects, you’re flying blind, and blind decisions in experimentation can lead to costly mistakes.
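To make counter-metrics actionable, it helps to agree up front on how much harm each one can tolerate. Below is a minimal sketch assuming you already have per-variant means. The function name `guardrail_breaches`, the 5% tolerances, and the numbers are hypothetical. It compares point estimates only. A production check would also ask whether the harm is statistically distinguishable from noise, for example with a confidence interval.

```python
def guardrail_breaches(control, treatment, tolerance, lower_is_better=frozenset()):
    """Return counter-metrics whose relative change is worse than tolerated.

    control, treatment: metric name -> observed mean in each variant
    tolerance: metric name -> largest acceptable relative harm (0.05 means 5%)
    lower_is_better: names of metrics where an increase is bad (e.g. load time)
    """
    breaches = {}
    for name, max_harm in tolerance.items():
        change = (treatment[name] - control[name]) / control[name]
        # Express the change as "harm" so one comparison works for both directions.
        harm = change if name in lower_is_better else -change
        if harm > max_harm:
            breaches[name] = round(change, 4)
    return breaches


# Hypothetical numbers: retention dips 2.5% (within tolerance), load time rises 10%.
control = {"retention_7d": 0.40, "page_load_ms": 1200.0}
treatment = {"retention_7d": 0.39, "page_load_ms": 1320.0}
tolerance = {"retention_7d": 0.05, "page_load_ms": 0.05}
print(guardrail_breaches(control, treatment, tolerance, lower_is_better={"page_load_ms"}))
# {'page_load_ms': 0.1}
```

Writing the tolerances down before launch matters as much as the code. It keeps the team from deciding after the fact that a regression is “acceptable.”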

Dig deeper with explanatory metrics: Your primary metric might show a result, but if you don’t understand what drove it, you’re missing the point. Secondary metrics aren’t optional; they’re essential for understanding what’s happening beneath the surface.
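A common way to get explanatory metrics is to decompose the primary metric into factors that multiply back to it. Revenue per visitor, for example, equals conversion rate times average order value. The sketch below assumes that decomposition, and all of its visitor, order, and revenue figures are hypothetical. In this example, a nearly flat top-line result hides two large movements that partly cancel.

```python
def decompose(visitors: int, orders: int, revenue: float) -> dict[str, float]:
    """Split revenue per visitor into conversion rate x average order value."""
    return {
        "revenue_per_visitor": revenue / visitors,
        "conversion_rate": orders / visitors,
        "avg_order_value": revenue / orders,
    }


def relative_changes(control: dict[str, float], treatment: dict[str, float]) -> dict[str, float]:
    """Relative change of each metric from control to treatment."""
    return {name: (treatment[name] - control[name]) / control[name] for name in control}


# Hypothetical results for two equally sized variants.
control = decompose(visitors=10_000, orders=500, revenue=25_000.0)
treatment = decompose(visitors=10_000, orders=550, revenue=25_300.0)
for name, change in relative_changes(control, treatment).items():
    print(f"{name}: {change:+.1%}")
# revenue_per_visitor: +1.2%
# conversion_rate: +10.0%
# avg_order_value: -8.0%
```

Because the factors multiply, the changes compound: 1.10 × 0.92 ≈ 1.012. The +1.2% headline is really “more buyers, smaller baskets,” which is the kind of insight the primary metric alone would hide.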

Seize every insight: An experiment is more than a pass/fail test. It’s an opportunity to learn about your users, your product, and your business. If you’re not extracting every insight your data can reliably support, you’re wasting one of the most powerful tools at your disposal.

3. Sanity check or risk everything

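Before you declare victory, take a step back and make sure your metrics actually make sense. Did users split between variants in the ratio you intended? Are the values in a plausible range? Do your primary, counter, and secondary metrics tell a consistent story? This isn’t a formality; it’s your last line of defense against making a bad decision based on faulty assumptions.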
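One widely used sanity check is the sample ratio mismatch (SRM) test. If you intended a 50/50 split but the observed counts are far from it, something upstream (assignment, logging, or filtering) is likely broken, and no metric from that experiment can be trusted yet. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom, implemented with only the standard library. The function name, the user counts, and the 0.001 alert threshold are illustrative assumptions, though many teams use a similarly strict cutoff.

```python
import math


def srm_p_value(control_users: int, treatment_users: int, expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-variant sample ratio mismatch."""
    total = control_users + treatment_users
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (treatment_users - expected_treatment) ** 2 / expected_treatment)
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(50_000, 50_000))  # 1.0: counts match the intended split exactly
print(srm_p_value(50_000, 48_800))  # well below 0.001: investigate before reading any metric
```

A 1.2% imbalance looks harmless by eye, but with this many users it is extremely unlikely under a true 50/50 split. Trusting your eye instead of a test is exactly the sort of faulty assumption this step is meant to catch.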

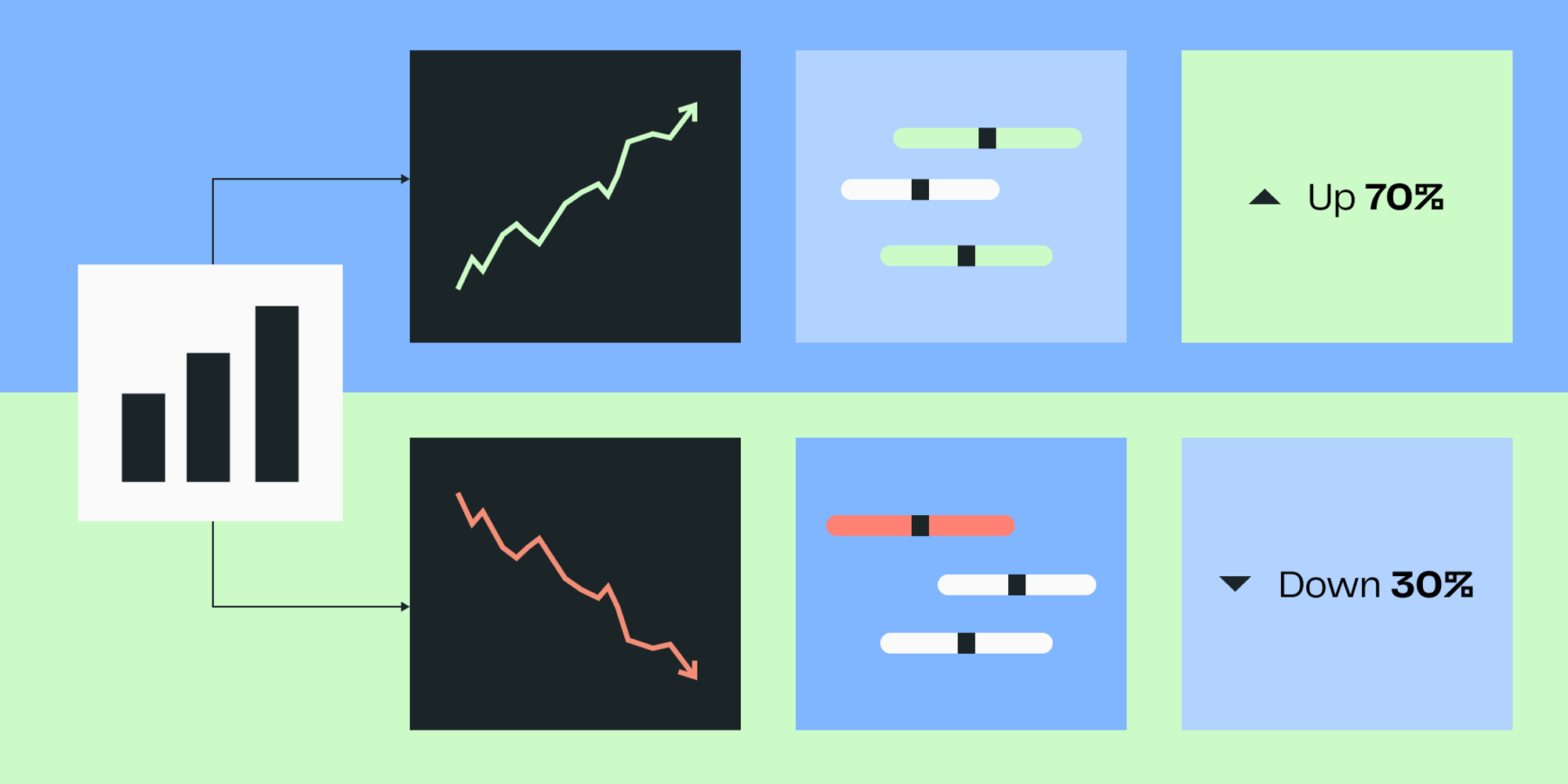

The dos and don’ts of metric selection

Do:

Have a razor-sharp focus on one primary behavioral metric and a clearly aligned business metric.

Anticipate and measure the potential negative consequences of your changes, because almost every change carries some.

Use secondary metrics to fill in the gaps in your understanding. Without them, you’re operating in the dark.

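Ensure your experiment has enough statistical power (a large enough sample) to detect the smallest effect you care about. An underpowered test can easily miss a real effect, leaving you with inconclusive results and wasted time.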
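To check power before launch, you can estimate how many users each variant needs. The sketch below uses one common normal-approximation formula for a two-sided, two-proportion z-test with unpooled variance: n = (z₁₋α/₂ + z_power)² × (p₁(1 − p₁) + p₂(1 − p₂)) / (p₂ − p₁)². The function name, the 5% baseline conversion rate, and the 10% relative lift are hypothetical inputs. Dedicated calculators may use slightly different formulas and give slightly different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate: float, min_detectable_lift: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variant for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)  # lift is relative, e.g. 0.10 = +10%
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


n = sample_size_per_arm(baseline_rate=0.05, min_detectable_lift=0.10)
print(n)  # about 31,000 users per variant
```

Halving the detectable lift roughly quadruples the required sample, which is why deciding on the smallest effect worth detecting belongs in planning, not after the fact.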

Don’t:

Reuse the same business metric for every experiment by default. If it doesn’t align with the specific goal of that experiment, it’s irrelevant.

Overcomplicate your analysis with a laundry list of metrics. Clarity and focus are your allies; distraction is your enemy.

Over-interpret secondary data. If a result isn’t part of your primary hypothesis, treat it as a lead for a future experiment rather than a conclusion, and don’t let it steer your decision.
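The more secondary metrics you check, the more of them will cross p < 0.05 by chance alone. That is one concrete reason a laundry list of metrics misleads. One standard guard is the Holm-Bonferroni correction, which is swapped in here as an illustration; the dos and don’ts above don’t name a specific method. The sketch below implements the step-down procedure. The metric names and p-values are hypothetical.

```python
def holm_significant(p_values: dict[str, float], alpha: float = 0.05) -> set[str]:
    """Holm-Bonferroni step-down: metrics that stay significant across all tests."""
    ordered = sorted(p_values.items(), key=lambda item: item[1])  # smallest p first
    m = len(ordered)
    significant = set()
    for rank, (name, p) in enumerate(ordered):
        # The k-th smallest p-value (k = rank + 1) is compared with alpha / (m - k + 1).
        if p <= alpha / (m - rank):
            significant.add(name)
        else:
            break  # once one test fails, every larger p-value fails too
    return significant


# Hypothetical secondary metrics: three pass p < 0.05 on their own.
p_values = {"clicks_per_session": 0.004, "time_on_page": 0.03, "scroll_depth": 0.04, "shares": 0.2}
print(sorted(holm_significant(p_values)))  # ['clicks_per_session']
```

After correction, only one of the three “significant” secondary results holds up. The other two are precisely the kind of data worth noting as leads but not acting on.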

Here’s the truth: if you’re not intentional and ruthless about the metrics you choose, your experiments could lead you to the wrong conclusions—and that could be the beginning of the end for your strategy.

Get started now!