As an outsider to the SEO world, I'd always seen it as a field of convention: add your meta descriptions, set your canonicals, write something novel, and the traffic will come.

It's always tempting to accept simplifying explanations of how any system works, but running SEO that way goes against a fundamental value at Statsig: Don't mistake motion for progress.

Certainly, all of the above (metas, canonicals, novel content) are best practices. But if they're well-known principles, can they really set you apart and make SEO a differentiator for your business? Finding out means testing, and the idea of A/B testing SEO is a bit scary. You're interacting with an opaque external force (the all-knowing algorithm) that is the judge, jury, and executioner of your content's success.

When the algorithm and its many rules decide the outcome, how can you A/B test the way you normally would?

This is a difficult tension, but a resolvable one. With careful experimental design and world-class tools, we can play by the algorithm's rules, get powerful, data-driven signal on the changes we make, and make SEO A/B testing not just possible, but trivial.

Challenges of SEO A/B testing

SEO experiments tend to follow a common design. To understand why they're designed that way, it helps to first look at the constraints of SEO A/B testing.

First, and perhaps obviously, we have to follow the rules of the algorithm.

Because Google recrawls and reindexes content only periodically, we can't expect our changes to be picked up on a short timescale. Most A/B tests randomize per user: user one sees variant A, user two sees variant B, and their metrics are measured over time to determine the better variant.

We can't do that here. Since the vast majority of SEO experiments take days or longer to take effect, we can't serve Googlebot a different variant on each visit, or our results will be hopelessly garbled.

We need to randomize on something other than the user. The traditional approach in a situation like this is a switchback test. A switchback test alternates treatments over time (for example, a different variant each day or week) and measures the effect based on which treatment was live in each period.

While switchbacks work well in other situations, they're a poor match for SEO A/B testing. A page's ranking builds up over time, and changes interact heavily with ranking, so the effect of one period carries over into the next. Switchback testing on any reasonable timeframe therefore isn't possible.

A better solution is to randomize by URL. You make changes to only some of the pages on your website, then measure how those pages perform over time compared with the pages that didn't receive the treatment.

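For example, say you have hundreds of blog posts and want to run an experiment on them. You could apply the change to a random subset of posts (the test group) and leave the rest untouched (the control group).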
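One way to randomize by URL is to hash each page's URL together with an experiment-specific salt. That way, a given page always lands in the same group. Below is a minimal, dependency-free sketch in TypeScript, chosen to match the Node.js stack in the case study below. The function names, the salt string, and the hash choice (FNV-1a followed by a MurmurHash3-style finalizer) are assumptions for illustration. None of this is Statsig's assignment algorithm.

```typescript
// Hypothetical sketch: stable page-level bucketing keyed on the URL.
// NOT Statsig's assignment algorithm; FNV-1a plus a MurmurHash3-style
// finalizer is used only because it is short and dependency-free.

type Group = "test" | "control";

// 32-bit FNV-1a over UTF-16 code units (same as the byte version for ASCII URLs).
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// MurmurHash3 fmix32 finalizer: spreads input bits across all output bits.
function fmix32(h: number): number {
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

// Drop query string, fragment, and trailing slashes so variants of one page share a bucket.
function normalizeUrl(url: string): string {
  const path = url.split(/[?#]/)[0].replace(/\/+$/, "");
  return path === "" ? "/" : path;
}

// Same URL + same experiment salt => same group on every request, including Googlebot's crawls.
// Using a different salt per experiment gives each experiment an independent split.
function assignPage(url: string, experimentSalt: string, testShare = 0.5): Group {
  const h = fmix32(fnv1a32(`${experimentSalt}:${normalizeUrl(url)}`));
  return h / 0x100000000 < testShare ? "test" : "control";
}

// Usage: bucket a few (hypothetical) blog URLs for a title-branding experiment.
for (const url of ["/blog/seo-ab-testing", "/blog/cuped", "/blog/stratified-sampling"]) {
  console.log(url, assignPage(url, "blog-title-branding"));
}
```

Hashing instead of storing a random draw per page means every server, and every crawl, computes the same assignment without a lookup table.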

On the surface, this approach solves the problems described above, but it comes with its own issues to be mindful of.

To name a few:

Some websites don't have many pages to experiment with. That creates all sorts of challenges, the foremost being that it's difficult to split pages into test and control groups with evenly distributed metrics.

You have to choose changes that can be applied across many pages and that you'd expect to have a similar impact on each page you apply them to.

Designing your experiment

We've decided on our high-level experimental setup (randomizing the experience per-page), and now have to decide what we'll experiment on, and how we'll create buckets of pages.

What you experiment on should be something that can scale across many pages and has the potential to impact SEO meaningfully. Here are a few examples of common SEO A/B tests:

Page title changes, e.g. removing your company branding from product detail page titles.

Image optimizations, such as enabling lazy loading across all pages.

Multimedia enhancements, like adding audio versions of blog posts to see if this boosts engagement or traffic.

Each of these tests can be applied to a broad set of pages, and if they drive impact, you'd expect it to generalize across the site.

These tests might seem simplistic, but they're shockingly powerful. SearchPilot maintains a database of SEO A/B tests in which simple changes, like branding changes in title tags, have produced double-digit percentage uplifts.

Next, you have to split your pages into test and control buckets. If you have enough pages to run a proper A/B test, you'll want to automate this step. An engineer can write simple code that calls an experimentation system's assignment API for each page and applies the changes only to pages in the test group.
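Here is a minimal sketch of that wiring. It uses the image lazy-loading test from the list above as the treatment. `AssignmentFn` is a hypothetical stand-in for whatever assignment call your experimentation platform exposes; it is not a real SDK signature. The regex-based HTML rewrite is also a simplification. A production setup would more likely change the template that renders the `<img>` tags.

```typescript
// Hypothetical stand-in for your experimentation platform's assignment call,
// keyed on the page URL. Not a real SDK signature.
type Group = "test" | "control";
type AssignmentFn = (pageUrl: string) => Group;

// Treatment for an image lazy-loading test: add loading="lazy" to <img> tags
// that don't already declare a loading attribute.
function addLazyLoading(html: string): string {
  return html.replace(/<img\b(?![^>]*\bloading=)/gi, '<img loading="lazy"');
}

// Only pages assigned to "test" receive the change; control pages are served untouched.
function renderPage(pageUrl: string, html: string, assign: AssignmentFn): string {
  return assign(pageUrl) === "test" ? addLazyLoading(html) : html;
}

// Usage with a fixed assignment in place of a real platform call.
const html = '<p>Hi</p><img src="a.png"><img loading="eager" src="b.png">';
console.log(renderPage("/blog/a", html, () => "test"));
```

The assignment must return the same group for a URL on every request, so that Googlebot sees a consistent version of each page across crawls.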

This is a best-practice approach because it's scalable and simple. We think Statsig does it as well as anyone, but we're not the only vendor that offers this functionality, and many platforms could work for it.

If you've done all of the above, you're nearly set! Apart from a few lingering problems, like getting the group distributions right (which we'll discuss below), you're ready to launch your first SEO A/B test.


The right tools for the job

We simplified things a bit above to show how approachable and powerful SEO A/B tests can be. In truth, you'll still need some flexibility from your tools to get the job done.

You'll need to be able to:

1. Randomize on any variable

As we mentioned above, randomizing by user or by time simply won't work here. When approaching SEO A/B testing, the last thing you want is a tool whose randomization and variant assignment center on user-only identifiers, like userID.

Statsig lets you define any variable, such as a page URL, as an ID type in your project, so you can randomize the experience consistently on it.

2. Run in any environment

You're not going to change where your website is hosted to enable your SEO A/B testing strategy. Statsig offers SDKs in 30+ languages, covering every major frontend and server framework. It also supports content cached on your CDN and integrates with tools like Contentful.

3. Sit together with all your other experiments

We have yet to see many companies hire a team dedicated solely to SEO A/B testing. Usually, a few entrepreneurial digital marketers hear of the idea and set off to try it out.

If that's the case, SEO A/B tests probably aren't the only experiments you're running. Statsig lets you keep them all on one platform, including product-side experiments, geotests, and experiments set up with a visual editor.

4. The big one: the ability to fix bad bucketing

When you're SEO A/B testing, it's easy to accidentally run a bad experiment. Suppose your one killer product page or your best blog post generates 40% of your pageviews. Whichever bucket that page lands in will almost certainly win your experiment, unless you can control for it.

First of all, Statsig won't let you run a bad experiment unnoticed. Suppose you accidentally started an experiment with normal randomization and the 40% blog post in one bucket. You'd immediately get warnings about pre-experimental bias in your experiment.

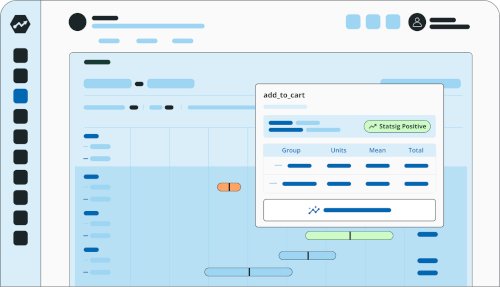
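To make the 40% example concrete, here is a hypothetical pre-experiment balance check. It compares each group's average pre-period pageviews per page. It is not Statsig's bias detection, and the page names and traffic numbers are invented for illustration.

```typescript
// Hypothetical pre-experiment balance check; not Statsig's bias detection.
interface Page {
  url: string;
  prePageviews: number; // pageviews in a window before the experiment starts
}
type Group = "test" | "control";

// Relative gap between the groups' mean pre-period pageviews per page:
// 0 means perfectly balanced, 1.0 means test pages averaged twice the control traffic.
function preExperimentGap(pages: Page[], groupOf: (url: string) => Group): number {
  let testSum = 0;
  let testN = 0;
  let controlSum = 0;
  let controlN = 0;
  for (const p of pages) {
    if (groupOf(p.url) === "test") {
      testSum += p.prePageviews;
      testN++;
    } else {
      controlSum += p.prePageviews;
      controlN++;
    }
  }
  if (testN === 0 || controlN === 0) throw new Error("both groups need at least one page");
  const controlMean = controlSum / controlN;
  if (controlMean === 0) throw new Error("control group has no pre-period traffic");
  return (testSum / testN - controlMean) / controlMean;
}

// Invented example: one hit post with 40% of all pageviews (360 of 900).
const pages: Page[] = [{ url: "/blog/hit", prePageviews: 360 }];
for (let i = 1; i <= 9; i++) pages.push({ url: `/blog/post-${i}`, prePageviews: 60 });

// Test group: the hit post plus posts 1-4; control: posts 5-9.
const testUrls = new Set(["/blog/hit", "/blog/post-1", "/blog/post-2", "/blog/post-3", "/blog/post-4"]);
const gap = preExperimentGap(pages, (url) => (testUrls.has(url) ? "test" : "control"));
console.log(`test pages averaged ${(gap * 100).toFixed(0)}% more pre-period traffic than control`);
// → test pages averaged 100% more pre-period traffic than control
```

Before any change ships, the test group already averages double the control group's traffic. Any raw comparison of the two groups would credit that head start to the treatment.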

Secondly, we have tools to fix the problem. Stratified sampling, a fully automated tool in our UI, tries a handful of different experiment setups to make sure test and control start with even metric values.
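Statsig's exact algorithm isn't described here. The following is only a sketch of the idea above, implemented as simple re-randomization. It shuffles the pages with a seeded PRNG, splits them in half, and measures pre-period balance. It repeats this for many seeds and keeps the most balanced split. The seeded generator (mulberry32), the candidate count, and the gap metric are assumptions for illustration.

```typescript
// Sketch of re-randomization: try many seeded splits, keep the most balanced one.
// Not Statsig's stratified sampling implementation.
interface Page {
  url: string;
  prePageviews: number;
}
interface Split {
  seed: number;
  test: Page[];
  control: Page[];
  gap: number; // |mean(test) - mean(control)| / mean(all pages)
}

// mulberry32: small seeded PRNG returning floats in [0, 1).
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Fisher-Yates shuffle on a copy, driven by the seeded PRNG.
function shuffled<T>(items: T[], rand: () => number): T[] {
  const out = items.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [out[i], out[j]] = [out[j], out[i]];
  }
  return out;
}

function meanViews(pages: Page[]): number {
  return pages.reduce((sum, p) => sum + p.prePageviews, 0) / pages.length;
}

// One candidate setup: shuffle with this seed, first half is test, rest is control.
function splitWithSeed(pages: Page[], seed: number): Split {
  if (pages.length < 2) throw new Error("need at least two pages");
  const overall = meanViews(pages);
  if (overall === 0) throw new Error("pages have no pre-period traffic");
  const order = shuffled(pages, mulberry32(seed));
  const half = Math.ceil(order.length / 2);
  const test = order.slice(0, half);
  const control = order.slice(half);
  const gap = Math.abs(meanViews(test) - meanViews(control)) / overall;
  return { seed, test, control, gap };
}

// Try seeds 1..candidates and keep the split with the smallest pre-period gap.
function mostBalancedSplit(pages: Page[], candidates = 100): Split {
  let best = splitWithSeed(pages, 1);
  for (let seed = 2; seed <= candidates; seed++) {
    const candidate = splitWithSeed(pages, seed);
    if (candidate.gap < best.gap) best = candidate;
  }
  return best;
}
```

The chosen seed fully determines the split. You can store it, or the resulting list of test URLs, and serve pages from that fixed assignment for the whole experiment. No amount of reshuffling can balance a single page that holds 40% of traffic, though, because that page must land in one group or the other.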

We also have tools like CUPED. CUPED uses metric values observed before the experiment to adjust for pre-existing differences between groups, which reduces the impact of bias and helps your experiments reach a result faster.
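CUPED's core adjustment is short enough to sketch. For each unit (here, a page), it replaces the in-experiment metric Y with Y - θ(X - mean(X)). X is the same metric measured before the experiment, and θ = cov(X, Y) / var(X), estimated over all pages in both groups. This is the textbook form of the adjustment, not Statsig's production implementation, which may differ in details.

```typescript
// Textbook CUPED adjustment (not Statsig's production implementation).
// x: each page's pre-experiment metric; y: the same page's in-experiment metric.
// theta is estimated on all pages pooled across test and control.
function cupedAdjust(x: number[], y: number[]): number[] {
  if (x.length !== y.length || x.length < 2) throw new Error("need paired samples (n >= 2)");
  const n = x.length;
  const meanX = x.reduce((a, b) => a + b, 0) / n;
  const meanY = y.reduce((a, b) => a + b, 0) / n;
  let cov = 0;
  let varX = 0;
  for (let i = 0; i < n; i++) {
    cov += (x[i] - meanX) * (y[i] - meanY);
    varX += (x[i] - meanX) ** 2;
  }
  if (varX === 0) return y.slice(); // no pre-period variation to exploit
  const theta = cov / varX;
  // Subtract the part of y that the pre-period value predicts; the overall mean is unchanged.
  return y.map((yi, i) => yi - theta * (x[i] - meanX));
}
```

Under randomization, comparing the mean adjusted values between test and control estimates the same treatment effect as comparing raw means. It does so with lower variance whenever X and Y are correlated, which is why experiments reach a conclusion sooner.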

Case study: Shorter blog titles

At Statsig, we compulsively test whatever we reasonably can.

We're currently exploring whether to remove branding from our blog post titles, using an SEO A/B test. Our website runs on a Node.js Express server, so starting the experiment was simple. We added each page's URL to the user object we pass to the SDK and added a couple of experiment checks at the call sites.
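The call-site change for this test can be sketched as a small title function. The title format (a `" | Statsig"` or `" - Statsig"` suffix) and the `getGroup` callback are hypothetical. `getGroup` stands in for the SDK experiment check keyed on the page URL, whose exact API isn't reproduced here.

```typescript
type Group = "test" | "control";

// Hypothetical title format: "<post title> | Statsig" or "<post title> - Statsig".
// Requiring whitespace around the separator leaves titles like "Pre-Statsig era" alone.
function stripBranding(title: string): string {
  return title.replace(/\s+[|-]\s+Statsig\s*$/, "");
}

// getGroup stands in for the experiment check at the call site (assumed, not a real SDK signature).
function pageTitle(pageUrl: string, brandedTitle: string, getGroup: (url: string) => Group): string {
  return getGroup(pageUrl) === "test" ? stripBranding(brandedTitle) : brandedTitle;
}

// Example with a fixed assignment in place of a real SDK call.
const assignments: Record<string, Group> = { "/blog/seo-ab-testing": "test", "/blog/cuped": "control" };
const getGroup = (url: string): Group => assignments[url] ?? "control";

console.log(pageTitle("/blog/seo-ab-testing", "SEO A/B testing | Statsig", getGroup)); // → SEO A/B testing
console.log(pageTitle("/blog/cuped", "CUPED explained | Statsig", getGroup)); // → CUPED explained | Statsig
```

In an Express route handler, the returned string would simply be passed to the template that renders the page's `<title>` tag.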

As of April 11, the experiment is halfway through its run and looking promising (yes, we peeked!).

Check back later for the results.
