Building great products is not easy.

Getting to product-market fit is even harder. It usually takes multiple iterations, each guided by product feedback, to get it right. Given the choice, it’s always better to gather this feedback from a small set of users than from a 100% “YOLO” launch. At a minimum, you want to make sure that new features don’t harm product metrics.

Traditionally, companies collected early feedback by showing design mocks to volunteer users, a process known as qualitative research. This works for the most part but has a couple of drawbacks:

- This process is expensive and time-consuming.
- Asking users for feedback doesn’t always result in the right insights.

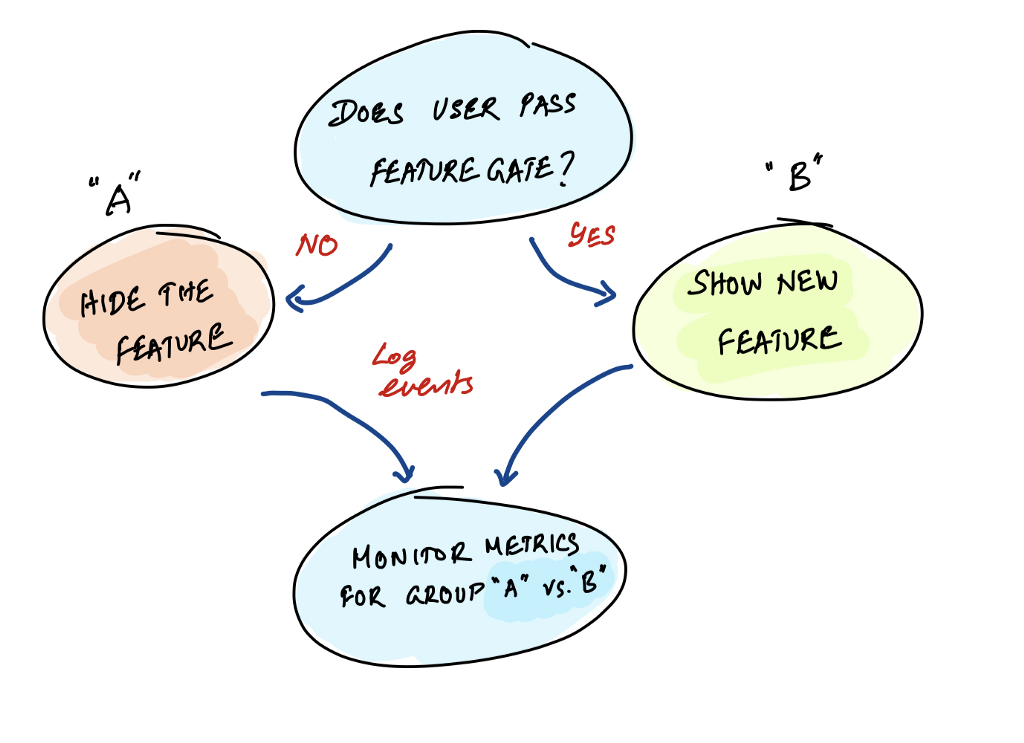

So, what do modern product teams do to validate new product features? They regularly launch and test new features in production, and they do this safely with development tools called “feature gates” or “feature flags”. Using feature gates, teams can open up access to a brand-new feature for just a small portion of the user base: this could be 5% of all users, 10% of just English-speaking users, or 15% of users who have previously used a similar feature. This lets teams monitor the relevant product metrics for just the set of people who have access to the feature. If those metrics hold steady or improve, the team has evidence that the feature is ready for a broader launch.
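To make the idea concrete, here is a minimal sketch of a percentage-based gate in Python. It is not Statsig's API; the names `bucket`, `check_gate`, and `new_checkout`, and the shape of the user dictionary, are all hypothetical and chosen for illustration. The sketch assumes each user has a stable ID and hashes it together with the gate name, so a given user always gets the same answer for a given gate.

```python
import hashlib


def bucket(gate_name: str, user_id: str) -> float:
    """Map a (gate, user) pair to a stable value in [0, 100)."""
    # Hashing the gate name with the user ID keeps the assignment stable
    # per user, and independent from one gate to the next.
    digest = hashlib.sha256(f"{gate_name}:{user_id}".encode("utf-8")).digest()
    return (int.from_bytes(digest[:8], "big") % 10_000) / 100.0


def check_gate(gate_name, user, rollout_percent, condition=None) -> bool:
    """Return True if this user should see the gated feature.

    `condition` is an optional targeting rule (hypothetical), for example
    "English-speaking users only". Users who fail it never pass the gate.
    """
    if condition is not None and not condition(user):
        return False
    return bucket(gate_name, str(user["id"])) < rollout_percent


# Usage: open a hypothetical "new_checkout" feature to 10% of
# English-speaking users in a made-up user population.
users = [
    {"id": f"user-{i}", "language": "en" if i % 2 == 0 else "fr"}
    for i in range(10_000)
]
exposed = [
    u for u in users
    if check_gate("new_checkout", u, 10, lambda u: u["language"] == "en")
]
print(f"{len(exposed)} of {len(users)} users can see the feature")
```

Because the bucket depends only on the gate name and the user ID, raising the rollout from 10% to 50% keeps everyone who already had access and simply adds more users. A production system would also log which users were exposed, so that product metrics can be compared between users with and without the feature.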

This model of feature development has the additional benefit of decoupling teams from each other, so partially developed features can still go through a release cycle safely hidden behind a feature gate, without blocking other teams.

Ready to experiment with (pardon the pun) feature gates? Check out https://www.statsig.com