One of our customers recently asked: “When should we use a feature gate?”

A simple question, but I realized right away that each of us would probably answer it slightly differently.

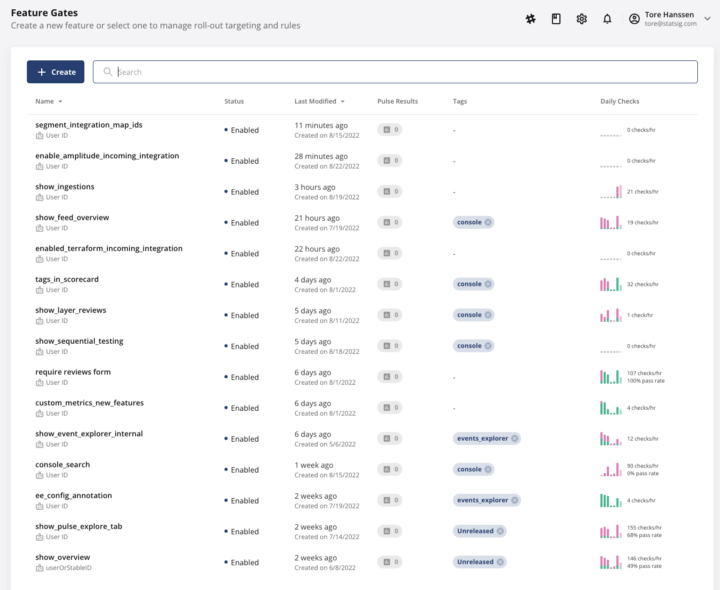

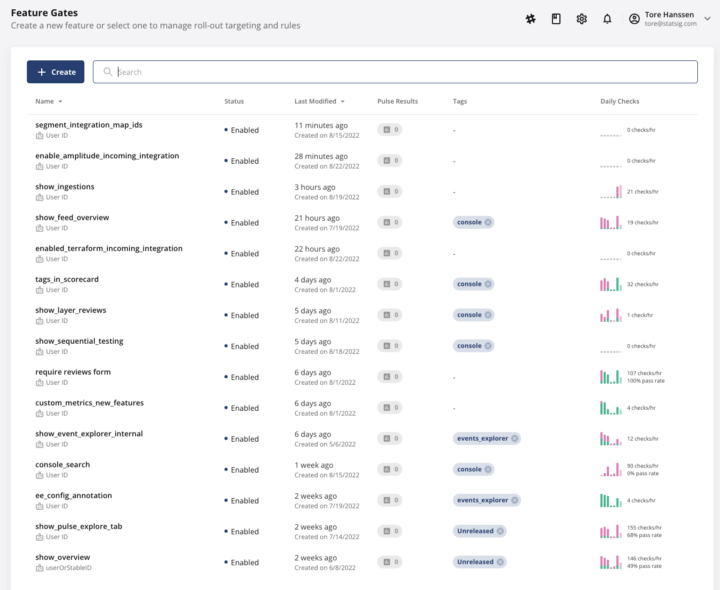

Statsig’s Own Development Flow

In general, our development flow favors adding a feature gate for every new feature. Each feature will be actively worked on behind a gate which is only enabled for the engineers, designers, and PMs who are working on it.

When the feature is ready for a broader audience, it is moved into the “dogfooding” state, where all employees at Statsig have access. After addressing all internal dogfooding feedback/bug fixes, we will open that gate to the target audience (typically a handful of customers, and eventually all users).
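To make the stages concrete, here is a minimal sketch of that progression in Python. It is not the Statsig SDK or how our console is implemented; `Stage`, `User`, `Gate`, and every field name are hypothetical, chosen only to mirror the team, dogfooding, target audience, and everyone steps described above.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical rollout stages, ordered from narrowest to widest."""
    TEAM = 1      # only the people building the feature
    DOGFOOD = 2   # all employees
    TARGET = 3    # employees plus a chosen set of customers
    EVERYONE = 4  # all users


@dataclass(frozen=True)
class User:
    user_id: str
    company_id: str
    is_employee: bool = False


@dataclass
class Gate:
    name: str
    stage: Stage = Stage.TEAM
    team_ids: set[str] = field(default_factory=set)
    target_company_ids: set[str] = field(default_factory=set)

    def check(self, user: User) -> bool:
        """Return True if the user should see the gated feature."""
        if self.stage == Stage.EVERYONE:
            return True
        if user.user_id in self.team_ids:
            return True
        if self.stage >= Stage.DOGFOOD and user.is_employee:
            return True
        if self.stage >= Stage.TARGET and user.company_id in self.target_company_ids:
            return True
        return False


gate = Gate("new_chart", team_ids={"alice"}, target_company_ids={"acme"})
print(gate.check(User("alice", "statsig", is_employee=True)))  # on the feature team
```

Widening the audience is then a one-line change to `gate.stage`, with no code deploy in the sketch's model.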

With this flow, employees are always exposed to the bleeding edge of features on statsig.com. This helps us catch most strange side effects and unintended gaps or overlaps between features. The flip side is that some employees choose to opt out of new features, even ones that are in “dogfooding.”

Ensuring Stability

Any employees who spend time demoing our product externally prefer to have the stable, external perspective, and not be exposed to pre-release features. For this purpose, we created the “Employees who Demo” segment.

We also enable or disable features based on development environment versus production to prevent unreleased features from breaking production versions of the Statsig console.
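Here is a rough sketch of how those two rules could combine in a single check. Everything in it is an assumption made for illustration: the `DogfoodGate` class, the `demo_user_ids` set standing in for the “Employees who Demo” segment, the environment names, and the choice to let the development environment win over the opt-out.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class User:
    user_id: str
    is_employee: bool = False


@dataclass
class DogfoodGate:
    name: str
    # Hypothetical stand-in for the "Employees who Demo" segment.
    demo_user_ids: set[str] = field(default_factory=set)
    # Assumed rule: in these environments the gate passes for everyone.
    always_on_envs: set[str] = field(default_factory=lambda: {"development"})

    def check(self, user: User, environment: str) -> bool:
        """Return True if the user should see the dogfooding feature."""
        if environment in self.always_on_envs:
            return True
        # In production, people who demo keep the stable, external view.
        if user.user_id in self.demo_user_ids:
            return False
        return user.is_employee


gate = DogfoodGate("new_chart", demo_user_ids={"dana"})
print(gate.check(User("dana", is_employee=True), "production"))
```

The order of the checks is the design decision here. Putting the segment exclusion ahead of the employee rule is what lets someone opt out of a feature they would otherwise receive.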

This is a general workflow that will likely work quite well for most companies. There are other scenarios where you might use a feature gate, and even philosophical debates about them. Borrow what you will from the following philosophies of feature gating.

The “Always Feature Gate” Philosophy

Some people believe you should always use a Feature Gate. And when I say always, I mean always. In this school of thought, of course you should use a Feature Gate for big new features or risky changes. But you should also use one even when just setting up the split in experiences adds significant overhead. And, yes, you should use one even if the change you are making is a bug fix.

Even for a bug fix, you say?

Rolling out a bug fix with a Feature Gate and measuring the impact of that change can be useful, but it does expose end users to known bugs for longer periods of time in the name of measurement.

If you choose to go this route, you definitely want to expand the rollout and get the fix out to everyone as quickly as possible.
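One common way to expand a rollout without flip-flopping users is deterministic hashing: each user lands in a fixed bucket, and raising the percentage only ever adds users. The sketch below illustrates that general technique, not necessarily how Statsig assigns users; `bucket`, `passes_rollout`, and the gate name `fix_login` are made up for the example.

```python
import hashlib


def bucket(gate_name: str, user_id: str, buckets: int = 10_000) -> int:
    """Map a (gate, user) pair to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(f"{gate_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def passes_rollout(gate_name: str, user_id: str, percent: float) -> bool:
    """Return True if the user falls inside the first `percent` of buckets.

    `percent` is on a 0-100 scale; with 10,000 buckets each bucket is 0.01%.
    """
    return bucket(gate_name, user_id) < percent * 100


users = [f"user-{i}" for i in range(2000)]
at_10 = {u for u in users if passes_rollout("fix_login", u, 10)}
at_50 = {u for u in users if passes_rollout("fix_login", u, 50)}
print(at_10 <= at_50)  # everyone who had the fix at 10% still has it at 50%
```

Including the gate name in the hash keeps bucket assignments independent across gates, so the same users are not always first in line for every change.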

Long-Term Holdouts

Another controversial usage is for a long-term holdout. Facebook was famous for this: Even huge new features like Ads in Newsfeed or Marketplace were never available for a small set of users, for the sake of measuring and understanding the long-term impact of having these features on Facebook.

You read that right—some lucky, small percentage of Facebook users never saw ads, even for years after Facebook was wholly dependent on its Ads business for revenue and growth. Facebook even allows advertisers to run these types of tests, comparing a holdout group of users who never saw your ads to those who did.

There were long internal debates about these holdouts, mostly around their target duration: Holdouts can be incredibly useful to measure longer-term impact, but when they stretch beyond a year, they can be unfair to the unlucky users who don’t get access to the latest and greatest features.
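As a sketch of the mechanics, a holdout can be modeled as a small, stable slice of users who fail every gate it covers until an agreed end date. This is an illustration only, not Facebook's or Statsig's implementation; `Holdout`, `feature_enabled`, and the `ends_on` field are hypothetical names, and the end date is there to make the target duration an explicit decision.

```python
import hashlib
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Holdout:
    name: str
    percent: float  # share of users held out, on a 0-100 scale
    ends_on: date   # after this date the holdout is released

    def contains(self, user_id: str, today: date) -> bool:
        """Return True if the user is currently held out."""
        if today > self.ends_on:
            return False
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % 10_000 < self.percent * 100


def feature_enabled(gate_passes: bool, holdout: Holdout, user_id: str, today: date) -> bool:
    """A held-out user never sees the feature, even if the gate itself passes."""
    return gate_passes and not holdout.contains(user_id, today)


holdout = Holdout("long_term_holdout", percent=1.0, ends_on=date(2025, 12, 31))
print(feature_enabled(True, holdout, "user-42", date(2026, 1, 1)))  # past the end date
```

Because the hash depends only on the holdout name and the user ID, the same users stay held out across every feature launched during that period, which is what makes the long-term comparison meaningful.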


In Conclusion

Take some inspiration from each of these approaches and form your own opinion about when to use Feature Gates.

The working model for your company likely draws from each of the ideas above. If you only use a feature gate when you think it’s a critical or big change, you will miss cases where a new feature has an unintended side effect.

Related: Vineeth explores a great example of how shipping a “no-brainer” feature behind a feature gate can help you uncover unintended consequences.

On the other hand, if you try to add a feature gate for every bug fix, the number of potential experiences can grow, further increasing the surface area you support, and making it more difficult to reason about the product experience. You’ll also be exposing some people to known bugs while you are running a test!
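To put a rough number on that growth: if gates are independent and each one is simply on or off, n partially rolled-out gates allow up to 2^n distinct product experiences. The snippet below enumerates them for a few made-up gate names. It is an upper bound under those assumptions, since real gates often target overlapping or mutually exclusive audiences.

```python
from itertools import product


def experiences(gate_names: list[str]) -> list[dict[str, bool]]:
    """List every on/off combination of the given gates."""
    return [
        dict(zip(gate_names, states))
        for states in product([False, True], repeat=len(gate_names))
    ]


combos = experiences(["new_chart", "fix_login", "fix_export"])
print(len(combos))  # 8 combinations for 3 gates
print(2 ** 10)      # 1024 for 10 gates
```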
