Staying competitive in e-commerce requires a keen understanding of customer behavior and expectations, along with continual fine-tuning of your pricing strategy.

“Pricing experiments,” once considered a tactic available only to major online merchants, are now more accessible and have become a core component of the e-commerce playbook.

In this post, we’ll explore the types of pricing experiments businesses run, along with key techniques and considerations. Consider this article a guide to the techniques businesses find useful, how learnings from one experiment can inform future iterations of your pricing strategy, and the questions you need to answer as you fine-tune your pricing.

What do pricing experiments look like in practice?

Businesses run a variety of experiments that fall under the “price testing” umbrella. Here are a few simple examples we’ve seen among Statsig customers:

Price-testing on individual products: Offering a lower price to your test group

Free or discounted shipping: Offering lower shipping costs to your test group

Promo codes for new users: Presenting a discount code to new site visitors in the test group

Presentation of discounts: Showing a slashed MSRP or showing the discount as a percentage

How these tests are implemented varies greatly depending on each business's technical stack and specific needs.

We’ve commonly seen these tests configured as simple A/B experiments in Statsig, using dynamic parameters to tell the store web app or back catalog service which pricing rule to apply.
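To make the idea concrete, here is a minimal sketch of a parameter-driven price test. It does not use the Statsig SDK: the experiment name, the `discount_pct` parameter, and the hash-based 50/50 bucketing are all assumptions made for illustration. In a real setup, the experimentation platform would handle assignment and serve the parameter values.

```python
import hashlib

# Hypothetical experiment definition: each group carries the parameter
# the storefront reads to decide which pricing rule to apply.
EXPERIMENT = {
    "name": "product_price_test",
    "groups": {
        "control": {"discount_pct": 0},
        "test": {"discount_pct": 10},
    },
}


def assign_group(user_id: str, experiment_name: str) -> str:
    """Deterministically bucket a user so they always see the same price."""
    digest = hashlib.sha256(f"{experiment_name}:{user_id}".encode()).hexdigest()
    return "test" if int(digest, 16) % 2 == 0 else "control"


def price_for_user(user_id: str, list_price_cents: int) -> int:
    """Apply the pricing rule for the user's group, using integer cents."""
    group = assign_group(user_id, EXPERIMENT["name"])
    discount_pct = EXPERIMENT["groups"][group]["discount_pct"]
    return list_price_cents * (100 - discount_pct) // 100


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_group(uid, EXPERIMENT["name"]), price_for_user(uid, 2000))
```

Keeping the price rule behind a parameter means the storefront code stays the same for both groups. Only the parameter value differs, which also makes it easy to end the test by shipping the winning value to everyone.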

Statsig's experimentation platform enables businesses to run tests anywhere in their stack. Critically, it also lets them measure more than clickstream and point-of-sale metrics: metrics derived from joining multiple datasets in the data warehouse, data from offline sources, and cumulative metrics like ROAS (return on ad spend), LTV (lifetime value), and retention.

Why is this important?

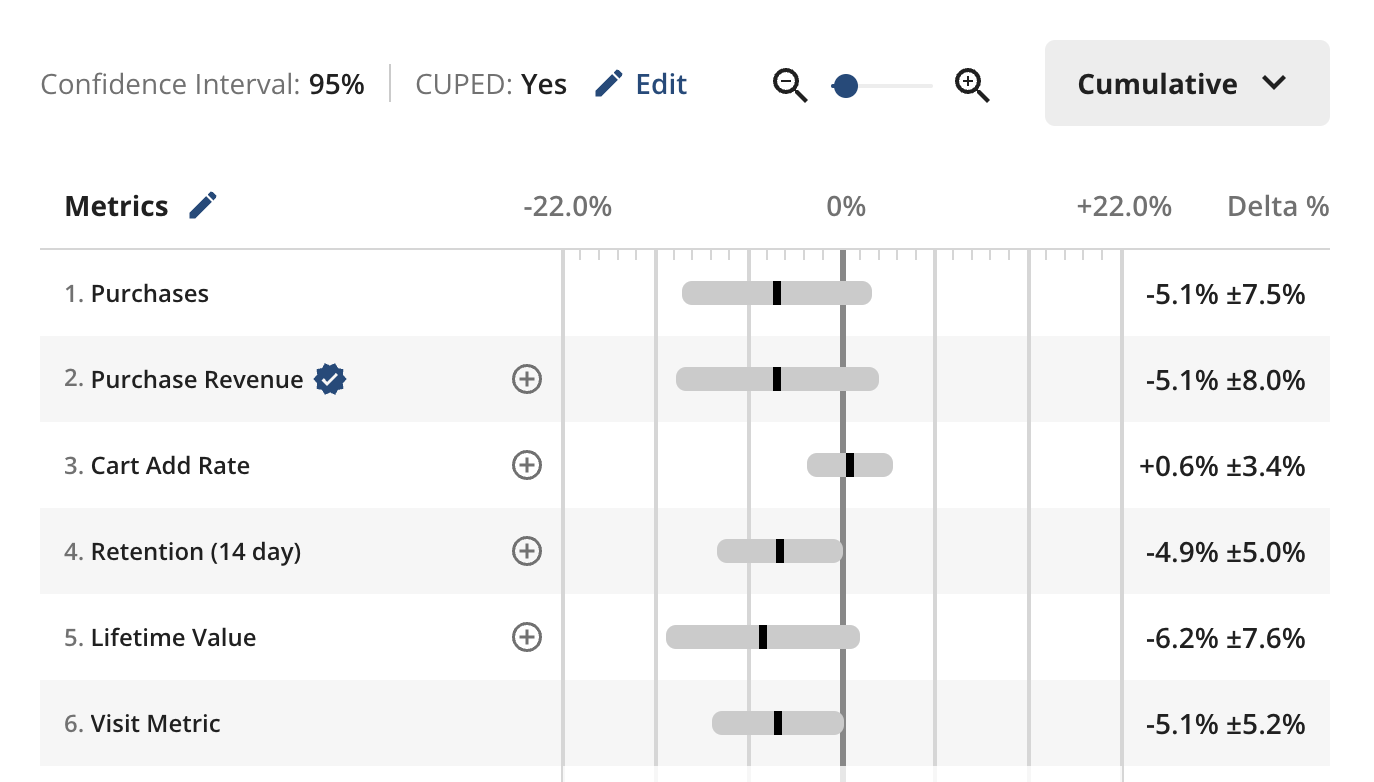

Clickstream-style metrics tracked “close to the test” (e.g., add_to_cart, purchase) tell only a fraction of the story. Incorporating more complex and longer-term metrics helps complete that picture.

To achieve some of these “deeper insights,” you’ll need a platform that supports three things: integrating online and offline data via data warehouse integrations and an HTTP API; Identity Resolution (for measuring customers across different identity boundaries); and Holdouts for measuring the long-term impact of pricing experiments.


Short-term pricing trade-offs and longer-term impacts

When running pricing and shipping experiments, it's crucial to recognize that short-term trade-offs can lead to long-term gains in retention and lifetime value.

Reducing the price of an item for users in the test group should lead to higher conversion rates but lower AOVs (average order values), and ideally the two effects offset each other. The more interesting question, and the true intent of the experiment, is how the change affects customer loyalty and retention.
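The offset between conversion rate and AOV can be checked with simple arithmetic, since revenue per visitor equals conversion rate multiplied by AOV. The sketch below uses made-up numbers (a 10% lower price in the test group, 10,000 visitors per group) purely to show the calculation. They are not results from any real experiment.

```python
def summarize(visitors: int, orders: int, revenue: float) -> dict:
    """Compute the short-term metrics for one experiment group."""
    return {
        "conversion_rate": orders / visitors,
        "aov": revenue / orders,
        # Equivalent to conversion_rate * aov.
        "revenue_per_visitor": revenue / visitors,
    }


# Hypothetical inputs: the test group converts more often at a lower AOV.
control = summarize(visitors=10_000, orders=300, revenue=15_000.0)
test = summarize(visitors=10_000, orders=345, revenue=15_525.0)

if __name__ == "__main__":
    for name, stats in (("control", control), ("test", test)):
        print(
            f"{name}: CR={stats['conversion_rate']:.2%} "
            f"AOV=${stats['aov']:.2f} "
            f"RPV=${stats['revenue_per_visitor']:.4f}"
        )
```

In this illustration, the higher conversion rate slightly more than offsets the lower AOV, so revenue per visitor rises a little. Whether that holds for a given store is exactly what the experiment has to measure, and it says nothing yet about retention.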

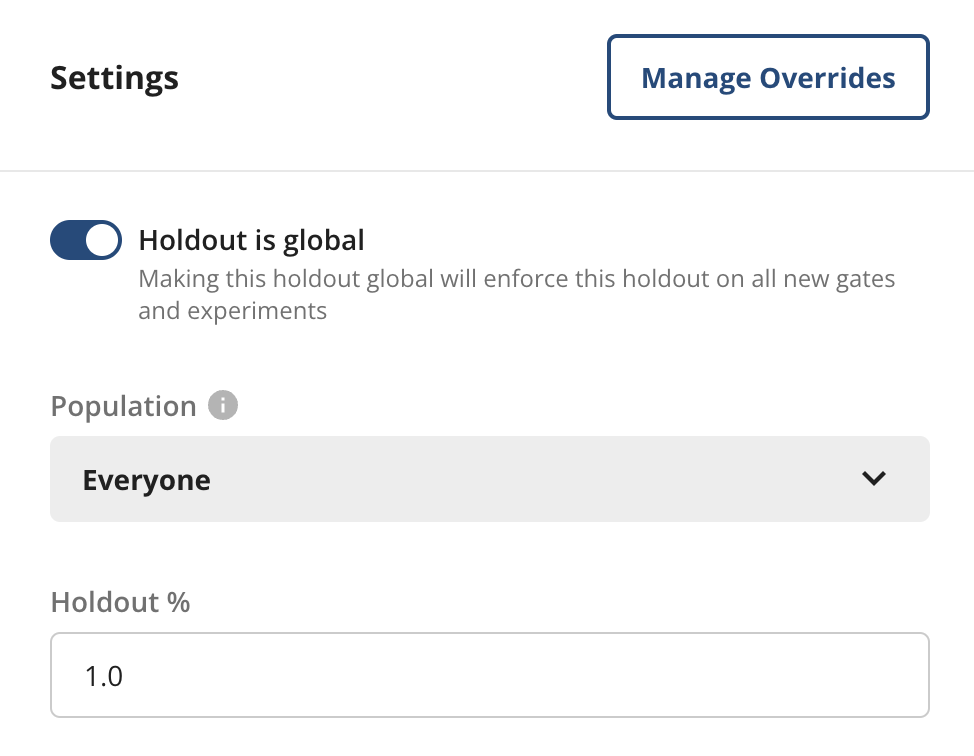

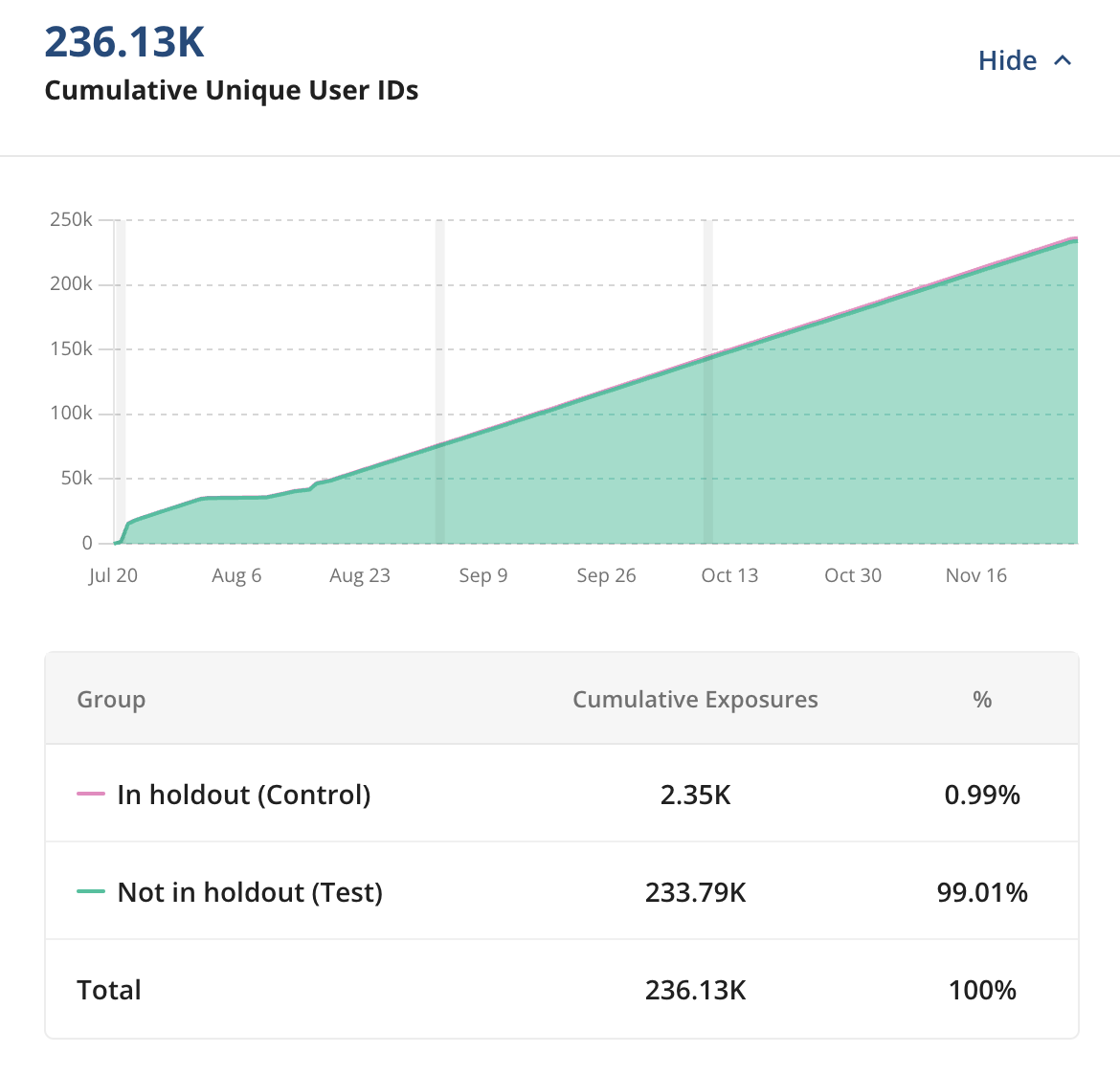

Statsig addresses this challenge with Holdouts, a feature that withholds a small slice of users from all pricing, shipping, and coupon experiments over an extended period, so their behavior can serve as a baseline for the cumulative impact of those experiments.
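The holdout idea can be sketched in a few lines. This is an illustration of the concept only, not how Statsig implements Holdouts: the 5% holdout size, the salt string, and the hash-based bucketing are assumptions chosen for the example.

```python
import hashlib

HOLDOUT_PCT = 5  # hypothetical: withhold 5% of users from every experiment
HOLDOUT_SALT = "pricing_holdout"  # hypothetical salt shared by all experiments


def bucket(user_id: str, salt: str) -> int:
    """Map a user to a stable bucket in the range 0..99 for a given salt."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def in_holdout(user_id: str) -> bool:
    """Holdout membership depends only on the user, never on the experiment."""
    return bucket(user_id, HOLDOUT_SALT) < HOLDOUT_PCT


def assign(user_id: str, experiment_name: str) -> str:
    """Return 'holdout', 'test', or 'control' for a user in an experiment."""
    if in_holdout(user_id):
        return "holdout"  # sees default pricing in every experiment
    return "test" if bucket(user_id, experiment_name) < 50 else "control"


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign(uid, "price_test"), assign(uid, "shipping_test"))
```

Because the holdout check uses its own salt, the same users are excluded from every pricing, shipping, and coupon test. Comparing them with everyone else months later gives an estimate of the combined effect of all the experiments that shipped.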

Additionally, Statsig's analytics tool (currently called Metrics Explorer) supports metric drill-downs in which you can group analysis by test group long after your experiments have concluded. Insights also lets businesses inspect the cumulative, all-time impact of experiments on any of their metrics.

Understanding customer segments

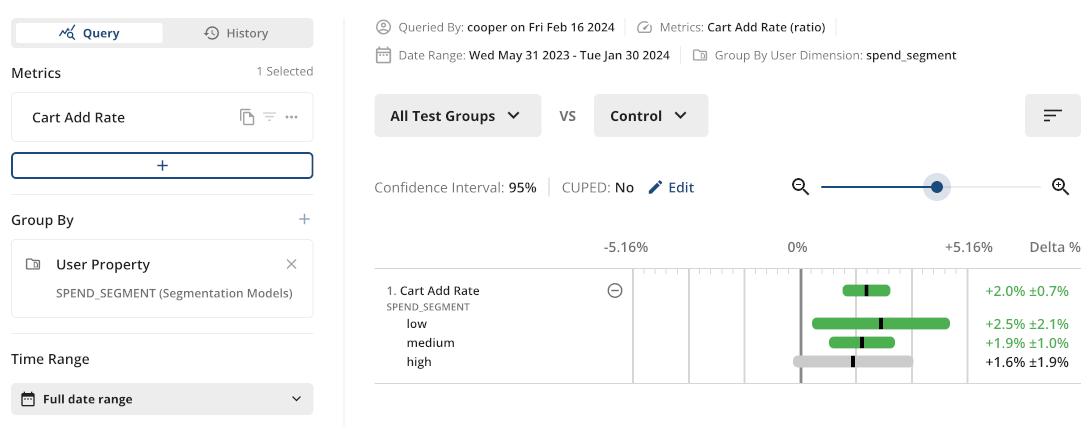

Understanding the varying effects on different types of users is a critical aspect of successful pricing experiments.

Many e-commerce businesses have a well-known segment of users whose spending behavior differs wildly from the rest. It’s critical to account for this when configuring a pricing experiment, since the change may cause unintended cannibalization within one segment.

Businesses often segment customers based on their value level and means of acquisition. Statsig's Pulse tools let businesses run custom queries to understand how different user segments behave. This nuanced approach allows them to tailor future experiments to specific audience characteristics.
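As a rough illustration of why a segment breakdown matters, the sketch below computes the conversion-rate lift separately for each segment. The segment names and the handful of rows are hypothetical, and this is plain Python rather than a Statsig query. A real analysis would run on far more data and include a significance test.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """rows: iterable of (segment, group, converted) tuples."""
    counts = defaultdict(lambda: [0, 0])  # (segment, group) -> [conversions, users]
    for segment, group, converted in rows:
        counts[(segment, group)][0] += int(converted)
        counts[(segment, group)][1] += 1
    return {key: conv / users for key, (conv, users) in counts.items()}


def lift_by_segment(rows):
    """Absolute difference in conversion rate (test minus control) per segment."""
    rates = conversion_by_segment(rows)
    segments = {segment for segment, _ in rates}
    return {
        s: rates[(s, "test")] - rates[(s, "control")]
        for s in segments
        if (s, "test") in rates and (s, "control") in rates
    }


# Hypothetical data: the lower price helps new users but not high-value ones.
ROWS = [
    ("high_value", "control", True), ("high_value", "control", False),
    ("high_value", "test", True), ("high_value", "test", False),
    ("new", "control", False), ("new", "control", False),
    ("new", "test", True), ("new", "test", False),
]

if __name__ == "__main__":
    for segment, lift in sorted(lift_by_segment(ROWS).items()):
        print(f"{segment}: lift={lift:+.2f}")
```

A blended result can hide this kind of split. If high-value customers would have bought at full price anyway, a discount shown to them only gives up margin.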

Takeaways

While there's no one-size-fits-all solution to price testing, adopting these methodologies has resulted in material ROI for Statsig customers. Not every experiment will be a major win, and some may lead to more questions. However, businesses that test rapidly using these techniques gain valuable insights that inform their pricing and shipping models in ways they couldn't otherwise have inferred.

E-commerce businesses that embrace these strategies stand to gain a competitive edge. Unlocking the full potential of experimentation requires a modern platform like Statsig, one that helps inform and model your pricing strategy to drive customer satisfaction and growth.
