When shopping for a modern experimentation platform, knowing which intangible traits to look for, and which technical capabilities help deliver successful experimentation, can make all the difference.

Intangibles to consider

Sometimes the best markers of a great partnership are the qualities you can’t quantify or assess at a glance. Take it from us: there’s a lot more to experimentation platforms than meets the eye.

Product releases and fixes

Looking at a company’s roadmap is helpful, but a roadmap alone can’t tell you whether the company can actually execute and deliver enhancements and innovations. Asking for a history of recent major product releases and fixes can help you gauge whether this potential provider is right for you.

Any stutter step in response to this request is a strong indicator that a company may be stagnant and unable to unblock your business down the road, after you’re locked into a contract. This is a huge reason why customers choose Statsig. (And, on a personal level, a huge reason why I wanted to work for the company.)

Real support from real people

“Thanks for your inquiry, we’ve received your request and are working to resolve it. We will respond shortly for more information.” Zendesk-style autoresponders have become the status quo in the SaaS space, and the weeks-long resolution times behind them cast a shadow over the customer experience.

Ask the potential provider who will be assisting you. Will it be support agents several layers removed from the product developers, or the engineers themselves? Will communication happen over email, snail mail, or in real time, directly in Slack?

Press the provider for a conversation with people who are commonly difficult to access (e.g., lead engineers or data scientists) during the buying process. If this request can’t be arranged in a reasonable time during the sales cycle, don’t expect access to improve after the sale.

Ability to run a meaningful PoC

Ask the sales team about the proof-of-concept (PoC) process. What does it look like? Which facets of the platform will you be able to test? What support will you get from the vendor, and over which channels: Slack, Zoom? (Statsig supports PoC customers in whatever way is needed to communicate effectively.)

Ask the provider for example PoC project plans, so you can be confident you’ll be steered toward evaluating the use cases that matter most to your business.

Alignment with company personality

Treat the company’s DNA and founding story as a guide to how well-equipped the provider is to understand and accommodate your use cases and technical requirements.

For instance, Statsig was founded by a group of ex-Facebook product leaders and engineers who used product experimentation practices to build one of the world's most engaging and successful social media platforms.

We’re convinced that product experimentation is critical to business success, and that it runs deeper than basic feature-flagging and web-testing use cases.

Empowering experimentation

As more and more SaaS platforms move into the space, the case that product experimentation is critical keeps being vindicated. But it’s important to consider which providers empower experimentation as part of their core business, and which treat it as just another SKU among their other product offerings.

The most successful online companies test across their entire stack and integrate various offline and online data sources for success signals. Some providers have great solutions for web experimentation but struggle when pressed to support advanced use cases and integration points.

In fact, client-side experimentation practices are becoming less and less effective, as evidenced by the sunsetting of Google Optimize and by some traditional web-testing tools moving into the “Fullstack” space. It’s critical that a provider’s team reflects hands-on engineering and data science expertise.

If a company lacks deep product experimentation experience in its DNA, that gap will show in how you’re supported as a customer.

The product and engineering teams at Statsig have hands-on practitioner experience (not just theoretical) in building amazing products through experimentation.

We continue to build our platform using these practices, which is apparent in how rapidly and reliably we ship large-scale product releases, and we empower our customers to succeed the same way.

To that end, we’re always delighted to connect prospective Statsig buyers with current customers who can attest to this.

Technical capabilities

Flexibility in defining Metrics

Metrics should be highly configurable and reusable. At a minimum, a platform should let you filter metrics on additional event metadata. Imagine you wanted to capture Total Sale Items Added To Cart as a metric.

Any modern platform should let you use the Add to Cart event as the basis and filter on the event metadata {sale_item: true}. Now imagine having to define a separate event with a long, descriptive key for every new facet you’d like to measure (e.g., add_to_cart_sale_item, search_products_electronics).

Unfortunately, this is the case in many platforms, and customers burn many engineering hours adding tracking code for one-off metric definitions and building ugly workarounds (e.g., an abstraction that constructs long event keys). Statsig offers much more flexibility in defining custom metrics that are reusable across all experiments.
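As a minimal sketch of the difference, assuming events arrive as dictionaries with an `event_name` and a `metadata` map (a hypothetical shape, not any particular platform’s schema), a reusable metric can be expressed as one base event plus metadata filters rather than a new event key per facet. `MetricDefinition` is an illustrative name, not Statsig’s API.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class MetricDefinition:
    """Hypothetical reusable metric: a base event plus metadata filters."""

    name: str
    event_name: str
    metadata_filters: dict[str, Any] = field(default_factory=dict)

    def matches(self, event: dict[str, Any]) -> bool:
        # The event must have the base name and every filtered key/value pair.
        if event.get("event_name") != self.event_name:
            return False
        metadata = event.get("metadata", {})
        return all(metadata.get(k) == v for k, v in self.metadata_filters.items())

    def count(self, events: list[dict[str, Any]]) -> int:
        # A simple count metric: the number of matching events.
        return sum(1 for e in events if self.matches(e))


events = [
    {"event_name": "add_to_cart", "metadata": {"sale_item": True, "category": "electronics"}},
    {"event_name": "add_to_cart", "metadata": {"sale_item": False}},
    {"event_name": "add_to_cart", "metadata": {"sale_item": True}},
    {"event_name": "search_products", "metadata": {"category": "electronics"}},
]

# Both metrics reuse existing events; no new tracking keys are needed.
sale_adds = MetricDefinition("Total Sale Items Added To Cart", "add_to_cart", {"sale_item": True})
electronics_searches = MetricDefinition("Electronics Searches", "search_products", {"category": "electronics"})
print(sale_adds.count(events))             # 2
print(electronics_searches.count(events))  # 1
```

Adding a new facet then means defining another filter, not shipping new tracking code.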

Capabilities that map to dev workflows & best practices

Platforms should support multiple environments (dev/stage/test/production) and make it easy to test and dogfood experiments before releasing to production. Many enterprise organizations also want greater oversight, with checks and balances on changes that affect production systems, managed through a reviewer workflow.

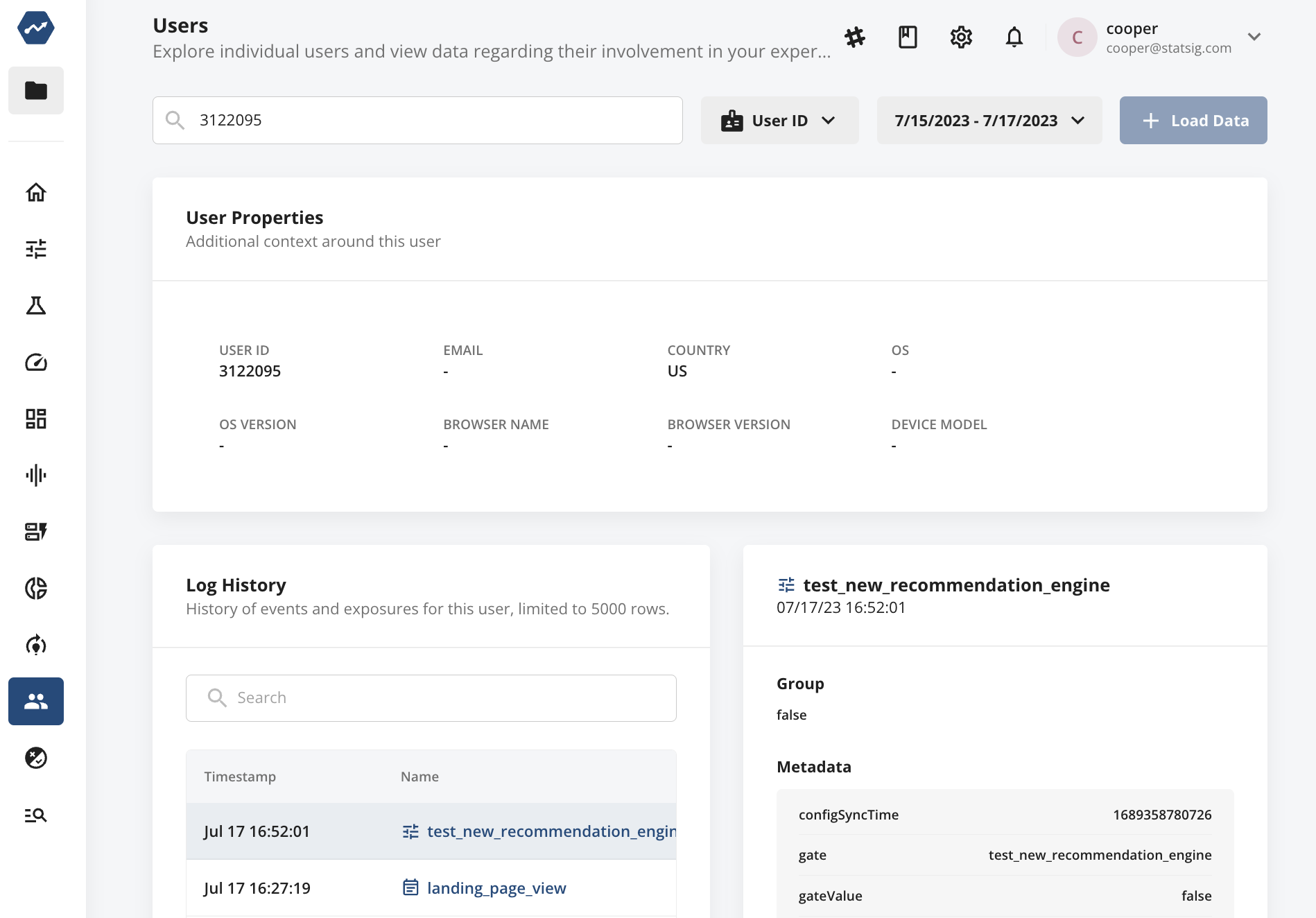
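The environment and review idea can be sketched roughly as follows. `Environment`, `ChangeRequest`, and `can_apply` are hypothetical names for illustration, not Statsig’s API; the sketch assumes non-production changes apply immediately, while production changes need approval from someone other than the author.

```python
from dataclasses import dataclass, field
from enum import Enum


class Environment(Enum):
    DEVELOPMENT = "development"
    STAGING = "staging"
    PRODUCTION = "production"


@dataclass
class ChangeRequest:
    """Hypothetical: a proposed change to one experiment in one environment."""

    experiment: str
    environment: Environment
    author: str
    approvals: set[str] = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        # Assumed policy: authors cannot approve their own changes.
        if reviewer == self.author:
            raise ValueError("authors cannot approve their own change")
        self.approvals.add(reviewer)


def can_apply(change: ChangeRequest, required_approvals: int = 1) -> bool:
    # Non-production changes apply immediately so teams can test and dogfood;
    # production changes wait for enough distinct reviewer approvals.
    if change.environment is not Environment.PRODUCTION:
        return True
    return len(change.approvals) >= required_approvals


staging_change = ChangeRequest("new_checkout_flow", Environment.STAGING, author="alice")
prod_change = ChangeRequest("new_checkout_flow", Environment.PRODUCTION, author="alice")
print(can_apply(staging_change))  # True
print(can_apply(prod_change))     # False: no approvals yet
prod_change.approve("bob")
print(can_apply(prod_change))     # True
```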

Debugging tools for developers

A modern platform should give developers real-time insight into the activity and data being transmitted into the system.

For example, Statsig offers real-time exposure log streams (to explore and debug “assignment reasons” and all associated user properties), event log streams (showing all associated event metadata and user properties), diagnostics (showing health checks, sample ratio mismatch detection, and hourly user totals), and user search for diagnosing specific users’ assignments and event history.

Integrating with a platform that lacks debugging tools will be a painful black-box experience.
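Sample ratio mismatch (SRM) detection, one of the diagnostics mentioned above, is commonly implemented as a chi-squared goodness-of-fit test comparing observed group sizes with the configured split. Here is a minimal two-group sketch using only the Python standard library; the function name is hypothetical, and this is not necessarily how any vendor computes it.

```python
import math


def srm_p_value(observed: list[int], expected_ratios: list[float]) -> float:
    """Hypothetical helper: chi-squared goodness-of-fit p-value for a two-group SRM check."""
    if len(observed) != 2 or len(expected_ratios) != 2:
        raise ValueError("this sketch only handles two groups")
    total = sum(observed)
    ratio_sum = sum(expected_ratios)
    expected = [total * r / ratio_sum for r in expected_ratios]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Two groups give one degree of freedom, and the chi-squared(1) survival
    # function is erfc(sqrt(chi2 / 2)), so no SciPy dependency is needed.
    return math.erfc(math.sqrt(chi2 / 2))


# A 50/50 experiment whose groups drifted apart (illustrative counts).
p = srm_p_value([50_000, 48_500], [0.5, 0.5])
print(f"SRM p-value: {p:.2e}")
```

A very small p-value (many teams use a strict threshold such as 0.001) suggests that assignment or logging is broken, and the experiment’s results shouldn’t be trusted until the cause is found.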

Modern stats methods

Businesses running digital experiments should expect a provider’s stats engine to support all of their use cases by implementing both the common and the advanced statistical methods used in the market.

Today, Statsig offers CUPED, sequential testing, Bonferroni correction, and winsorization, and it continues to invest in this area, with switchback testing (planned for release next month) and Bayesian analysis (in beta!).
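To make three of these methods concrete, here is a simplified sketch. Winsorization caps extreme values; CUPED reduces variance with a pre-experiment covariate X via Y' = Y - θ(X - mean(X)), where θ = cov(X, Y) / var(X); and Bonferroni correction divides the significance level by the number of comparisons. These are textbook forms under simplifying assumptions (an upper-tail cap only, a single covariate), not Statsig’s stats engine, and the function names are hypothetical.

```python
import math
from statistics import fmean, variance


def winsorize(values: list[float], upper_quantile: float = 0.99) -> list[float]:
    """Cap values at a nearest-rank upper quantile to limit outlier influence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(upper_quantile * len(ordered)))
    cap = ordered[rank - 1]
    return [min(v, cap) for v in values]


def cuped_adjust(metric: list[float], pre_metric: list[float]) -> list[float]:
    """CUPED: subtract theta * (X - mean(X)) using a pre-experiment covariate X."""
    mean_y, mean_x = fmean(metric), fmean(pre_metric)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(pre_metric, metric))
    var_x = sum((x - mean_x) ** 2 for x in pre_metric)
    theta = cov_xy / var_x  # the 1/(n - 1) factors cancel in the ratio
    return [y - theta * (x - mean_x) for y, x in zip(metric, pre_metric)]


def bonferroni_alpha(alpha: float, num_comparisons: int) -> float:
    """Per-comparison threshold that controls the family-wise error rate."""
    return alpha / num_comparisons


pre = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]       # pre-experiment metric per user
post = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0]    # in-experiment metric per user
adjusted = cuped_adjust(winsorize(post), pre)
# The adjusted variance is lower; the mean is unchanged.
print(variance(post), variance(adjusted))
print(bonferroni_alpha(0.05, 5))
```

Because the subtracted term has mean zero, CUPED leaves the metric’s mean unchanged while shrinking its variance whenever the covariate is correlated with the metric.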

Data accessibility and reproducible results

Most mature, sophisticated organizations want their data teams to have access to the underlying experiment data.

This access typically enables deeper offline analysis, joins with external datasets, and validation that experiment results are trustworthy. We want our results to be reproducible, so we empower data teams to do these things by making our data available for export directly from the Console and through the REST API.
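As an illustration of reproducing a result from exported data, the sketch below recomputes the difference in means and a 95% confidence interval from per-user rows. The CSV columns (`user_id`, `group`, `value`) and group names are assumptions, not the actual export format, and the 1.96 multiplier is a normal approximation that is only reasonable for large samples.

```python
import csv
import io
import math
from statistics import fmean, variance


def summarize_export(csv_text: str, control: str = "control", test: str = "test") -> dict[str, float]:
    """Recompute lift and a normal-approximation 95% CI from per-user rows."""
    values: dict[str, list[float]] = {control: [], test: []}
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["group"] in values:
            values[row["group"]].append(float(row["value"]))
    c, t = values[control], values[test]
    mean_c, mean_t = fmean(c), fmean(t)
    # Welch-style standard error of the difference in means (unequal variances).
    se = math.sqrt(variance(c) / len(c) + variance(t) / len(t))
    diff = mean_t - mean_c
    return {
        "control_mean": mean_c,
        "test_mean": mean_t,
        "absolute_lift": diff,
        "relative_lift": diff / mean_c,
        "ci_low": diff - 1.96 * se,
        "ci_high": diff + 1.96 * se,
    }


export = """user_id,group,value
u1,control,10
u2,control,12
u3,control,11
u4,test,13
u5,test,14
u6,test,12
"""
print(summarize_export(export))
```

Comparing these numbers with what the platform’s results page reports is a quick way to check that results are reproducible.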

Don’t take advertised integrations at face value

Many providers will claim to have integrations and partnerships, but you as a buyer will need to look closer to determine what’s real.

Is it a productized integration (owned by product teams), or a brittle client-side integration that requires a lot of custom code? We constantly hear that providers overpromise and underdeliver in this area.

We’re proud to support proper server-to-server integrations, which let you filter data coming into and out of the platform and typically require only an exchange of API credentials to authorize with the third-party platform.

Org structure to map to your products and business units

Many businesses we speak with need to structure their Statsig organization to support multiple business units and products, and to govern access by granting permissions on specific resources at the user-project level (not globally).

Performance impact

It’s important to understand what impact an experimentation toolset will have on your application performance.

Ask providers what they’re doing to monitor and ensure performant execution of experiments using their SDKs, and how large a footprint their tools and configs will have, both on disk and over the network. Providers should be able to share historical system performance data and real user monitoring (RUM) data on request-response latency.
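During a PoC you can also collect your own latency numbers to compare against what a provider reports. Here is a minimal sketch that times repeated calls and reports nearest-rank percentiles; `evaluate_gate` is a hypothetical stand-in you would replace with the provider’s actual SDK call.

```python
import math
import time
from typing import Callable


def measure_latency_ms(fn: Callable[[], object], iterations: int = 1000) -> list[float]:
    """Time repeated calls to `fn` and return each call's latency in milliseconds."""
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def percentile(samples: list[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def evaluate_gate() -> bool:
    # Hypothetical stand-in: replace with the provider's real SDK check.
    return sum(ord(c) for c in "user-123") % 2 == 0


latencies = measure_latency_ms(evaluate_gate, iterations=500)
for q in (50, 95, 99):
    print(f"p{q}: {percentile(latencies, q):.4f} ms")
```

It is worth timing SDK initialization separately from per-check evaluation, since initialization may involve network requests while individual checks may not.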