Cloud-hosted SaaS versus open-source: Choosing your platform

You’re faced with a dilemma:

Your organization has determined it needs to upgrade to a modern experimentation and analytics platform, and now you have to decide how to get there. Do you:

Build your own in-house platform

Fork an open-source platform and self-host it

Go buy a cloud solution

This is a conversation I have often, and it has given me a clear view of what makes a good decision. In this post, I’ll share how to approach the choice and what you may want to consider along the way.

In-house build vs. off-the-shelf solution

We won’t spend a lot of time in this article rehashing the build vs. buy comparison. A lot of people (including Statsig) have written long articles on this topic, so we’ll only provide the SparkNotes version here. Some key things to consider include:

Total Cost of Ownership (TCO): Building in-house requires investment in development, operations & support, and infrastructure. Consider the costs for engineers, data scientists, and infrastructure requirements, including the need for redundancy, resilience, and possibly near-real-time results.

Opportunity Cost: Building in-house may come at the expense of investment in core product features. Compare the functional needs, reliability, scalability, and costs of an in-house solution versus an external service to evaluate which may offer faster innovation.

Assessment and Trial: It’s easy to quickly assess the functionality of existing solutions relative to your needs today. You can’t “trial” an in-house build until you’ve built it, which delays time to value significantly.

Many companies look at these considerations and quickly opt to focus on vendor evaluation.

Unless you’re a Netflix, Facebook, or Amazon, with dedicated product teams specializing in data engineering, data science, experimentation, and all of the other disciplines required to stand up your own testing platform (i.e., scaling infrastructure and hosting, event-driven architecture, high-throughput event processing, data warehousing), there is a good chance you will be revisiting discussions with a cloud-platform provider in the near future.

We constantly see boomerang customers who come back to Statsig after previously saying they intended to build.

If you’re in that boat, then you face the next decision: buy a hosted solution, or self-host open-source software.

There’s no such thing as free

Fork an open-source repo for free, put it on some servers, and you’re off to the races!

While this sounds tempting, anyone who has spent time in technology knows this vision is far from reality. The idea of “free” open-source software obscures a number of serious costs: engineering maintenance, infrastructure, data processing and storage, time, and opportunity costs.
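To make those cost categories concrete, here is a minimal sketch of how you might tally them per option. The `AnnualCosts` class, its field names, and `cheapest_option` are all hypothetical names invented for this illustration, and the numbers in the usage section are placeholders rather than real pricing or benchmarks. Plug in your own estimates.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass
class AnnualCosts:
    """Yearly cost estimate for one option. Every field is an input you supply."""
    license_fees: float        # vendor subscription; 0 for open source
    engineering_hours: float   # setup plus maintenance hours per year
    hourly_rate: float         # fully loaded cost of one engineering hour
    infrastructure: float      # servers, networking, redundancy
    data_costs: float          # data processing and storage
    opportunity_cost: float    # your estimate of value lost to delayed product work

    def total(self) -> float:
        """Sum every category, converting engineering time into money."""
        return (
            self.license_fees
            + self.engineering_hours * self.hourly_rate
            + self.infrastructure
            + self.data_costs
            + self.opportunity_cost
        )


def cheapest_option(options: Mapping[str, AnnualCosts]) -> str:
    """Return the name of the option with the lowest total annual cost."""
    return min(options, key=lambda name: options[name].total())


if __name__ == "__main__":
    # Placeholder inputs for illustration only, not real pricing.
    options = {
        "self_hosted_oss": AnnualCosts(0, 2000, 100, 30000, 20000, 50000),
        "cloud_hosted": AnnualCosts(60000, 200, 100, 0, 0, 0),
    }
    for name, costs in options.items():
        print(name, costs.total())
    print("cheapest:", cheapest_option(options))
```

The point of the exercise is less the final number than the fact that the license fee is only one of six lines. An option that is “free” on the first line can still lose on the total.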

When we’ve talked to users of open-source feature management or experimentation platforms, we’ve heard two common themes: significant, unexpected opportunity costs and unexpected scaling issues.

The opportunity cost of open source

Can you afford to forgo the benefits of experimentation for what could be a year while your DevOps and engineering teams host, scale, configure, and integrate an open-source solution?

Statsig saves product, engineering, and data teams from spending thousands of hours building and maintaining a duct-taped proprietary experimentation and analytics stack. The same logic applies to hosting an open-source solution: while your engineering teams are doing that, they are not focused on building your core product.

If you’re at a company where engineering resources are plentiful and the product is 100% complete and in maintenance mode, then maybe you are a candidate for the open-source approach—but that company doesn’t exist.

At a minimum, it takes several months to stand up an OSS experimentation platform at scale in production and operationalize it across the business, meeting all technical and business needs.

Several months is an ambitious target. You’ll see industry numbers claiming that implementation and adoption times for cloud-hosted SaaS platforms range from 3 to 18 months, and that is for a platform that is already hosted.

Now imagine if you had to host, scale, and maintain that implementation.

Hosting, scaling, and maintaining often fall to the busiest people at your company: key product and data engineers who understand the breadth of the product well enough to define relevant metrics, and who have the technical expertise to get up to speed on data and infrastructure problems in a new code base.

Do you want those people working on internal tools, or improving your product? We’d bet the answer is the latter, especially if you’re a small company.

The reality of self-hosting a high-throughput system

“Hosting [Testing Platform] at scale is complex. With our Kubernetes users, we've seen issues crop up in every part of the stack. In event ingestion, Kafka, ClickHouse, Postgres, Redis and within the application itself. [...] Often the issue is something a couple of layers deep and very hard to debug, involving long calls with expensive engineers on both sides. Even something as simple as a full disk would cause their instance of [Testing Platform] to be down for hours or days.”

This isn’t me talking. This is the CTO of a self-hosted testing platform describing the issues customers faced with its earlier self-hosted package, which has since been sunset.

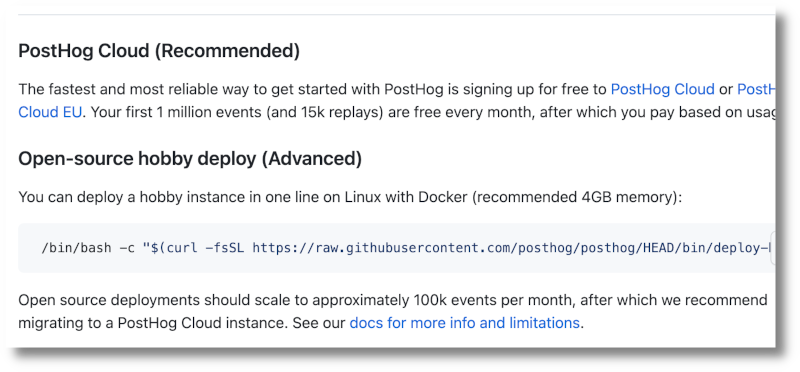

The challenges of hosting & scaling high-throughput systems are real. These platforms even describe their self-hosted solutions as “Hobbyist” and warn that they only scale to around 100k events per month. Further, they encourage the use of the Cloud-hosted version with a link to their pricing page.
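To get a feel for how quickly a product can outgrow that ceiling, here is a rough back-of-the-envelope sketch. The limit of roughly 100k events per month comes from the figure quoted above. The function names, the 30-day month, and the sample traffic numbers are assumptions made up for this illustration.

```python
# Approximate ceiling quoted for "Hobbyist" self-hosted deployments.
HOBBYIST_LIMIT_PER_MONTH = 100_000


def monthly_events(daily_active_users: int, events_per_user_per_day: int, days: int = 30) -> int:
    """Estimate monthly event volume from simple traffic assumptions."""
    return daily_active_users * events_per_user_per_day * days


def exceeds_hobbyist_limit(daily_active_users: int, events_per_user_per_day: int) -> bool:
    """True when the estimated monthly volume is above the quoted ceiling."""
    return monthly_events(daily_active_users, events_per_user_per_day) > HOBBYIST_LIMIT_PER_MONTH


if __name__ == "__main__":
    # Hypothetical traffic: 1,000 daily active users logging 10 events each.
    print(monthly_events(1_000, 10))
    print(exceeds_hobbyist_limit(1_000, 10))
```

Under these assumed inputs, even a modest user base lands well above the quoted ceiling, which is why the limit matters long before a company thinks of itself as operating “at scale.”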

The challenges of self-hosting span the entire spectrum of technical issues—including miscellaneous bugs, fatal crashes, DNS, Networking, API, SSL cert, Docker, Kafka, database issues, and other complex infra & deploy pipeline issues that I’m not smart enough to fully summarize.

Still don’t believe me? That’s okay, I will unabashedly share some direct links to GitHub issues for open-source projects such as this one and this one too. Really puts “free” into perspective. 😬

Deployments of a self-hosted solution look very different from one company to the next, so getting specific answers to the unique challenge you may be facing with Docker builds or host operating system compatibility may prove futile.

Sure, you will have the community to support you in resolving issues, but community members are not contractually bound to resolve system issues the way a vendor is under the enterprise SLA that most cloud providers offer.

At the end of the day, if you invest time and effort in setting up a tool, you should know it’s going to continue to work as your company scales. There’s nothing worse than investing valuable engineering time in setting up a tool, then having it break when you’re successful.

⚠️ So be advised, attempting to roll out a self-hosted open-source platform as your experimentation platform will prove to be more costly in a number of ways and should truly be left to the ‘hobbyists.’ If you’re trying to build a successful digital business, choosing a cloud-hosted provider will always be the more cost-effective path in the long run and will accelerate time to value at the start.
